This was answered in cross-validated and stackoverflow:

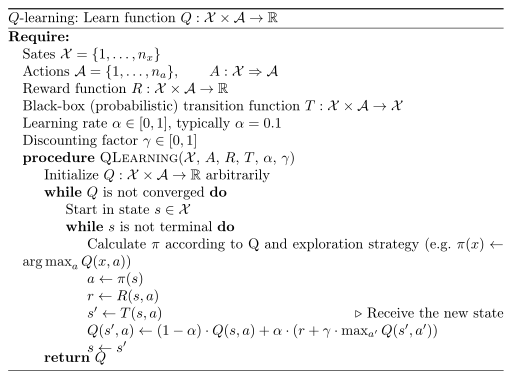

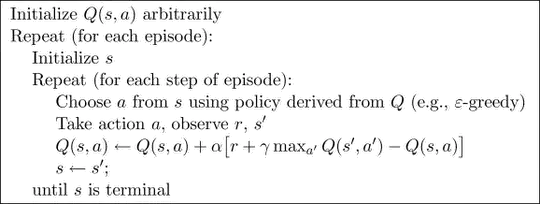

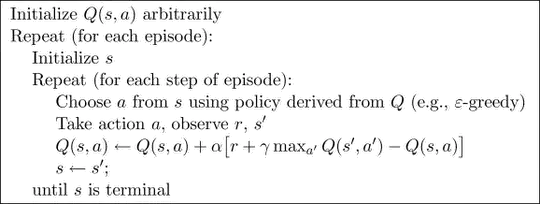

The reason that Q-learning is off-policy is that it updates its Q-values using the Q-value of the next state $s′$ and the greedy action $a′$. In other words, it estimates the return (total discounted future reward) for state-action pairs assuming a greedy policy were followed despite the fact that it's not following a greedy policy.

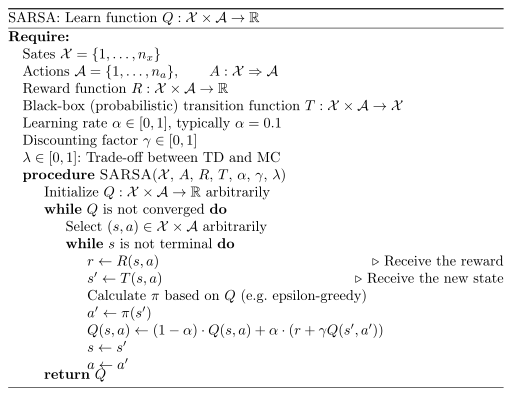

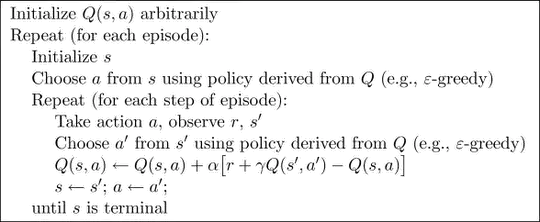

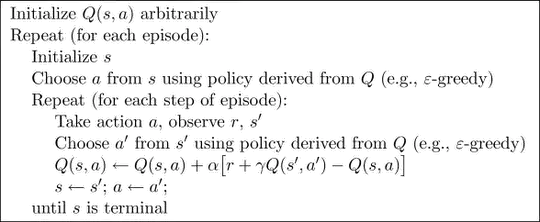

The reason that SARSA is on-policy is that it updates its Q-values using the Q-value of the next state $s′$ and the current policy's action $a′′$. It estimates the return for state-action pairs assuming the current policy continues to be followed.

These slides offer some insight on pros and cons of each one:

On-policy methods:

- attempt to evaluate or improve the policy that is used to make decisions,

- often use soft action choice, i.e. $\pi(s,a) >0, \forall a$,

- commit to always exploring and try to find the best policy that still explores,

- may become trapped in local minima.

Off-policy methods:

- evaluate one policy while following another, e.g. tries to evaluate the greedy policy while following a more exploratory scheme,

- the policy used for behaviour should be soft,

- policies may not be sufficiently similar,

- may be slower (only the part after the last exploration is reliable), but remains more flexible if alternative routes appear.

For reference, these are the formulations of Q-learning and SARSA from Sutton and Barto seminal book:

P.S.: I referenced and quoted the original answer from a different stackexchange site, as indicated in this meta question.