I am trying to build a model to estimate the ATE of Campaign B (B1) on CTR (click-through rate), with Campaign A as the baseline (B0). The campaign is represented by the column 'a_or_b'.

The other exogenous variables are 'nth_day' (the number of days a campaign has been running as of a given day), 'platform_Meta', and 'platform_StackAdapt' (both are binary dummy variables, with 'platform_Google' as the baseline).

I'm a data science graduate student, and I have taken a causal inference course, but it mostly covered OLS. After one attempt, it was very clear that OLS would not work well here.

After realizing that I was better off with a model that was bound by [0, 1] (100% is the max CTR possible), I figured I'd try my hand at a Beta Regression model using statsmodels.othermod.betareg.BetaModel.

Just for reference, here is the distribution of CTR. This is the result from df.describe() for more context about the data.

It's very skewed to the right, with most values being very small (but not 0).

I'm having a difficult time determining which of the models I've created (if any) provides a decent enough fit for me to trust the coefficient and p-value of 'a_or_b'.

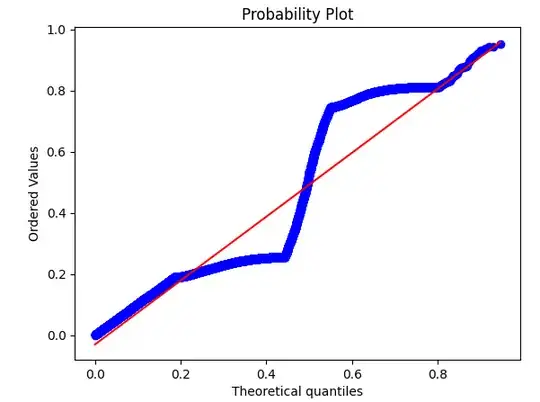

From what I understand, the lower AIC for Model 3 means it is a better fit. However, its QQ Plot and Residuals vs Fitted plot look very wonky, and I don't trust the results.

The results from statsmodels for both models are here.

Model 2: QQ Plot, Residuals vs Fitted

Model 3: QQ Plot, Residuals vs Fitted

I'm looking for some insights and direction on where to take this. Feel free to also tell me if I am completely off track. I'm here to learn!