I think I have understood the DBSCAN algorithm for 2D data points. Consider the example from the scikit-learn documentation, which first generates a set of data points:

from sklearn.datasets import make_blobs

from sklearn.preprocessing import StandardScaler

centers = [[1, 1], [-1, -1], [1, -1]]

X, labels_true = make_blobs(

n_samples=750, centers=centers, cluster_std=0.4, random_state=0

)

X = StandardScaler().fit_transform(X)

The resulting array X holds the (x, y) coordinates of the data points:

print(X)

[[ 0.49426097 1.45106697]

[-1.42808099 -0.83706377]

[ 0.33855918 1.03875871]

...

[-0.05713876 -0.90926105]

[-1.16939407 0.03959692]

[ 0.26322951 -0.92649949]]

DBSCAN has two parameters to choose: the neighborhood radius ϵ (eps) and the minimum number of samples (min_samples). As I understand it, the algorithm starts from an arbitrary sample and counts how many samples fall within its neighborhood of radius ϵ. If that neighborhood contains at least the minimum number of samples, it starts a cluster and keeps expanding it through the neighbors that also satisfy this condition. Samples that end up in no cluster are labeled as noise.
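To check my understanding of the steps above, here is a minimal pure-Python sketch of the procedure. It is not scikit-learn's implementation; the names `region_query` and `dbscan_sketch` and the seven toy points are made up for illustration, and I assume Euclidean distance and that a point counts as its own neighbor.

```python
import math

def region_query(points, i, eps):
    """Indices of all points within distance eps of points[i] (including i itself)."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

def dbscan_sketch(points, eps, min_samples):
    """Return one label per point: 0, 1, ... for clusters and -1 for noise."""
    labels = [None] * len(points)  # None means "not visited yet"
    cluster_id = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_samples:
            labels[i] = -1  # provisional noise; may become a border point later
            continue
        # points[i] is a core point: start a new cluster and expand it
        cluster_id += 1
        labels[i] = cluster_id
        queue = list(neighbors)
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster_id  # former noise becomes a border point
            if labels[j] is not None:
                continue  # already assigned to a cluster
            labels[j] = cluster_id
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_samples:
                queue.extend(j_neighbors)  # j is also a core point: keep expanding
    return labels

# Hypothetical toy data: two tight groups and one isolated point
toy_points = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1),
              (5.0, 5.0), (5.1, 5.0), (5.0, 5.1),
              (10.0, 0.0)]
toy_labels = dbscan_sketch(toy_points, eps=0.3, min_samples=3)
print(toy_labels)  # [0, 0, 0, 1, 1, 1, -1]
```

The isolated point has only itself within the radius, so it never reaches the minimum number of samples and keeps the noise label -1.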

So for the example above:

from sklearn.cluster import DBSCAN

db = DBSCAN(eps=0.3, min_samples=10).fit(X)

labels = db.labels_

import numpy as np

labels = np.unique(labels)

labels

Out[7]: array([-1, 0, 1, 2], dtype=int64)

It has found 3 clusters and some noise points.
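In scikit-learn's DBSCAN the label -1 marks noise, so it should not be counted as a cluster. A small sketch of how the two counts could be derived from per-point labels, using a made-up label list rather than the real output above:

```python
# Hypothetical per-point labels, invented for illustration (not the real output)
example_labels = [0, 0, 1, -1, 2, 1, -1, 0]

n_noise = example_labels.count(-1)        # points labeled as noise
cluster_ids = set(example_labels) - {-1}  # distinct labels, excluding noise
n_clusters = len(cluster_ids)

print(n_clusters, n_noise)  # 3 2
```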

Now, if I want to apply this to cluster groups in an image, how can I do it?

I found this example: https://www.youtube.com/watch?v=wyk_vkL2os8. I tried to reproduce it using an example image that I found here on Stack Overflow.

from sklearn.cluster import DBSCAN

from matplotlib.image import imread

import numpy as np

import matplotlib.pyplot as plt

#load image

image = imread('pZKmf.png')  # read the image into an array of pixel values

print(image.shape)#(217, 386, 4)

plt.imshow(image)

The original image:

# Flatten the image into a 2D array of pixels:

# one row per pixel and one column per channel, shape (n_pixels, 4)

X = image.reshape(-1,4)

print(X.shape) #(83762, 4)

#Apply the DBSCAN algorithm

dbscan = DBSCAN(eps=0.01, min_samples=500)

labels = dbscan.fit_predict(X)  # fit() returns the estimator itself; fit_predict() returns one label per row of X

print(labels.shape) #(83762,)

unique_labels = np.unique(labels)

#it corresponds to the number of clusters

#array([-1, 0, 1, 2], dtype=int64)

#It has found 3 clusters

segmented_img = labels.reshape(image.shape[:2])

print(segmented_img.shape) #(217, 386)

plt.imshow(segmented_img)

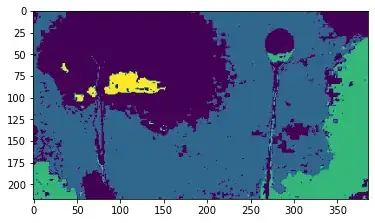

Here is the segmented image:

As far as I understand, this example treats pixel intensities as features, so we would have 4 images, which are the 4 samples.

I cannot figure out which are the samples to cluster. I would consider the pixels as the samples. How does clustering work in this case?
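To make the question concrete, here is a sketch of what the reshape does to the array shapes, using a made-up 2x3 RGBA array instead of the real image (`tiny_image` and `fake_labels` are hypothetical). Since scikit-learn estimators expect input of shape (n_samples, n_features), each row, that is each pixel, would be one sample under that convention, and with the default Euclidean metric the radius would be measured between 4-value pixel vectors.

```python
import numpy as np

# Hypothetical tiny "image": 2 rows x 3 columns x 4 channels, made-up values
tiny_image = np.arange(2 * 3 * 4, dtype=float).reshape(2, 3, 4)

# Same reshape as in the code above: one row per pixel, one column per channel
pixels = tiny_image.reshape(-1, 4)
print(pixels.shape)  # (6, 4)

# Row k holds the 4 channel values of the pixel at (k // 3, k % 3)
print(np.array_equal(pixels[4], tiny_image[1, 1]))  # True

# Euclidean distance between two pixels in the 4-dimensional channel space
d = np.linalg.norm(pixels[0] - pixels[1])
print(d)  # 8.0

# One label per pixel can be reshaped back to the image grid
fake_labels = np.array([0, 0, 1, 1, -1, 0])  # invented labels, not DBSCAN output
label_image = fake_labels.reshape(tiny_image.shape[:2])
print(label_image.shape)  # (2, 3)
```

Note that in this layout the pixel positions are not part of the features, so two pixels with the same color are at distance 0 wherever they sit in the image.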

EDIT: Thanks for the answers. Using a neural network for image embedding is interesting, and I will certainly try that idea. However, I was asking about something more basic: how does clustering an image work? What are the samples? What are the features? If DBSCAN uses a radius to determine the size of a cluster, in which space should that radius be considered? Beyond DBSCAN, I am also a bit confused about what clustering an image means.