> Why don't we increase it to 128 and more?

You can. The number of filters in each convolutional layer is a hyperparameter: try changing it and see whether validation performance gets better or worse.
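One thing to keep in mind when widening layers is cost. The sketch below counts the trainable parameters of a stack of standard 2D convolutions for two choices of filter counts. The stack itself (3 input channels, 3x3 kernels, one 32-filter layer followed by three equal-width layers) is an assumption made for illustration, not necessarily the network in the question, and the helper names are hypothetical.

```python
def conv2d_params(kernel_size, in_channels, filters, use_bias=True):
    """Trainable parameters of one standard 2D convolutional layer."""
    # Each filter has kernel_size x kernel_size weights per input channel.
    weights = kernel_size * kernel_size * in_channels * filters
    # Optionally one bias per filter.
    return weights + (filters if use_bias else 0)


def total_conv_params(filter_counts, in_channels=3, kernel_size=3):
    """Sum parameters over conv layers applied one after another."""
    total = 0
    for filters in filter_counts:
        total += conv2d_params(kernel_size, in_channels, filters)
        in_channels = filters  # the next layer sees one channel per filter
    return total


# Assumed example stacks: the last three layers use 64 vs. 128 filters.
baseline = total_conv_params([32, 64, 64, 64])
wider = total_conv_params([32, 128, 128, 128])
print(baseline, wider)  # 93248 333056
```

Under these assumptions, doubling the width of the last three layers roughly multiplies the convolutional parameter count by 3.6, so the question becomes whether the extra capacity actually pays off on your data.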

What's the point of having multiple filters in each convolutional layer? Each filter will hopefully compute a different representation of the input, which the following layer can use to build different, higher-level representations of the input (see also this answer).
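As a toy illustration of "a different representation per filter", the sketch below applies two hand-written 2x2 filters to the same small image using plain Python. In a real CNN the filter weights are learned rather than hand-picked; the image, the two kernels, and the `conv2d_valid` helper are all assumptions made up for this example.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` without padding (cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# 4x4 image containing a single vertical edge (dark left, bright right).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Two hand-picked filters: one responds to vertical edges, one to horizontal.
vertical_edge = [[-1, 1], [-1, 1]]
horizontal_edge = [[-1, -1], [1, 1]]

# One feature map per filter, like the output channels of a conv layer.
feature_maps = [conv2d_valid(image, k) for k in (vertical_edge, horizontal_edge)]
print(feature_maps[0])  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
print(feature_maps[1])  # [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
```

The first filter fires along the edge while the second stays silent, so each output channel tells the next layer something different about the same input.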

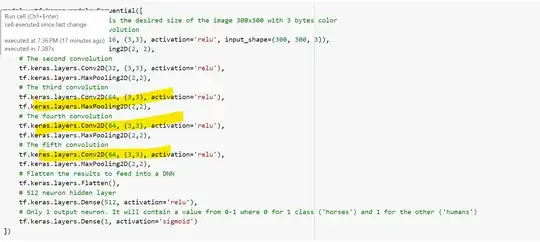

> I'm curious why the last three conv2D layers are all 64

Why are those 64? Because the creator of the network chose 64, according to some criteria (their knowledge, some experimentation, a neural architecture search, ...).

> and if these are redundant in terms of the model's performance.

Generally speaking, deeper models may compute a more detailed representation of the input and achieve better performance if trained correctly (see, for example, VGG, which extensively examines the depth of a CNN). Whether the layers are redundant for a specific dataset should be evaluated by experimentation.