If you're using an RNN architecture as your encoder, say an LSTM or GRU, then the output of your encoder is the hidden-state representation of each time step in your input. So for each example of size [sequence_len, input_dims] that you feed the encoder, the output of the encoder is a learned representation of that sequence of size [sequence_len, hidden_dims]. If you look at an illustration of the LSTM cell, you'll see that there actually is no output $\hat{y}$ like you might expect, only a hidden state (and cell state). If you do want to use this encoder output to make some kind of prediction, you need an additional linear layer or similar. The hidden state is a learned internal representation of size [hidden_dims], not a prediction in the space of your targets.
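To make the shapes concrete, here is a minimal sketch in plain Python with no deep-learning library. For brevity it assumes a vanilla tanh RNN cell instead of an LSTM/GRU and uses random weights instead of trained ones. The names `rnn_encoder` and `linear_head` are hypothetical. The only point is the shapes: the encoder emits one [hidden_dims] vector per time step, and you need an extra linear layer to map those into a target space of size `output_dims`.

```python
import math
import random

def make_matrix(rows, cols, rng):
    """Random weights in [-0.5, 0.5]; a stand-in for learned parameters."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def rnn_encoder(inputs, w_x, w_h):
    """Vanilla tanh RNN: h_t = tanh(W_x x_t + W_h h_{t-1}).
    Returns one hidden state per time step: [sequence_len][hidden_dims]."""
    h = [0.0] * len(w_h)
    states = []
    for x_t in inputs:
        h = [math.tanh(a + b) for a, b in zip(matvec(w_x, x_t), matvec(w_h, h))]
        states.append(h)
    return states

def linear_head(h, w_out, b_out):
    """Extra linear layer mapping a hidden state into the target space."""
    return [y + b for y, b in zip(matvec(w_out, h), b_out)]

rng = random.Random(0)
sequence_len, input_dims, hidden_dims, output_dims = 5, 3, 4, 2
w_x = make_matrix(hidden_dims, input_dims, rng)
w_h = make_matrix(hidden_dims, hidden_dims, rng)
w_out = make_matrix(output_dims, hidden_dims, rng)
b_out = [0.0] * output_dims

inputs = [[rng.gauss(0, 1) for _ in range(input_dims)] for _ in range(sequence_len)]
states = rnn_encoder(inputs, w_x, w_h)
print(len(states), len(states[0]))  # prints: 5 4  -> [sequence_len, hidden_dims]
predictions = [linear_head(h, w_out, b_out) for h in states]
print(len(predictions), len(predictions[0]))  # prints: 5 2  -> [sequence_len, output_dims]
```

In a real framework the LSTM/GRU layer and the linear layer would be separate modules for the same reason: the recurrent layer only ever produces hidden states.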

Now imagine you do feed this entire hidden-state encoding to another RNN. Then you don't really have an encoder-decoder so much as a stacked RNN with multiple layers. The next RNN layer may produce a different representation of the input, but it is still just another representation of the same input sequence, with one output per input time step. Presumably you want your decoder to output a different sequence, possibly of a different length; this is what we call a Seq2Seq task.
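Here is a small sketch of that situation, again assuming a vanilla tanh RNN with random, untrained weights (the helper `rnn_layer` is hypothetical). Feeding the full hidden-state sequence of one layer into a second layer just gives you a two-layer stacked RNN, and the "decoder" output stays aligned step-for-step with the input, whatever the input length.

```python
import math
import random

def make_matrix(rows, cols, rng):
    """Random weights in [-0.5, 0.5]; a stand-in for learned parameters."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def rnn_layer(inputs, w_x, w_h):
    """Vanilla tanh RNN layer; emits exactly one hidden state per input step."""
    h = [0.0] * len(w_h)
    states = []
    for x_t in inputs:
        h = [math.tanh(a + b) for a, b in zip(matvec(w_x, x_t), matvec(w_h, h))]
        states.append(h)
    return states

rng = random.Random(1)
input_dims, hidden_dims = 3, 4
# Layer 1 reads the raw inputs; layer 2 reads layer 1's full hidden-state sequence.
layer1 = (make_matrix(hidden_dims, input_dims, rng), make_matrix(hidden_dims, hidden_dims, rng))
layer2 = (make_matrix(hidden_dims, hidden_dims, rng), make_matrix(hidden_dims, hidden_dims, rng))

for sequence_len in (3, 7):
    inputs = [[rng.gauss(0, 1) for _ in range(input_dims)] for _ in range(sequence_len)]
    out1 = rnn_layer(inputs, *layer1)
    out2 = rnn_layer(out1, *layer2)
    # The second layer's output length is tied to the input length.
    print(sequence_len, len(out1), len(out2))  # prints: 3 3 3, then 7 7 7
```

Nothing in this setup lets the second layer decide how long its output should be, which is exactly what a Seq2Seq decoder needs to do.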

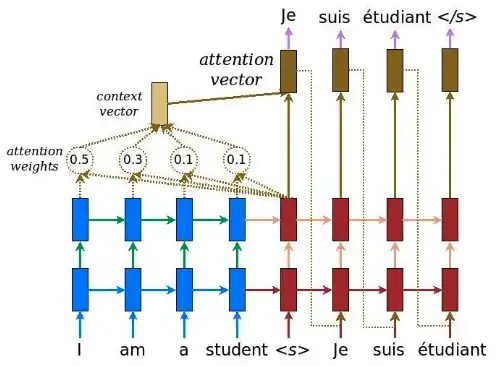

For a Seq2Seq task where you'd want an encoder-decoder model, you already know that we discard the first sequence_len - 1 hidden states and only feed the last one to the decoder. This last hidden state is also sometimes referred to as the "context". We can make an analogy with a common human task: imagine an exam question asking you to write a paragraph about a given topic. When reading the question, you're not memorizing the exact order of the words to figure out what to write as your answer; I could phrase the same question a dozen different ways and still be asking for the same thing. Instead, you try to extract the key parts of the sentence to figure out what you need to give as a response. The encoder is trying to do something similar: figure out the important bits of context and pass them on to the decoder.

Essentially, we are trying to get the encoder to learn to represent everything relevant in the input sequence in this single context vector of size [hidden_dims]. It then gives this context to the decoder, and it's the decoder's job to generate the appropriate output sequence for that context. Part of that job is outputting individual time steps/words/what have you in an order that makes sense, which might not be related at all to the order of elements in the input sequence. For this reason, the full encoder "output", with the hidden state at every time step, is not necessary for the decoder in this setup!
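A minimal end-to-end sketch of this idea follows, under the same assumptions as before (vanilla tanh RNN cells, random untrained weights, so the generated tokens are meaningless). The helpers `encode` and `decode`, the token ids `start=0`/`end=1`, and greedy decoding with a `max_len` cap are all illustrative choices, not a specific library's API. The encoder keeps only its last hidden state as the context, so inputs of any length collapse to one [hidden_dims] vector. The decoder starts from that context and decides its own output length.

```python
import math
import random

def make_matrix(rows, cols, rng):
    """Random weights in [-0.5, 0.5]; a stand-in for learned parameters."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def rnn_step(x, h, w_x, w_h):
    """One vanilla tanh RNN step."""
    return [math.tanh(a + b) for a, b in zip(matvec(w_x, x), matvec(w_h, h))]

def encode(inputs, w_x, w_h):
    """Run over the whole input but keep only the LAST hidden state."""
    h = [0.0] * len(w_h)
    for x_t in inputs:
        h = rnn_step(x_t, h, w_x, w_h)
    return h  # the context vector, size [hidden_dims]

def decode(context, w_x, w_h, w_out, vocab_size, start=0, end=1, max_len=10):
    """Greedy decoding: the context is the decoder's initial hidden state, and
    each step feeds back the previous token as a one-hot vector. The output
    length is set by the decoder (end token or max_len), not by the input."""
    h, token, outputs = context, start, []
    for _ in range(max_len):
        x = [1.0 if i == token else 0.0 for i in range(vocab_size)]
        h = rnn_step(x, h, w_x, w_h)
        logits = matvec(w_out, h)
        token = max(range(vocab_size), key=lambda i: logits[i])
        if token == end:
            break
        outputs.append(token)
    return outputs

rng = random.Random(2)
input_dims, hidden_dims, vocab_size = 3, 4, 6
enc = (make_matrix(hidden_dims, input_dims, rng), make_matrix(hidden_dims, hidden_dims, rng))
dec = (make_matrix(hidden_dims, vocab_size, rng), make_matrix(hidden_dims, hidden_dims, rng))
w_out = make_matrix(vocab_size, hidden_dims, rng)

for sequence_len in (2, 9):
    inputs = [[rng.gauss(0, 1) for _ in range(input_dims)] for _ in range(sequence_len)]
    context = encode(inputs, *enc)
    tokens = decode(context, *dec, w_out, vocab_size)
    # The context is always hidden_dims long; the token list is at most max_len.
    print(sequence_len, len(context), tokens)
```

Note that `decode` never sees the intermediate encoder states, only `context`; that is the sense in which the per-time-step encoder output isn't needed here.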