I have a set of test measurement data and a semi-empirical model with 18 parameters, which I need to estimate so that the model fits the data well. So far I have managed to find and optimise the parameters using optimisation and global optimisation algorithms in MATLAB.

Now I would like to explore other approaches to parameter estimation. I have read some papers that describe using neural networks (NNs) for this. I am new to NNs and have no idea whether this is even possible.

My idea is to create a two-layer network with 18 input and output neurons, but I am not sure what kind of transfer function would be appropriate for this problem.

The formula I have to fit, and whose parameters I have to find, looks like this:

$y = D \sin(C \arctan(Bx - E(Bx - \arctan(Bx))))$

where $B$, $C$, $D$ and $E$ are the macro-coefficients. The micro-coefficients express how each of these primary (macro) coefficients varies with respect to some other data.
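For reference, the formula above could be written in MATLAB roughly as follows. This is only a sketch: the handle name `model`, the parameter ordering `p = [B C D E]`, the numeric values and the input range are placeholders chosen for illustration, not my fitted values or my real data.

```matlab
% Sketch only: evaluates the formula above for fixed macro-coefficients.
% Assumptions (not from my real setup): the handle name, the ordering
% p = [B C D E], the numeric values and the range of x.
model = @(p, x) p(3) .* sin(p(2) .* atan(p(1).*x - p(4) .* (p(1).*x - atan(p(1).*x))));

p = [10, 1.9, 1, 0.97];      % placeholder values for B, C, D, E
x = linspace(-1, 1, 201);    % placeholder input range, includes x = 0
y = model(p, x);             % model output, same size as x
```

In the full model, $B$, $C$, $D$ and $E$ would not be fixed like this but computed from the micro-coefficients and the other data.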

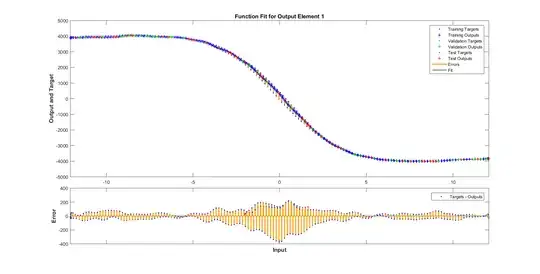

This is what my data looks like. How would you create a network in MATLAB for this problem? Could you give me some hints and a push in the right direction?

Thanks in advance.