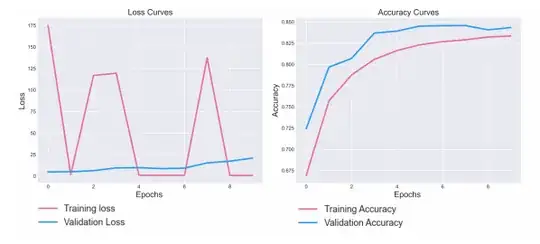

I have a problem. I trained a model and, as you can see, the loss curve zigzags. In addition, the validation loss is increasing. What does this mean if you only look at the training curve? Is this overfitting?

The model also does not generalise. Accuracy on training and validation is 0.84, but on the test set it is 0.1. Does this confirm the overfitting assumption? And can overfitting come from having trained too little? I only used two dense layers.
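To make the question concrete, here is a minimal sketch of how the curves could be checked numerically, assuming the per-epoch training and validation losses are available as plain Python lists. The function name `diagnose_curves`, the `patience` threshold, and the example numbers are hypothetical and are not read from the plot above.

```python
def diagnose_curves(train_loss, val_loss, patience=3):
    """Summarise a pair of loss curves (hypothetical helper, not a library API).

    Flags suspected overfitting when the validation loss has not improved
    for at least `patience` epochs and ends above the training loss.
    """
    # Epoch (0-indexed) at which the validation loss was lowest.
    best_epoch = min(range(len(val_loss)), key=val_loss.__getitem__)
    # Number of epochs trained after that minimum without beating it.
    epochs_since_best = len(val_loss) - 1 - best_epoch
    # Gap between validation and training loss at the last epoch.
    final_gap = val_loss[-1] - train_loss[-1]
    return {
        "best_epoch": best_epoch,
        "epochs_since_best": epochs_since_best,
        "final_gap": final_gap,
        "overfitting_suspected": epochs_since_best >= patience and final_gap > 0,
    }


# Made-up example values: training loss keeps falling, validation loss turns upward.
train = [1.0, 0.8, 0.6, 0.5, 0.4, 0.3]
val = [1.1, 0.9, 0.8, 0.85, 0.9, 1.0]
report = diagnose_curves(train, val)
print(report)
```

If the validation loss bottoms out early and then rises while the training loss keeps falling, that pattern is usually read as overfitting. A large drop from validation to test accuracy, on the other hand, may also point to a difference between the test data and the training data, which this check cannot detect.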