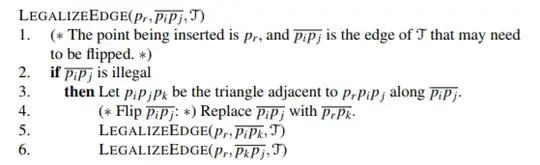

I am a math major without a strong background in theoretical computer science. I have to show that the worst-case running time of the Delaunay triangulation algorithm is $O(n^2)$; I already know that its expected running time is $O(n\log n)$. Can someone help me with this?
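
Here is a partial sketch of the bound, not a full proof. It assumes the algorithm in the image is the randomized incremental construction with edge flips, as in de Berg et al., *Computational Geometry: Algorithms and Applications*, Chapter 9. In that algorithm the points $p_1,\dots,p_n$ are inserted into a large auxiliary triangle with 3 extra vertices. Inserting $p_r$ splits the triangle containing it into 3 (or 4, if $p_r$ lies on an edge). Each edge flip then replaces two triangles with two new ones incident to $p_r$ and adds one edge at $p_r$. The symbols $f_r$ (number of flips at step $r$) and $\deg_r(p_r)$ (degree of $p_r$ right after step $r$, counting the auxiliary vertices) are introduced here for illustration:

$$
\begin{aligned}
\#\{\text{triangles created at step } r\} &\le 3 + 2f_r = 2\deg_r(p_r) - 3 \quad (\text{since } \deg_r(p_r) = 3 + f_r),\\
\deg_r(p_r) &\le (r-1) + 3 = r + 2,\\
\sum_{r=1}^{n} \bigl(2(r+2) - 3\bigr) &= \sum_{r=1}^{n} (2r+1) = n^2 + 2n = O(n^2).
\end{aligned}
$$

In the edge case, $\deg_r(p_r) = 4 + f_r$ and $4 + 2f_r = 2\deg_r(p_r) - 4$, so the same bound holds. Each flip and each edge test takes constant time, so the restructuring work over all $n$ insertions is $O(n^2)$ in the worst case. The worst-case cost of the point-location step needs a separate argument.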
