Some chap said the following:

Anyone who attempts to generate random numbers by deterministic means is, of course, living in a state of sin.

That's always taken to mean that you can't generate true random numbers with just a computer. And he said that when computers were the equivalent size of a single Intel 8080 microprocessor (~6000 valves). Computers have gotten more complex, and I believe that von Von Neumann's statement may no longer be true. Consider that an implemented software only algorithm is impossible. They run on physical hardware. True random number generators and their entropy sources are also made of hardware.

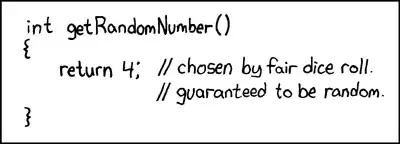

This Java fragment put into a loop:

file.writeByte((byte) (System.nanoTime() & 0xff));

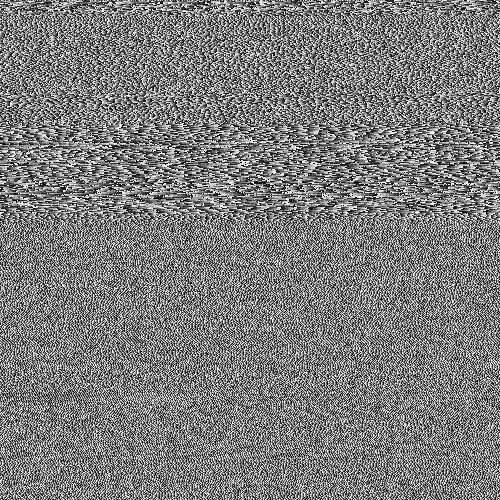

can create a data file which I've represented as an image:

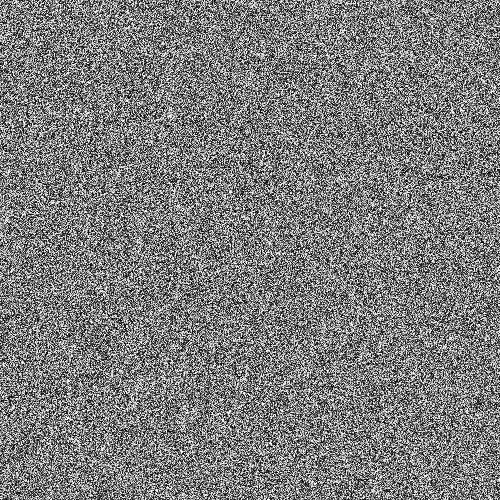

You can see structure, but with a lot of randomness as well. The thing of interest is that this PNG file is 232KB in size, yet contains 250,000 grey scale pixels. The PNG compression level was maximum. That's only a compression ratio of 7%, ie. fairly non compressible. What's also interesting is that the file is unique. Every generation of this file is a slightly different pattern and has similar ~7% compressibility. I highlight this as it's critical to my argument. That's ~7bits/byte entropy. That will reduce of course upon use of a stronger compression algorithm. But not reduce to anything near 0 bits/byte. A better impression can be had by taking the above image and substituting its colour map for a random one:-

Most of the structure (in the top half) disappears as it was just sequences of similar but marginally different values. Is this a true entropy source created by just executing a Java program on a multi taking operating system? Not a uniformly distributed random number generator, but the entropy source for one? An entropy source built of software running on physical hardware that just happens to be a PC.

Supplemental

In order to confirm that every image generates fresh entropy without a fixed pattern common to all, 10 consecutive images were generated. These were then concatenated and compressed with the strongest archiver I can get to compile (paq8px). This process will eliminate all common data, including auto correlation leaving only the changes /entropy.

The concatenated file compressed to ~66%, which leads to an entropy rate of ~5.3 bits/byte or 10.5Mbits /image. A surprising amount of entropy $ \smile $

Supplemental 2

There have been negative comments that my entropy by compression test methodology is flawed, only giving a loose upper bound estimate. So I've now run the concatenated file though NIST's official cryptographic entropy assessment test, SP800-90B_EntropyAssessment. This is as good as it gets for non IID entropy measurement. This is the report (sorry this question is getting long, but the issue is complex):-

Running non-IID tests...

Entropic statistic estimates:

Most Common Value Estimate = 7.88411

Collision Test Estimate = 6.44961

Markov Test Estimate = 5.61735

Compression Test Estimate = 6.65691

t-Tuple Test Estimate = 7.40114

Longest Reapeated Substring Test Estimate = 8.00305

Predictor estimates:

Multi Most Common in Window (MultiMCW) Test: 100% complete

Correct: 3816

P_avg (global): 0.00397508

P_run (local): 0.00216675

Multi Most Common in Window (Multi MCW) Test = 7.9748

Lag

Test: 100% complete

Correct: 3974

P_avg (global): 0.00413607

P_run (local): 0.00216675

Lag Prediction Test = 7.91752

MultiMMC Test: 100% complete

Correct: 3913

P_avg (global): 0.00407383

P_run (local): 0.00216675

Multi Markov Model with Counting (MultiMMC) Prediction Test = 7.9394

LZ78Y Test: 99% complete

Correct: 3866

P_avg (global): 0.00402593

P_run (local): 0.00216675

LZ78Y Prediction Test = 7.95646

Min Entropy: 5.61735

The result is that NIST believes that I have generated 5.6 bits/byte of entropy. My DIY compression estimate puts this at 5.3 bits/byte, marginally more conservative.

-> The evidence seems to support the notion that a computer just running software can generate real entropy. And that von Neumann was wrong (but perhaps correct for his time).

I offer the following references that might support my claim:-

Are there any stochastic models of non determinism in the rate of program execution?

WCET Analysis of Probabilistic Hard Real-Time Systems

Is there a software algorithm that can generate a non-deterministic chaos pattern? and the relevance of chaotic effects.

Parallels with the Quantum entropic uncertainty principle

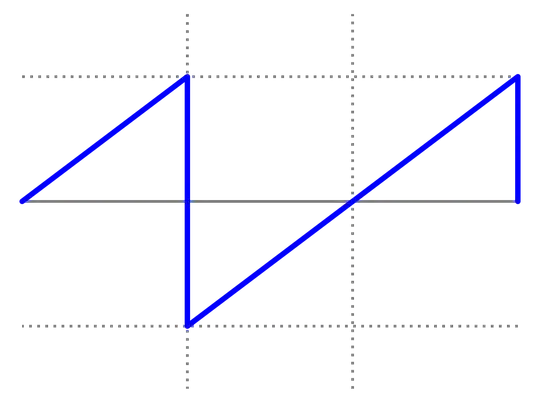

Aleksey Shipilёv's blog entry regarding the chaotic behaviour of nanoTime(). His scatter plot is not dissimilar to mine.