To test whether an algorithm for some problem is correct, the usual starting point is to run the algorithm by hand on a number of simple test cases: a few example problem instances, including a few simple "corner cases". This is a useful heuristic. It's a great way to quickly weed out many incorrect attempts at an algorithm, and to understand why a particular algorithm doesn't work.
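For concreteness, here is a minimal sketch of what this heuristic amounts to, assuming we have a slow but obviously correct reference solution to compare against. The problem (maximum subarray sum), the candidate (Kadane's algorithm), and the helper names `candidate_max_subarray`, `brute_force_max_subarray`, and `try_some_test_cases` are all hypothetical, chosen only for illustration. The check runs a few hand-picked corner cases plus a dozen small random inputs, which is roughly "QuickCheck by hand".

```python
import random


def brute_force_max_subarray(xs):
    """Reference answer: try every non-empty contiguous subarray (O(n^3))."""
    return max(sum(xs[i:j]) for i in range(len(xs)) for j in range(i + 1, len(xs) + 1))


def candidate_max_subarray(xs):
    """Hypothetical algorithm under test (here, Kadane's algorithm)."""
    best = current = xs[0]
    for x in xs[1:]:
        current = max(x, current + x)  # extend the current run or start fresh at x
        best = max(best, current)
    return best


def try_some_test_cases(candidate, reference, trials=12, max_len=6, seed=0):
    """Run the candidate on a few corner cases plus `trials` small random inputs.

    Returns the inputs on which candidate and reference disagree. An empty
    list only means no counterexample was found among these small inputs;
    it says nothing about larger or unusually structured inputs.
    """
    rng = random.Random(seed)
    corner_cases = [[5], [-3], [0, 0], [-1, -2, -3], [2, -1, 2]]
    random_cases = [
        [rng.randint(-5, 5) for _ in range(rng.randint(1, max_len))]
        for _ in range(trials)
    ]
    return [xs for xs in corner_cases + random_cases if candidate(xs) != reference(xs)]


print(try_some_test_cases(candidate_max_subarray, brute_force_max_subarray))  # prints []
```

A flawed candidate such as `lambda xs: max(xs)` is caught immediately (it fails on `[2, -1, 2]`). But an empty result for a candidate only tells you that it agrees with the reference on inputs of length at most `max_len` drawn from this particular distribution.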

However, when learning algorithms, some students are tempted to stop there: if their algorithm works correctly on a handful of examples, including all of the corner cases they can think to try, then they conclude that the algorithm must be correct. There's always a student who asks: "Why do I need to prove my algorithm correct, if I can just try it on a few test cases?"

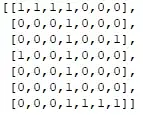

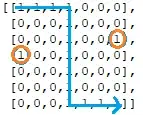

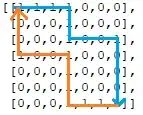

So, how do you fool the "try a bunch of test cases" heuristic? I'm looking for some good examples to show that this heuristic is not enough. In other words, I am looking for one or more examples of an algorithm that superficially looks like it might be correct, and that outputs the right answer on all of the small inputs that anyone is likely to come up with, but where the algorithm actually doesn't work. Maybe the algorithm just happens to work correctly on all small inputs and only fails for large inputs, or only fails for inputs with an unusual pattern.

Specifically, I am looking for:

An algorithm. The flaw has to be at the algorithmic level. I am not looking for implementation bugs. (For instance, at a bare minimum, the example should be language-agnostic, and the flaw should relate to algorithmic concerns rather than software engineering or implementation issues.)

An algorithm that someone might plausibly come up with. The pseudocode should look at least plausibly correct (e.g., code that is obfuscated or obviously dubious is not a good example). Bonus points if it is an algorithm that some student actually came up with when trying to solve a homework or exam problem.

An algorithm that would pass a reasonable manual test strategy with high probability. Someone who tries a few small test cases by hand should be unlikely to discover the flaw. For instance, "simulate QuickCheck by hand on a dozen small test cases" should be unlikely to reveal that the algorithm is incorrect.

Preferably, a deterministic algorithm. I've seen many students think that "try some test cases by hand" is a reasonable way to check whether a deterministic algorithm is correct, but I suspect most students would not assume that trying a few test cases is a good way to verify probabilistic algorithms. For probabilistic algorithms, there's often no way to tell whether any particular output is correct, and you can't hand-crank enough examples to do any useful statistical test on the output distribution. So, I'd prefer to focus on deterministic algorithms, as they get more cleanly to the heart of student misconceptions.

I'd like to teach the importance of proving your algorithm correct, and I'm hoping to use a few examples like this to help motivate proofs of correctness. I would prefer examples that are relatively simple and accessible to undergraduates; examples that require heavy machinery or a ton of mathematical/algorithmic background are less useful. Also, I don't want algorithms that are "unnatural"; while it might be easy to construct some weird artificial algorithm to fool the heuristic, if it looks highly unnatural or has an obvious backdoor constructed just to fool this heuristic, it probably won't be convincing to students. Any good examples?