Let $f(n) = 3n+3$. I want to show that

$f(n) = O(n)$

By definition, there must exist constants $c_1 > 0$ and $n_0$ such that, for all $n \geq n_0$:

$3n+3 \leq c_1 \cdot n$

Dividing both sides by $n$ (for $n > 0$):

$3+\frac{3}{n} \leq c_1$

This means the left-hand side is bounded by a constant for every $n \geq 1$, so a suitable $c_1$ exists. It also shows that $c_1$ must be strictly greater than $3$, because $3+\frac{3}{n} > 3$ for every positive $n$.

For example, if we take $c_1 = 3.5$, the inequality $3+\frac{3}{n} \leq 3.5$ holds for $n \geq 6$, so $n_0 = 6$.
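The threshold can also be checked numerically. Below is a minimal Python sketch, not part of the original working: the names `f`, `bound`, `smallest_n0` and the search cap `limit` are hypothetical and chosen only for illustration. It searches for the first positive integer $n$ at which $3n+3 \leq c_1 \cdot n$ holds.

```python
def f(n):
    """f(n) = 3n + 3, the function being bounded."""
    return 3 * n + 3


def bound(n, c1=3.5):
    """c1 * g(n) with g(n) = n; c1 = 3.5 is the example constant."""
    return c1 * n


def smallest_n0(c1=3.5, limit=1000):
    """Return the first n in 1..limit with f(n) <= c1 * n, or None.

    Because 3 + 3/n decreases as n grows, once the inequality holds
    it keeps holding for every larger n. `limit` is an arbitrary
    search cap (an assumption of this sketch).
    """
    for n in range(1, limit + 1):
        if f(n) <= bound(n, c1):
            return n
    return None


if __name__ == "__main__":
    print(smallest_n0(3.5))
```

With `c1 = 3.5` the search returns `6`, which agrees with the value worked out by hand. With `c1 = 3` it returns `None`, consistent with $c_1$ having to be strictly greater than $3$.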

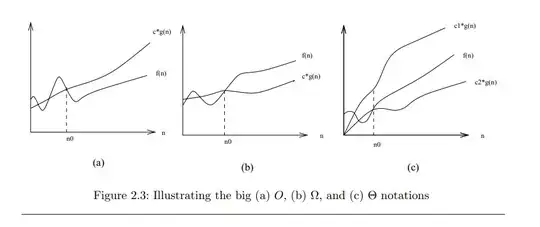

Now I plot the graph (because I want to understand this concept graphically).

With $g(n) = n$ and $c_1 = 3.5$, the graph of $c_1 \cdot g(n)$ lies below the graph of $f(n)$. I took the following values for both functions:

$f(n)=3n+3$

$ \begin{matrix} n & f(n)\\ 1 & 6\\ 2 & 9\\ 3 & 12\\ -2 & -3 \end{matrix} $

for $c_1 \cdot g(n) = 3.5n$ (that is, $g(n) = n$ and $c_1 = 3.5$)

$ \begin{matrix} n & c_1 \cdot g(n)\\ 1 & 3.5\\ 2 & 7\\ 3 & 10.5\\ -2 & -7 \end{matrix} $

If I plot the graph from these values, $c_1 \cdot g(n) = 3.5n$ does not bound $f(n) = 3n+3$ from above.

Can anyone explain this concept to me using a graph?