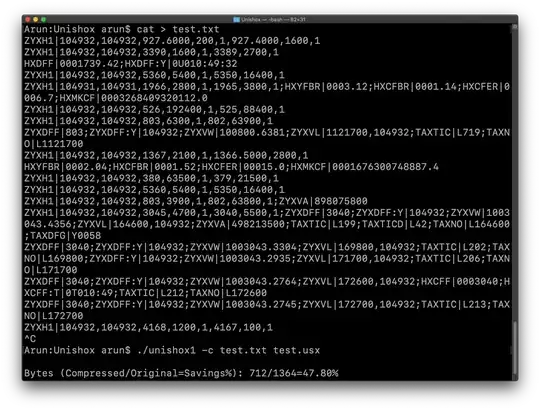

I'm exploring algorithms to compress small strings like the following. Every line is compressed individually, so the compression has to pay off even for strings this short:

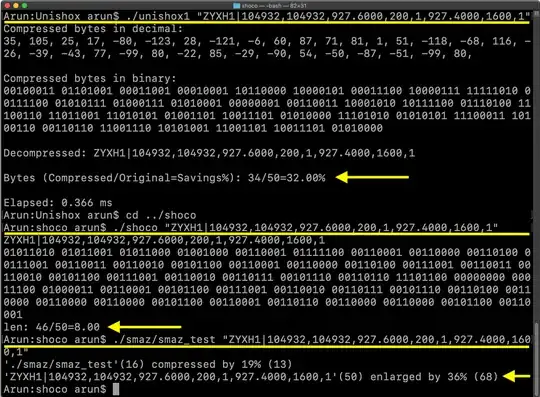

ZYXH1|104932,104932,927.6000,200,1,927.4000,1600,1

ZYXH1|104932,104932,3390,1600,1,3389,2700,1

HXDFF|0001739.42;HXDFF:Y|0U010:49:32

ZYXH1|104932,104932,5360,5400,1,5350,16400,1

ZYXH1|104931,104931,1966,2800,1,1965,3800,1;HXYFBR|0003.12;HXCFBR|0001.14;HXCFER|0006.7;HXMKCF|0003268409320112.0

ZYXH1|104932,104932,526,192400,1,525,88400,1

ZYXH1|104932,104932,803,6300,1,802,63900,1

ZYXDFF|803;ZYXDFF:Y|104932;ZYXVW|100800.6381;ZYXVL|1121700,104932;TAXTIC|L719;TAXNO|L1121700

ZYXH1|104932,104932,1367,2100,1,1366.5000,2800,1

HXYFBR|0002.04;HXCFBR|0001.52;HXCFER|00015.0;HXMKCF|0001676300748887.4

ZYXH1|104932,104932,380,63500,1,379,21500,1

ZYXH1|104932,104932,5360,5400,1,5350,16400,1

ZYXH1|104932,104932,803,3900,1,802,63800,1;ZYXVA|898075800

ZYXH1|104932,104932,3045,4700,1,3040,5500,1;ZYXDFF|3040;ZYXDFF:Y|104932;ZYXVW|1003043.4356;ZYXVL|164600,104932;ZYXVA|498213500;TAXTIC|L199;TAXTICD|L42;TAXNO|L164600;TAXDFG|Y0058

ZYXDFF|3040;ZYXDFF:Y|104932;ZYXVW|1003043.3304;ZYXVL|169800,104932;TAXTIC|L202;TAXNO|L169800

ZYXDFF|3040;ZYXDFF:Y|104932;ZYXVW|1003043.2935;ZYXVL|171700,104932;TAXTIC|L206;TAXNO|L171700

ZYXDFF|3040;ZYXDFF:Y|104932;ZYXVW|1003043.2764;ZYXVL|172600,104932;HXCFF|0003040;HXCFF:T|0T010:49;TAXTIC|L212;TAXNO|L172600

ZYXDFF|3040;ZYXDFF:Y|104932;ZYXVW|1003043.2745;ZYXVL|172700,104932;TAXTIC|L213;TAXNO|L172700

ZYXH1|104932,104932,4168,1200,1,4167,100,1

I have a couple of megabytes of data like the above, which lets me build a static statistical model, e.g. for Huffman coding. With such a static model, I can embed it in the decompressor, removing the need to transmit or store it, i.e. trading adaptability for compression rate (important with these small strings).
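To make the idea of a static, embedded model concrete, here is a minimal sketch (not my actual coder). It assumes the model is a plain per-byte Huffman table built once from training data and then shared by both sides. The names `build_huffman_codes`, `encode`, `decode` and `TRAINING` are made up for illustration, and bit strings stand in for real packed bits.

```python
import heapq
from collections import Counter


def build_huffman_codes(training: bytes) -> dict[int, str]:
    """Build a byte -> bit-string table from byte frequencies."""
    # Give every byte value a count of 1 so unseen bytes remain encodable.
    freq = Counter(range(256))
    freq.update(training)
    # Heap items: (weight, unique tie-breaker, {symbol: code so far}).
    heap = [(w, sym, {sym: ""}) for sym, w in freq.items()]
    heapq.heapify(heap)
    tie = 256
    while len(heap) > 1:
        w0, _, left = heapq.heappop(heap)
        w1, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w0 + w1, tie, merged))
        tie += 1
    return heap[0][2]


def encode(line: bytes, codes: dict[int, str]) -> str:
    return "".join(codes[b] for b in line)


def decode(bits: str, codes: dict[int, str]) -> bytes:
    inverse = {code: sym for sym, code in codes.items()}
    out, cur = bytearray(), ""
    for bit in bits:
        cur += bit
        if cur in inverse:  # codes are prefix-free, so the first hit is the symbol
            out.append(inverse[cur])
            cur = ""
    return bytes(out)


# Stand-in for the megabytes of training data: a few lines from above.
TRAINING = b"\n".join([
    b"ZYXH1|104932,104932,927.6000,200,1,927.4000,1600,1",
    b"ZYXH1|104932,104932,3390,1600,1,3389,2700,1",
    b"ZYXH1|104932,104932,5360,5400,1,5350,16400,1",
    b"ZYXH1|104932,104932,526,192400,1,525,88400,1",
    b"ZYXDFF|803;ZYXDFF:Y|104932;ZYXVW|100800.6381;ZYXVL|1121700,104932;TAXTIC|L719;TAXNO|L1121700",
])

# Built once and embedded in both compressor and decompressor.
CODES = build_huffman_codes(TRAINING)

line = b"ZYXH1|104932,104932,803,6300,1,802,63900,1"
bits = encode(line, CODES)
print(len(line) * 8, "bits uncompressed,", len(bits), "bits encoded")
```

Because the table never travels with the data, even a 40-byte line pays no per-string overhead for the model.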

I have now tried various algorithms, and the results of compressing those megabytes worth of strings (using a simple program that transmits the compressed data via TCP) are as follows:

| Algorithm | Rate | Bytes/sec |
|---|---|---|
| deflate optim | 46.7% | 3724917 |
| deflate fast | 44.2% | 3831672 |
| huffman | 41.9% | 11169462 |
| lz4 1576 optim | 25.6% | 4334541 |
| lz4 18576 fast | 23.5% | 42504338 |
| lz4 1576 fast | 23.4% | 70996590 |
| uncompressed | 0% | 105210881 |

So deflate tops the list for compression rate (even for these small strings, where deflate does not rely on a shared static model), but it is roughly 3 times slower than my home-grown Huffman coder. Given all the repeating character patterns in the input data, it seems one could do much better than a Huffman coder that only looks at single bytes. LZ4 can be really fast with small buffer sizes (I only listed sizes of 1576 and 18576; Bytes/sec drops significantly for bigger sizes), but its compression rate is not that good.
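For reference, this is roughly what per-line deflate looks like, with and without shared state. It is a sketch using Python's `zlib` rather than the program behind the numbers above. The preset dictionary (`zdict`) variant is not something I benchmarked; it is shown only as the deflate counterpart of a shared static model. `ZDICT` and the helper names are hypothetical.

```python
import zlib

# Hypothetical shared dictionary: typical lines that both sides already hold.
ZDICT = b";".join([
    b"ZYXDFF|3040;ZYXDFF:Y|104932;ZYXVW|1003043.2745;ZYXVL|172700,104932;TAXTIC|L213;TAXNO|L172700",
    b"ZYXH1|104932,104932,803,3900,1,802,63800,1",
])


def compress_plain(line: bytes) -> bytes:
    # Every line is an independent deflate stream with no shared state.
    return zlib.compress(line, 9)


def compress_with_dict(line: bytes) -> bytes:
    # Same, but matches may refer back into the preset dictionary.
    comp = zlib.compressobj(level=9, zdict=ZDICT)
    return comp.compress(line) + comp.flush()


def decompress_with_dict(blob: bytes) -> bytes:
    decomp = zlib.decompressobj(zdict=ZDICT)
    return decomp.decompress(blob) + decomp.flush()


line = b"ZYXH1|104932,104932,803,6300,1,802,63900,1"
print(len(line), len(compress_plain(line)), len(compress_with_dict(line)))
```

Note that the zlib framing alone (header and checksum) costs several bytes per line, which matters at these string sizes.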

Are there simple ways to change / extend a per-byte Huffman coder to make use of the fact that there are many repeating (multi-byte) character patterns?

But it shouldn't grow the statistical model to thousands of entries. Currently the model has at most 256 symbols, and merely changing from 1-grams to 2-grams would increase it to up to 65536 (256 × 256). The size matters because I do want to change, and hence store/transmit, the statistical model: not for every string, but only every 1 megabyte or so. So it would be somewhere between a static statistical model and a fully dynamic one.
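To put the model-size concern in numbers, the small sketch below counts how many distinct 1-grams and 2-grams actually occur in a handful of the sample lines, against the theoretical maxima of 256 and 256 × 256 = 65536. `SAMPLE_LINES` and `ngram_counts` are illustrative names, and the real figures would of course come from the full data set.

```python
from collections import Counter

SAMPLE_LINES = [
    b"ZYXH1|104932,104932,927.6000,200,1,927.4000,1600,1",
    b"HXDFF|0001739.42;HXDFF:Y|0U010:49:32",
    b"HXYFBR|0002.04;HXCFBR|0001.52;HXCFER|00015.0;HXMKCF|0001676300748887.4",
    b"ZYXDFF|803;ZYXDFF:Y|104932;ZYXVW|100800.6381;ZYXVL|1121700,104932;TAXTIC|L719;TAXNO|L1121700",
]


def ngram_counts(lines, n):
    """Count every n-byte window, never crossing a line boundary."""
    counts = Counter()
    for line in lines:
        counts.update(line[i:i + n] for i in range(len(line) - n + 1))
    return counts


unigrams = ngram_counts(SAMPLE_LINES, 1)
bigrams = ngram_counts(SAMPLE_LINES, 2)

print("distinct 1-grams:", len(unigrams), "of at most", 256)
print("distinct 2-grams:", len(bigrams), "of at most", 256 ** 2)
print("most common 2-grams:", bigrams.most_common(5))
```

The upper bound is what a fully general 2-gram table has to allow for; the counts show how much of it this kind of data actually uses.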