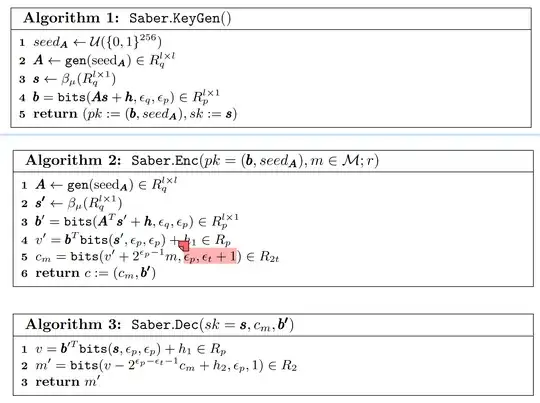

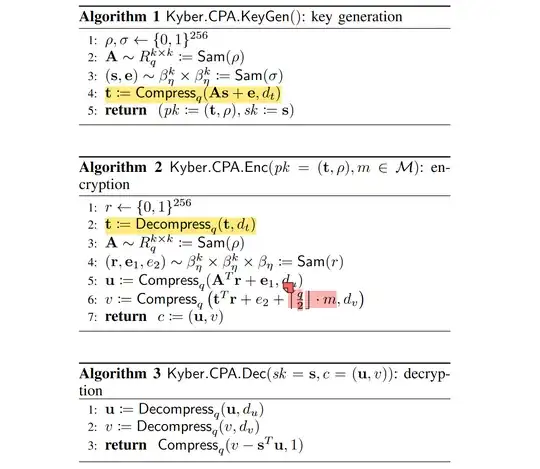

I'd like to discuss message bit layout in the Saber and $KYBER$'s IND-CPA encryptions.(Details of these two schemes follows behind these question paragraphs). From my understanding, both Saber and $KYBER$ somehow place the secret message to the highest bit of elements of vector:

- in Saber, the message bits $m$ are set at reconciliation info $c_m$'s highest bit, i.e. $c_m$'s $\epsilon_p$-th bit, hidden by $v^\prime$)

- and in $KYBER$ lays the secret bit into vector $v$, hidden with $t^Tr$.

In this process, both of them used rounding-like operations to get the right bit segments, Saber uses rounding of LWR, and $KYBER$ uses Compression-Decompression.

To me, Saber looks smarter because it gets rid of random error generation in the rounding process, while $KYBER$ seems to have "redundant" error addition besides its compressing and decompressing. But the results of NIST suggests that I might misunderstand this. So whether we can see $KYBER$ redundant? if so, to what extent does this redundance mean to security, or does it give $KYBER$ an advantage over Saber?

As a student quite new to post-quantum cryptography, I really appreciate any intuitions and corrections, thanks so much!

Appendix

Here are the two IND-CPA encryption scheme: