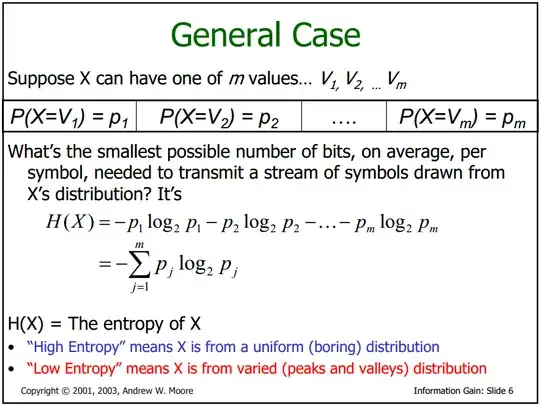

According to this slide, the smallest possible number of bits per symbol is given by the Shannon entropy formula:

$$H(X) = -\sum_i p_i \log_2 p_i$$

I've read this post, and I still don't quite understand how this formula is derived from the perspective of encoding with bits.
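
To make the question concrete, here is a minimal sketch of what I mean. It is a toy example of my own, not taken from the slide: the four-symbol distribution, the hand-picked prefix code, and the helper names `shannon_entropy` and `average_code_length` are all assumptions for illustration.

```python
import math

def shannon_entropy(probs):
    """Entropy in bits per symbol: -sum(p * log2(p)), skipping p == 0."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def average_code_length(probs, lengths):
    """Expected number of bits per symbol for the given codeword lengths."""
    return sum(p * n for p, n in zip(probs, lengths))

# Hypothetical source with four symbols (probabilities are made up).
probs = [0.5, 0.25, 0.125, 0.125]

# Hand-picked prefix code: 0, 10, 110, 111 (codeword lengths in bits).
lengths = [1, 2, 3, 3]

entropy = shannon_entropy(probs)
avg_len = average_code_length(probs, lengths)
print(entropy, avg_len)
```

In this example each codeword length equals $\log_2(1/p_i)$, so the average code length matches the entropy. What I don't see is why, in general, no encoding can do better than this on average.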

I'd like to get some hints like the ones in this post, and please don't tell me that it's just because this is the only formula that satisfies the properties of an entropy function.

Thx in advance~