The cumulative distribution function of the standard normal distribution $\Phi(z)=\displaystyle\frac{1}{\sqrt{2\pi}}\int_{-\infty}^z e^{-t^2/2}dt$ cannot be expressed in terms of elementary functions, thus computing values is subject of numerical approximations (or iterating converging series up to a reasonable limit). Ditto for the inverse of it, quantile function or probit, $\text{probit}(\Phi(z))=\Phi^{-1}(\Phi(z))=z$. While there exist approximations for the later it may also be set up as root finding procedure for $\Phi^{-1}(\Phi(z))-z=0$.

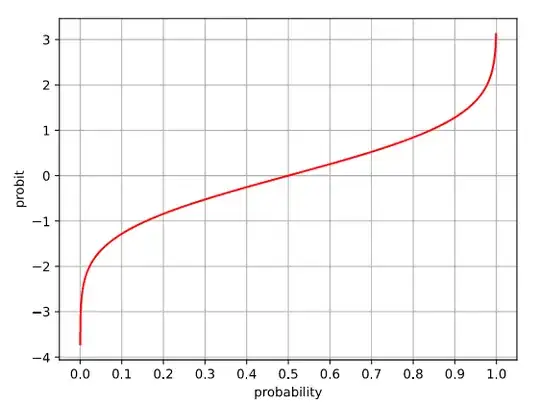

A look at the plot of the probit function...

... shows from $\Phi(z)=0.1$ to $0.9$ roughly a straight line. In this section Newton's method works perfectly. In contrast for the lower and upper ends an alternate root finder is more apt.

"Algorithm 39", Areas Under The Normal Curve, uses as approximation for the tails $\Phi(z)\approx\displaystyle\frac{1}{\sqrt{2\pi}}\exp\left (-\frac{z^2}{2}\right )\cdot f(z)$ where $f$ is a rational function.

Question: Which root finder would be the best for the outer sections of this approximation?

Short clarification: The question is not how to put on a procedure with erfc to compute $\Phi^{-1}$, I'm looking for an adequate root finder using a. m. "Algorithm 39".

Long clarification: "Algorithm 39" was published in 1969 when computing power was valuable enough to justify some effort in devising fast approaches, which comprises also the preparation of an iteration. So my query is, at that time, which algorithm based on "Algorithm 39" would work best for computing the quantile function's tails (that is $\Phi(z)$ above 0.9 and below 0.1)?

"Algorithm 39" computes for $z\ge 1.28$

$$\Phi(z)\approx\displaystyle\frac{p\cdot e^{\displaystyle -z^2/2}}{z+q+r}\ \ \ \text{with}\ r=\cfrac{b_1}{z+b_2+\cfrac{b_3}{z+b_4+\cfrac{b_5}{z+b_6+\cfrac{b_7}{z+b_8+\cfrac{b_9}{z+b_{10}}}}}}$$

and

$p=0.398942280385,\ q=-3.8052\cdot 10^{-8},\ b_1=1.00000615302,\ b_2=0.000398064794,\\\ b_3=1.98615381364,\ b_4=-0.151679116635,\ b_5=5.29330324926,\ b_6=4.8385912808,\\\ b_7=-15.1508972451,\ b_8=0.742380924027,\ b_9=30.789933034,\ b_{10}=3.99019417011$

What I found so far: not too much. While root-finder of higher-order (higher than Newton’s method) may work (iff the initial iteration value $x_0$ ensures $f(x_i) = 0$ to be an attractor), it might be overdone somehow when 10..12 digits accuracy is sufficient.

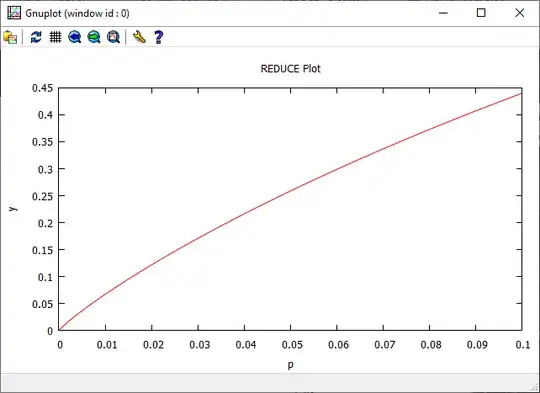

Playing a bit with the quantile or probit function $\Phi^{-1}(p)$ with $p\lt 0.1$, which when approaching $0$ is "diving down" quite fast (see diagram above), I found some "linearisation" (not in the mathematical sense) by conversion $y=\exp(-(\Phi^{-1}(p))^2/2)$, see (and compare with the plot above):

This leads to ...

This leads to ...

Additional questions: Could this conversion be helpful for computing $\Phi^{-1}$ by root-finding? How might an adequate iterative function look like? Are there examples which use a similar conversion to ease root-finding?