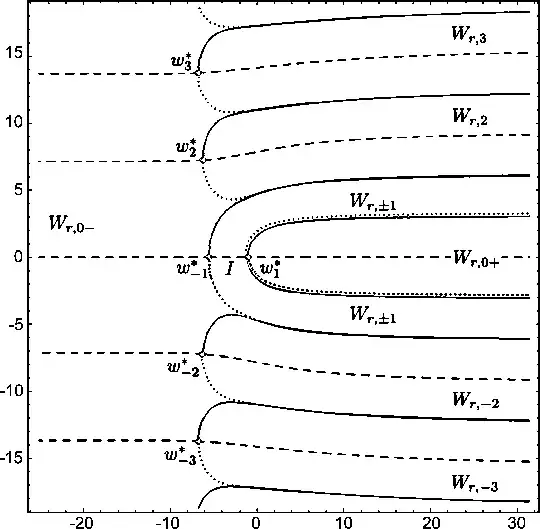

Imagine a continuous one-dimensional line that is duplicated exactly once. Duplication is initiated at random spacetime points. Once duplication is initiated at a point, two duplication waves move away from it in opposite directions at constant speed $v$ (similar to a bi-directional zipper), as the animation suggests.

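$I(x,t)$ is defined as the probability that position $x$, if not already duplicated at time $t$, initiates a double wave at time $t$ (strictly, a probability per unit length and unit time, given how it enters the spacetime integral further down).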

Consider now a large number of lines and define $s(x,t)$ as the fraction of lines on which position $x$ has not yet been duplicated at time $t$ ($I(x,t)$ is the same across all lines). From this definition, we may derive the probability distribution of the duplication timing.

First, note that a position $x$ is not yet duplicated at time $t$ iff its duplication time $t_D(x)$ is greater than $t$, so that $s(x,t)=\text{Prob}(t_D(x)> t)$. Therefore the probability density $P(x,t)$ of the duplication time at position $x$ is given by

$$ P(x,t)=-\partial_t s(x,t) $$

Question: In my modelling approach, I define $f(x)$, the rate of initiation at position $x$, which is constant in time and should be related to $I(x,t)$ in some form. I am interested in the expected time of duplication at a given position $x$, denoted $\langle t_D(x)\rangle$, and in inverting the problem: is it possible to write the initiation rate $f(x)$ in terms of $\langle t_D(x)\rangle$? $\langle t_D(x)\rangle$ has also been discussed here, from a different perspective.

My attempt: For infinite and continuous lines, this problem has been studied in the context of nucleation and replication. In particular, it was shown that, using light-cone coordinates,

$$ s(x,t)=e^{-\int_{V_X[v]}dY\,I(Y)} $$

where $V_X[v]$ is the past light cone of the spacetime point $X=(x,t)$. This equation has the following elegant inverse, using the d'Alembert operator $\square=\frac{1}{v^2}\partial_t^2-\partial_x^2$,

$$ I(x,t)=-\frac{v}{2}\square \log s(x,t) $$

On the other hand, given the probabilistic description provided before, we also have

$$ \langle t_D(x)\rangle=\int_0^\infty tP(x,t)\,dt=-\int_0^\infty t\,\partial_t s(x,t)\,dt $$

However, from here it is not clear how to rewrite this so that the rate $f(x)$ appears as a function of $\langle t_D(x)\rangle$. In particular, how are $I(x,t)$ and $f(x)$ related? The general steps could potentially be:

- Write $s(x,t)$ in terms of $\langle t_D(x)\rangle$.

- Use the d'Alembert formula above to write $I(x,t)$ in terms of $\langle t_D(x)\rangle$.

- Write $f(x)$ in terms of $I(x,t)$.

- What happens in the discrete-space case, where duplication can only start at specific points $\{x_i\}$ (say the integers on the real line, for simplicity)? For instance, what happens when $I(x,t)\equiv\sum_{y\in\mathbb{Z}}\delta(x-y)I(x,t)$?
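As a sanity check on the first three steps, here is a sketch of the simplest continuous case. It rests on assumptions that are not established above: that the time-independent rate can be identified directly with the initiation probability, $I(x,t)=f(x)$; that every line starts unduplicated, $s(x,0)=1$; and that $t\,s(x,t)\to 0$ as $t\to\infty$. The constant $f_0$ is a symbol introduced only for this example. Integrating the expression for $\langle t_D(x)\rangle$ by parts gives

$$ \langle t_D(x)\rangle=-\int_0^\infty t\,\partial_t s(x,t)\,dt=\Big[-t\,s(x,t)\Big]_0^\infty+\int_0^\infty s(x,t)\,dt=\int_0^\infty s(x,t)\,dt $$

Writing the past light cone explicitly,

$$ \int_{V_X[v]}dY\,I(Y)=\int_0^t dt'\int_{x-v(t-t')}^{x+v(t-t')}dx'\,f(x') $$

so for a uniform rate $f(x)=f_0$ the exponent is $f_0\int_0^t 2v(t-t')\,dt'=f_0vt^2$, and

$$ s(x,t)=e^{-f_0vt^2},\qquad \langle t_D\rangle=\int_0^\infty e^{-f_0vt^2}\,dt=\frac{1}{2}\sqrt{\frac{\pi}{f_0v}},\qquad f_0=\frac{\pi}{4v\langle t_D\rangle^2} $$

This is consistent with the d'Alembert inverse, since $-\frac{v}{2}\square\left(-f_0vt^2\right)=f_0$. For a non-uniform $f(x)$ the same integral makes $\langle t_D(x)\rangle$ depend on $f$ over the whole neighbourhood of $x$ rather than on $f(x)$ alone, which may be where a pointwise inversion becomes difficult.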

Any ideas?

Other general ideas:

- The inverse Laplace transform could prove useful for inverting the previous equation, but I am not sure how. Similarly, the Lambert W function might be relevant.

- Initiation is a Poisson process in which only the first event matters (there is no re-duplication). Can the conditioning therefore be relaxed, and is there a direct relation between the initiation rate $f(x)$ and the probability $I(x,t)$ over time? In particular, due to the constant speed, waves do not overlap, and the expected time should be the same nonetheless.

- I am generally hoping for an elegant solution, but if that is not possible, where does the difficulty lie?
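To make the discrete-space variant and the "only the first event matters" idea concrete, here is a minimal numerical sketch. It assumes point origins $x_i$ that fire as independent Poisson processes with constant rates $f_i$, that is $I(x,t)=\sum_i f_i\,\delta(x-x_i)$, and that every line starts unduplicated at $t=0$. Under those assumptions an origin lies in the past light cone of $(x,t)$ for a duration $\max(0,\,t-|x-x_i|/v)$, so $s(x,t)=\exp\!\big(-\sum_i f_i\max(0,\,t-|x-x_i|/v)\big)$, and $\langle t_D(x)\rangle$ is the time integral of $s$. The function names `survival` and `mean_duplication_time` are hypothetical, chosen only for this illustration.

```python
import numpy as np


def survival(x, t, origins, rates, v):
    """Probability that position x is still unduplicated at time(s) t.

    Assumption: point origins firing as independent Poisson processes with
    constant rates, so s = exp(-sum_i f_i * max(0, t - |x - x_i| / v)).
    """
    t = np.atleast_1d(np.asarray(t, dtype=float))
    origins = np.asarray(origins, dtype=float)
    rates = np.asarray(rates, dtype=float)
    # Travel time of a wave from each origin to x.
    delay = np.abs(x - origins) / v
    # Time during which each origin could have fired and already reached x.
    exposure = np.clip(t[:, None] - delay[None, :], 0.0, None)
    return np.exp(-exposure @ rates)


def mean_duplication_time(x, origins, rates, v, t_max, n_steps=200_000):
    """Approximate <t_D(x)> as the integral of s(x, t) over [0, t_max].

    Uses the trapezoidal rule; t_max must be large enough that s is
    negligible beyond it.
    """
    t = np.linspace(0.0, t_max, n_steps + 1)
    s = survival(x, t, origins, rates, v)
    dt = t[1] - t[0]
    return dt * (s.sum() - 0.5 * (s[0] + s[-1]))


# Single origin at 0 with rate 2, wave speed 1, observed at distance 3.
single = mean_duplication_time(3.0, [0.0], [2.0], v=1.0, t_max=60.0)

# Two equal origins at 0 and 10: positions 4 and 6 are mirror images.
left = mean_duplication_time(4.0, [0.0, 10.0], [1.0, 1.0], v=1.0, t_max=80.0)
right = mean_duplication_time(6.0, [0.0, 10.0], [1.0, 1.0], v=1.0, t_max=80.0)
```

For a single origin with rate $f$ at distance $d$, the integral can be done by hand and gives $\langle t_D\rangle=d/v+1/f$ (the travel time plus the mean waiting time), so `single` should come out close to 3.5. With several origins the mean at $x$ mixes all the $f_i$, so recovering the rates from $\langle t_D(x)\rangle$ requires its values at more than one position.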