I am reading this paper, where they use matrix factorization in place of the attention mechanism in their Hamburger model. In Section 2.2.2 they say:

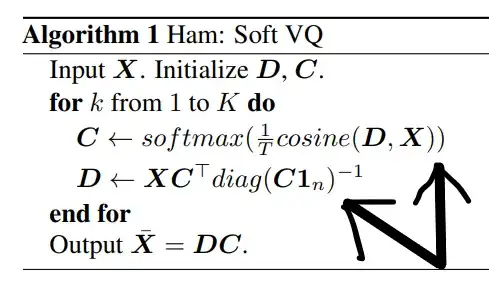

Vector Quantization (VQ) (Gray & Neuhoff, 1998), a classic data compression algorithm, can be formulated as an optimization problem in term of matrix decomposition: $$ \min _{\boldsymbol{D}, \boldsymbol{C}}\|\boldsymbol{X}-\boldsymbol{D} \boldsymbol{C}\|_F \quad \text { s.t. } \mathbf{c}_i \in\left\{\mathbf{e}_1, \mathbf{e}_2, \cdots, \mathbf{e}_r\right\}\tag1 $$ where $e_i$ is the canonical basis vector, $\mathbf{e}_i=[0, \cdots, 1, \cdots, 0]^{\top}$. The solution to minimize the objective in Eq. (1) is K-means (Gray & Neuhoff, 1998). However, to ensure that VQ is differentiable, we replace the hard arg min and Euclidean distance with softmax and cosine similarity, leading to Alg. 1, where $\operatorname{cosine}(\boldsymbol{D}, \boldsymbol{X})$ is a similarity matrix whose entries satisfy $\operatorname{cosine}(\boldsymbol{D}, \boldsymbol{X})_{i j}=\frac{\mathbf{d}_i^{\top} \mathbf{x}_j}{\|\mathbf{d}\|\|\mathbf{x}\|}$, and softmax is applied column-wise and $T$ is the temperature. Further, we can obtain a hard assignment by a one-hot vector when $T \rightarrow 0$.

I didn't understand the last part: "...and softmax is applied column-wise and $T$ is the temperature. Further, we can obtain a hard assignment by a one-hot vector when $T \rightarrow 0$." What do they mean by $T$ (temperature) here?
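To make this part of the question concrete, here is a small NumPy sketch of how I currently read "softmax with temperature". The helper name `softmax_columns` and the similarity values are my own illustrative assumptions, not taken from the paper; I am assuming the similarities are simply divided by $T$ before the column-wise softmax.

```python
import numpy as np

def softmax_columns(S, T):
    """Column-wise softmax of S / T (my reading of 'temperature')."""
    Z = S / T
    Z = Z - Z.max(axis=0, keepdims=True)  # subtract column max for numerical stability
    E = np.exp(Z)
    return E / E.sum(axis=0, keepdims=True)

# Hypothetical similarities of r = 3 codewords (rows) to a single data point (column)
S = np.array([[0.9], [0.5], [-0.2]])

soft = softmax_columns(S, T=1.0)   # weights spread over all three codewords
hard = softmax_columns(S, T=0.01)  # weights concentrate on the most similar codeword
```

If this reading is right, shrinking $T$ pushes each column towards a one-hot vector on the largest similarity, which would be the "hard assignment" they mention.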

In fact, I couldn't follow the justification for replacing $\arg \min$ with softmax here. And what is their update rule $D\leftarrow XC^{\top}\operatorname{diag}(C\mathbf{1}_n)^{-1}$ doing?
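For reference, this is a minimal NumPy sketch of how I read one iteration of their soft VQ (assignment step followed by the dictionary update). It is my own interpretation and not the authors' code: the function names `cosine` and `soft_vq_step`, the `eps` guards, and the shapes ($X$ is $d \times n$, $D$ is $d \times r$, $C$ is $r \times n$) are assumptions on my part.

```python
import numpy as np

def cosine(D, X, eps=1e-8):
    """r x n matrix of cosine similarities between columns of D and columns of X."""
    Dn = D / (np.linalg.norm(D, axis=0, keepdims=True) + eps)
    Xn = X / (np.linalg.norm(X, axis=0, keepdims=True) + eps)
    return Dn.T @ Xn

def soft_vq_step(X, D, T, eps=1e-12):
    """One assumed iteration: C = softmax(cosine(D, X) / T), then update D."""
    S = cosine(D, X) / T
    S = S - S.max(axis=0, keepdims=True)      # stabilise the exponentials
    C = np.exp(S)
    C = C / C.sum(axis=0, keepdims=True)      # column-wise softmax: each column sums to 1
    row_sums = np.maximum(C.sum(axis=1), eps) # C @ 1_n, guarded against division by zero
    D_new = (X @ C.T) / row_sums              # same as X C^T diag(C 1_n)^{-1}
    return D_new, C

# Hypothetical data: n = 50 points in d = 4 dimensions, r = 3 codewords
rng = np.random.default_rng(0)
X = rng.normal(size=(4, 50))
D = rng.normal(size=(4, 3))
D_new, C = soft_vq_step(X, D, T=0.1)
```

Written this way, column $i$ of the new $D$ looks like a weighted average of the data points, $\mathbf{d}_i = \sum_j C_{ij}\mathbf{x}_j / \sum_j C_{ij}$, which for one-hot columns of $C$ would reduce to the K-means centroid update. I would like to know whether that interpretation is correct.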

Also, their VQ seems similar to traditional non-negative matrix factorization with regularization on $C$. Or am I confusing the two?

Thanks in advance.

Update

I removed some questions that might not fit M.SE rules and reduced the thread to a single math-based question. I hope it gets better reach now.