Suppose Alice wants to send a message to Bob. They agree on an $n$-letter alphabet $\Omega = \{a_1, \cdots, a_n\}$ and on a shared secret $\omega=\omega_1 \cdots \omega_m$, with $\omega_i \in \Omega$ for all $i$, that must be sent before the message. Unfortunately, Bob forgets the encoding of $\Omega$ and can only decode messages over a permuted alphabet $\sigma(\Omega) = \{\sigma(a_1), \cdots, \sigma(a_n)\}$, where $\sigma$ is an unknown permutation of $\Omega$. For example, if $\Omega = \{0, 1\}$ is the set of bits, 0 and 1 may or may not be flipped.

To prove her identity, Alice sends their shared secret $\omega$ to Bob, so Bob decodes the message $m := \sigma(\omega) = \sigma(\omega_1) \cdots \sigma(\omega_m)$, with the permutation applied letter by letter. Bob accepts the message iff there exists a permutation $\tau$ of $\Omega$ such that $\tau(m) = \omega$.
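In the noiseless case, Bob's acceptance test does not require trying every permutation. It is enough to check that the letter-by-letter correspondence $m_i \mapsto \omega_i$ is a well-defined injective map, since any such partial injection extends to a permutation $\tau$ of $\Omega$. The sketch below assumes words are given as Python strings whose characters are letters of $\Omega$. The function name `accepts_noiseless` is hypothetical.

```python
def accepts_noiseless(m, omega):
    """Return True iff some permutation tau of the alphabet satisfies tau(m) == omega.

    Hypothetical helper: words are strings whose characters are letters of Omega.
    """
    if len(m) != len(omega):
        return False
    forward = {}   # partial map tau: letter of m -> letter of omega
    backward = {}  # its inverse, used to keep tau injective
    for x, y in zip(m, omega):
        # setdefault returns the already-stored image if x (or y) was seen before
        if forward.setdefault(x, y) != y or backward.setdefault(y, x) != x:
            return False
    # A consistent partial injection on a finite alphabet extends to a full permutation
    return True
```

For instance, `accepts_noiseless("0110", "1001")` is `True` (the bits are flipped), while `accepts_noiseless("00", "10")` is `False`.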

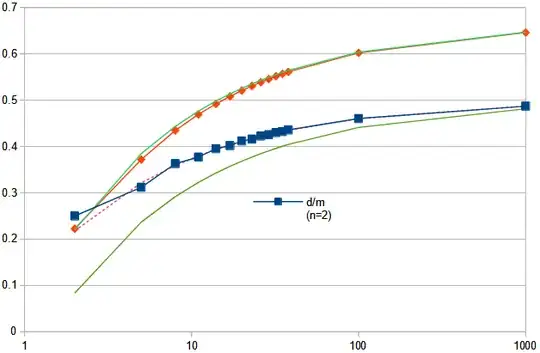

Now suppose the channel over which Bob receives messages is noisy. Bob wants to know how close a message is to the secret, so he computes $$ d_H^S (m,\omega) := \min_{\tau} d_H (\tau(m), \omega), \qquad d_H (\tau(m), \omega) = \sum_i d_H(\tau(m_i), \omega_i), $$ where $\tau$ ranges over the permutations of $\Omega$ and, for single letters, $d_H(x, y)$ is $0$ if $x = y$ and $1$ otherwise. He accepts the message iff $d_H^S (m,\omega)$ is smaller than some threshold $C$. For example, if $\Omega=\{0,1\}$, then $d_H^S(00,11) = 0$ (the bits are flipped), while $d_H^S(00,10) = 1$ (the distance is one whether or not the bits are flipped).
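The definition of $d_H^S$ can be evaluated directly by brute force over all $n!$ permutations $\tau$. This is practical only for small alphabets. The same minimization can also be cast as a linear assignment problem, solvable in polynomial time, e.g. with the Hungarian algorithm. The sketch below assumes words are strings and the alphabet is passed explicitly. The function names are hypothetical.

```python
from itertools import permutations


def hamming(u, v):
    """Number of positions where the equal-length words u and v differ."""
    return sum(x != y for x, y in zip(u, v))


def hamming_up_to_relabeling(m, omega, alphabet):
    """d_H^S(m, omega): minimum Hamming distance over all permutations tau of the alphabet.

    Hypothetical helper; brute force, so it costs O(n! * len(m)) for an n-letter alphabet.
    """
    best = len(omega)
    for image in permutations(alphabet):
        tau = dict(zip(alphabet, image))   # one permutation tau of Omega
        relabeled = [tau[x] for x in m]    # tau(m), applied letter by letter
        best = min(best, hamming(relabeled, omega))
    return best
```

With `alphabet = "01"`, this reproduces the two examples above: `hamming_up_to_relabeling("00", "11", "01")` gives `0` and `hamming_up_to_relabeling("00", "10", "01")` gives `1`.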

Now suppose Charlie wants to impersonate Alice by sending random messages to Bob. To choose the threshold $C$, a natural question arises:

Question: What is the average value of $d_H^S (a,b)$ between two random words $a$ and $b$ of length $m$ over the $n$-letter alphabet $\Omega$?
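For very small parameters the average can be computed exactly by enumerating all pairs of words. This assumes $a$ and $b$ are independent and uniformly distributed over all words of a given length over $\Omega$. The sketch uses an equivalent form of $d_H^S$. If $M_{xy}$ counts the positions $i$ with $a_i = x$ and $b_i = y$, then $d_H^S(a,b) = \text{length} - \max_\tau \sum_x M_{x\,\tau(x)}$, because $\tau$ agrees with $b$ at position $i$ exactly when $\tau(a_i) = b_i$. The enumeration grows as $n^{2\cdot\text{length}}$, so it can only sanity-check a formula, not answer the question asymptotically. Function names are hypothetical.

```python
from fractions import Fraction
from itertools import permutations, product


def d_hs(a, b, alphabet):
    """d_H^S(a, b) via the count matrix: length minus the best number of agreements."""
    # counts[x][y] = number of positions i with a_i == x and b_i == y
    counts = {x: {y: 0 for y in alphabet} for x in alphabet}
    for x, y in zip(a, b):
        counts[x][y] += 1
    # tau(a_i) == b_i exactly when position i contributes to counts[a_i][tau(a_i)]
    best_matches = max(
        sum(counts[x][tau_x] for x, tau_x in zip(alphabet, image))
        for image in permutations(alphabet)
    )
    return len(a) - best_matches


def average_d_hs(alphabet, length):
    """Exact mean of d_H^S over independent, uniform words a, b of the given length."""
    words = list(product(alphabet, repeat=length))
    total = sum(d_hs(a, b, alphabet) for a in words for b in words)
    return Fraction(total, len(words) ** 2)
```

For bits, $d_H^S(a,b) = \min(k, \text{length} - k)$ where $k$ is the ordinary Hamming distance. Accordingly, `average_d_hs("01", 2)` returns `Fraction(1, 2)` and `average_d_hs("01", 3)` returns `Fraction(3, 4)`.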