The claim seems highly nontrivial; this integral definitely deserves more attention.

Equivalently, we must prove

$$\int_0^\pi {{{\left[ {\frac{{\sin^a {{(ax)}}\sin^{1-a} {{((1 - a)x)}}}}{{\sin x}}} \right]}^b}} dx = \pi \frac{{\Gamma (1 - b)}}{{\Gamma (1 - b + ab)\Gamma (1 - ab)}}\qquad 0<a,b<1$$
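A quick numerical sanity check of this identity (not part of the proof) can be sketched in plain Python. The helper names `lhs` and `rhs`, the sample $(a,b)$ values and the step size are illustrative assumptions. The integral is computed with a hand-rolled tanh-sinh rule, chosen because the integrand has an integrable singularity $\sim(\pi-x)^{-b}$ at $x=\pi$.

```python
import math


def lhs(a, b, h=1.0 / 64, tmax=4.0):
    """Left-hand side: tanh-sinh quadrature over (0, pi)."""
    n = int(round(tmax / h))
    total = 0.0
    for k in range(-n, n + 1):
        t = k * h
        u = 0.5 * math.pi * math.sinh(t)
        # x = (pi/2)(1 + tanh u); x and d = pi - x are formed directly so
        # that neither endpoint suffers from cancellation
        x = math.pi / (1.0 + math.exp(-2.0 * u))
        d = math.pi / (1.0 + math.exp(2.0 * u))
        sin_x = math.sin(x) if x < 0.5 * math.pi else math.sin(d)
        base = math.sin(a * x) ** a * math.sin((1 - a) * x) ** (1 - a) / sin_x
        # dx/dt for the tanh-sinh substitution
        w = 0.25 * math.pi ** 2 * math.cosh(t) / math.cosh(u) ** 2
        total += w * base ** b
    return h * total


def rhs(a, b):
    """Right-hand side: pi * Gamma(1-b) / (Gamma(1-b+ab) * Gamma(1-ab))."""
    return math.pi * math.gamma(1 - b) / (math.gamma(1 - b + a * b) * math.gamma(1 - a * b))


for a, b in [(0.3, 0.5), (0.5, 0.25), (0.8, 0.6)]:
    print(f"a={a}, b={b}: quadrature {lhs(a, b):.12f}, closed form {rhs(a, b):.12f}")
```

The two columns should agree to many digits; values of $b$ very close to $1$ would need a wider `tmax`, since the truncated tail near $x=\pi$ then decays slowly.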

Let $\alpha>0$, $x>0$, $-\pi/2<\varphi<\pi/2$. Consider

$$I=\Re \int_0^\infty \exp \left( {itx - {t^\alpha } {e^{i\varphi }}} \right)dt $$

Writing $t$ in polar coordinates $t = re^{i\theta}$, we have

$$\Im(itx - t^\alpha e^{i\varphi }) = rx\cos\theta - r^\alpha \sin(\alpha\theta+\varphi)$$

so (a portion of) $\{t\in \mathbb{C} \vert \Im(itx - t^\alpha e^{i\varphi})=0 \}$ can be parametrized by $$\tag{*}r(\theta) = {\left( {\frac{{ \sin (\alpha \theta + \varphi )}}{{x\cos \theta }}} \right)^{1/(1 - \alpha )}}$$

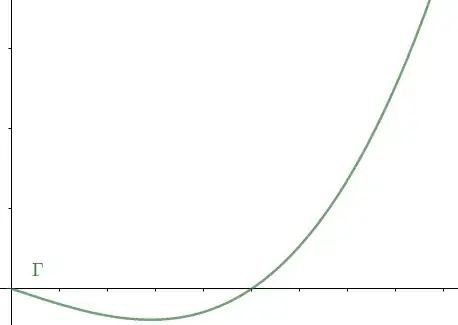

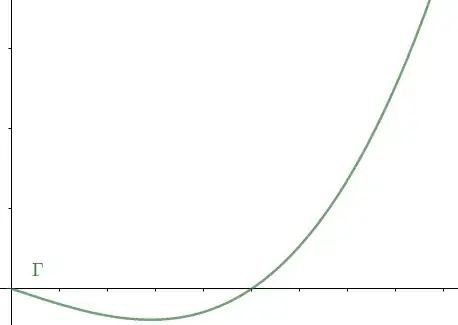
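As a numerical spot check of $(*)$, one can verify that the points $t=r(\theta)e^{i\theta}$ really lie on the curve where $\Im(itx - t^\alpha e^{i\varphi})$ vanishes. In the sketch below, `r_of_theta` and `phase` are hypothetical helper names and $(\alpha,\varphi,x)=(0.6,0.4,1.3)$ are arbitrary sample values; Python's complex `**` uses the principal branch, which matches $t^\alpha=r^\alpha e^{i\alpha\theta}$ for $|\theta|<\pi$.

```python
import cmath
import math


def r_of_theta(theta, alpha, phi, x):
    """Parametrization (*): (sin(alpha*theta + phi) / (x cos theta))^(1/(1-alpha))."""
    ratio = math.sin(alpha * theta + phi) / (x * math.cos(theta))
    return ratio ** (1.0 / (1.0 - alpha))


def phase(t, alpha, phi, x):
    """i t x - t^alpha e^{i phi}, with the principal branch of t^alpha."""
    return 1j * t * x - t ** alpha * cmath.exp(1j * phi)


# sample values chosen for illustration, with 0 < alpha < 1
alpha, phi, x = 0.6, 0.4, 1.3
lo = -phi / alpha  # lower end of the theta range
for k in range(1, 10):
    theta = lo + (math.pi / 2 - lo) * k / 10
    t = r_of_theta(theta, alpha, phi, x) * cmath.exp(1j * theta)
    print(f"theta={theta:+.3f}  |t|={abs(t):9.4f}  Im={phase(t, alpha, phi, x).imag:+.1e}")
```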

We shall choose $\alpha, \varphi$ so that the expression inside the parentheses is positive for $-\varphi/\alpha < \theta < \pi/2$, and we concentrate on the case $0<\alpha<1$. Then $r(\theta)$ goes from $0$ to $\infty$ as $\theta$ increases from $-\varphi/\alpha$ to $\pi/2$. Let $$\Gamma = \{ re^{i\theta} \mid -\varphi/\alpha < \theta < \pi/2,\ r = r(\theta)\}.$$

The integrand decays fast enough at infinity to allow deforming the path of integration onto $\Gamma$, giving

$$I=\Re \int_\Gamma \exp \left( {itx - {t^\alpha }{e^{i\varphi }}} \right)dt = \int_\Gamma \exp( \Re({itx - {t^\alpha } {e^{i\varphi }}})) d(\Re t)$$

the second equality holds because the exponential is real on $\Gamma$. Let $$\mathcal{K}(\alpha,\varphi,\theta) = \frac{{{{( {\sin (\alpha \theta + \varphi )} )}^{\alpha /(1 - \alpha )}}\cos ((\alpha - 1)\theta + \varphi )}}{{{{(\cos \theta )}^{1/(1 - \alpha )}}}}$$

Using the parametrization $(*)$, one calculates (details omitted)

$$\begin{aligned}&\Re({itx - {t^\alpha } {e^{i\varphi }}}) = -rx \sin\theta - r^\alpha \cos(\alpha\theta+\varphi) = - {x^{\alpha /(\alpha - 1)}}\mathcal{K}(\alpha,\varphi,\theta) \\ &d(\Re t) = d(r\cos\theta) = \frac{\alpha }{{1 - \alpha }}{x^{ - 1/(1 - \alpha )}}\mathcal{K}(\alpha,\varphi,\theta) d\theta \end{aligned}$$
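Since the details are omitted, both formulas can at least be spot-checked numerically at one point of $\Gamma$. The Python sketch below compares the real part of the exponent with $-x^{\alpha/(\alpha-1)}\mathcal{K}$, and a central finite difference of $\Re t$ with the stated $d(\Re t)/d\theta$. The names `K` and `point_on_gamma`, the sample values and the step `h` are illustrative assumptions.

```python
import cmath
import math


def K(alpha, phi, theta):
    """The function K(alpha, phi, theta) defined above."""
    s = math.sin(alpha * theta + phi)
    return (s ** (alpha / (1 - alpha)) * math.cos((alpha - 1) * theta + phi)
            / math.cos(theta) ** (1 / (1 - alpha)))


def point_on_gamma(theta, alpha, phi, x):
    """t = r(theta) e^{i theta} with r(theta) from (*)."""
    ratio = math.sin(alpha * theta + phi) / (x * math.cos(theta))
    return ratio ** (1 / (1 - alpha)) * cmath.exp(1j * theta)


alpha, phi, x, theta = 0.6, 0.4, 1.3, 0.5  # sample values

t = point_on_gamma(theta, alpha, phi, x)
re_phase = (1j * t * x - t ** alpha * cmath.exp(1j * phi)).real
claimed_re = -x ** (alpha / (alpha - 1)) * K(alpha, phi, theta)

# d(Re t)/d(theta) by a central difference
h = 1e-6
deriv = (point_on_gamma(theta + h, alpha, phi, x).real
         - point_on_gamma(theta - h, alpha, phi, x).real) / (2 * h)
claimed_deriv = alpha / (1 - alpha) * x ** (-1 / (1 - alpha)) * K(alpha, phi, theta)

print(re_phase, claimed_re)
print(deriv, claimed_deriv)
```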

Therefore $$\tag{**}\Re \int_0^\infty {\exp \left[ {itx - {t^\alpha }{e^{i\varphi }}} \right]dt} = \frac{\alpha }{{1 - \alpha }}{x^{1/(\alpha - 1)}}\int_{ - \varphi /\alpha }^{\pi /2} {\exp \left[ { - {x^{\alpha /(\alpha - 1)}}\mathcal{K}(\alpha,\varphi,\theta)} \right]\mathcal{K}(\alpha,\varphi,\theta)d\theta } $$

The choice of the contour $\Gamma$ is adapted from Zolotaryov's *One-dimensional Stable Distributions*, pp. 74-77. Formula $(**)$ is the key to deriving the desired integral.

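Since $(**)$ holds for every $x>0$, we can replace $x$ by $x^c$ ($c>1$) and integrate both sides over $0<x<\infty$ (interchanging the order of integration is easily justified). Both sides acquire a factor $1/c$, which cancels, leaving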

$$\Gamma (\frac{1}{c})\Re \int_0^\infty {{{( - it)}^{ - 1/c}}\exp ( - {t^\alpha }{e^{i\varphi }})dt} = \Gamma \left( {\frac{{ - 1 + \alpha + c}}{{\alpha c}}} \right)\int_{ - \varphi /\alpha }^{\pi /2} {{{\mathcal{K}(\alpha,\varphi,\theta)}^{1 - \frac{ - 1 + \alpha + c}{\alpha c}}}d\theta } $$
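For reference, both inner $x$-integrals are standard Gamma integrals. A sketch, assuming the formula $\int_0^\infty e^{-zx^c}\,dx=\Gamma(1+\tfrac1c)\,z^{-1/c}$ (principal branch, $\Re z>0$) extends to $z=-it$, $t>0$, where the integral still converges conditionally for $c>1$:

$$\int_0^\infty e^{itx^c}\,dx = \frac1c\,\Gamma\Big(\frac1c\Big)(-it)^{-1/c},\qquad \frac{\alpha}{1-\alpha}\int_0^\infty x^{\frac{c}{\alpha-1}}\exp\Big[-x^{\frac{c\alpha}{\alpha-1}}\mathcal K\Big]\mathcal K\,dx = \frac1c\,\Gamma\Big(\frac{-1+\alpha+c}{\alpha c}\Big)\mathcal K^{1-\frac{-1+\alpha+c}{\alpha c}},$$

where $\mathcal K=\mathcal K(\alpha,\varphi,\theta)>0$ on the range of integration; the second integral follows from the substitution $s=x^{c\alpha/(\alpha-1)}$.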

Let $\beta = (1-c)/(\alpha c)$, so that $\beta < 0$. Then the left-hand side of the displayed equation above equals

$$\tag{1}\Gamma (1 + \alpha \beta )\frac{{\Gamma ( - \beta )}}{\alpha }\cos (\frac{\pi }{2}(1 + \alpha \beta ) + \varphi \beta )$$ while RHS is $$\tag{2} \Gamma \left( {1 - \beta + \alpha \beta} \right) \int_{ - \varphi /\alpha }^{\pi /2} {\frac{{{{ {\sin^{\alpha\beta} (\alpha \theta + \varphi )} }}{{\cos }^{(1 - \alpha )\beta}}((\alpha - 1)\theta + \varphi )}}{{{{\cos^\beta \theta }}}}d\theta } $$
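The parameter bookkeeping behind $(1)$ and $(2)$ can be sketched as follows. With $\beta=(1-c)/(\alpha c)$,

$$\frac1c = 1+\alpha\beta,\qquad \frac{-1+\alpha+c}{\alpha c} = 1-\beta+\alpha\beta,\qquad 1-\frac{-1+\alpha+c}{\alpha c} = (1-\alpha)\beta,$$

so $\mathcal K^{(1-\alpha)\beta}$ is exactly the integrand of $(2)$. For $(1)$, assuming principal branches, $(-it)^{-1/c}=e^{i\pi(1+\alpha\beta)/2}\,t^{-1-\alpha\beta}$ for $t>0$, and the formula $\int_0^\infty t^{s-1}e^{-wt^\alpha}\,dt=\alpha^{-1}\Gamma(s/\alpha)\,w^{-s/\alpha}$ ($s>0$, $\Re w>0$) with $s=-\alpha\beta$, $w=e^{i\varphi}$ gives

$$\Re\int_0^\infty (-it)^{-1/c}e^{-t^\alpha e^{i\varphi}}\,dt = \Re\Big[e^{i\frac\pi2(1+\alpha\beta)}\,\frac{\Gamma(-\beta)}{\alpha}\,e^{i\varphi\beta}\Big] = \frac{\Gamma(-\beta)}{\alpha}\cos\Big(\frac\pi2(1+\alpha\beta)+\varphi\beta\Big).$$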

We proved the equality $(1) = (2)$ under the following hypotheses:

- $0<\alpha<1, \beta<0, \alpha\beta>-1$

- $-\pi/2<\varphi<\pi/2$ is such that all powers in $(2)$ have positive bases throughout the range of integration.
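The equality $(1)=(2)$ can be sanity-checked numerically for a few admissible parameters, not only for the special choice of $\varphi$ used next. For $0<\alpha<1$, the condition $|\varphi|<\alpha\pi/2$ suffices for all bases in $(2)$ to be positive on the open interval. The sketch below uses a hand-rolled tanh-sinh rule, since $(2)$ can have an integrable singularity at $\theta=-\varphi/\alpha$; the function names and sample triples are illustrative assumptions.

```python
import math


def expr_1(alpha, beta, phi):
    """Expression (1)."""
    return (math.gamma(1 + alpha * beta) * math.gamma(-beta) / alpha
            * math.cos(0.5 * math.pi * (1 + alpha * beta) + phi * beta))


def expr_2(alpha, beta, phi, h=1.0 / 64, tmax=4.0):
    """Expression (2); the theta-integral is done by tanh-sinh quadrature."""
    lo, hi = -phi / alpha, 0.5 * math.pi
    length = hi - lo
    n = int(round(tmax / h))
    total = 0.0
    for k in range(-n, n + 1):
        t = k * h
        u = 0.5 * math.pi * math.sinh(t)
        dl = length / (1.0 + math.exp(-2.0 * u))  # theta - lo
        du = length / (1.0 + math.exp(2.0 * u))   # hi - theta
        theta = lo + dl if dl < du else hi - du
        s = math.sin(alpha * dl)  # sin(alpha*theta + phi), accurate near lo
        c = math.sin(du)          # cos(theta), accurate near hi
        m = math.cos((alpha - 1) * theta + phi)
        f = s ** (alpha * beta) * m ** ((1 - alpha) * beta) / c ** beta
        # d(theta)/dt for the tanh-sinh substitution on [lo, hi]
        w = 0.25 * math.pi * length * math.cosh(t) / math.cosh(u) ** 2
        total += w * f
    return math.gamma(1 - beta + alpha * beta) * h * total


# sample triples with 0 < alpha < 1, beta < 0, alpha*beta > -1, |phi| < alpha*pi/2
for alpha, beta, phi in [(0.5, -1.0, 0.3), (0.7, -1.0, -0.2), (0.3, -2.0, 0.1)]:
    print(alpha, beta, phi, expr_1(alpha, beta, phi), expr_2(alpha, beta, phi))
```

For $\alpha=1/2$, $\beta=-1$ the integral in $(2)$ can be done by hand (the integrand is $\sqrt2\cos\theta\,(\sin2\varphi+\sin\theta)^{-1/2}$), and both sides reduce to $2\sqrt\pi\cos(\pi/4-\varphi)$, which makes the first triple a convenient reference case.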

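In particular, the hypotheses hold for $\varphi = \pi \alpha/2$. Dividing $(1)=(2)$ by $\Gamma(1-\beta+\alpha\beta)$ and substituting $\theta\mapsto\theta-\pi/2$ in the integral gives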

$$\begin{aligned}&\quad \int_{-\pi/2}^{\pi/2} \frac{\sin^{\alpha\beta}(\alpha\theta + \frac{\pi}{2}\alpha)\cos^{(1-\alpha)\beta}((\alpha - 1)\theta + \frac{\pi}{2}\alpha)}{(\cos\theta)^{\beta}}\,d\theta = \int_0^\pi \frac{\sin^{\alpha\beta}(\alpha\theta)\,\sin^{(1-\alpha)\beta}((1-\alpha)\theta)}{\sin^{\beta}\theta}\,d\theta \\
& = \frac{\Gamma(1 + \alpha\beta)}{\Gamma(1 - \beta + \alpha\beta)}\frac{\Gamma(-\beta)}{\alpha}\cos\left(\frac{\pi}{2} + \pi\alpha\beta\right)\end{aligned}$$
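The last expression can be brought into the form stated at the beginning. A sketch, using $\Gamma(z)\Gamma(1-z)=\pi/\sin(\pi z)$ with $z=-\alpha\beta$ together with $\Gamma(1+z)=z\,\Gamma(z)$:

$$\begin{aligned}\frac{\Gamma(1+\alpha\beta)\Gamma(-\beta)}{\alpha\,\Gamma(1-\beta+\alpha\beta)}\cos\Big(\frac\pi2+\pi\alpha\beta\Big) &= -\frac{\Gamma(1+\alpha\beta)\sin(\pi\alpha\beta)\,\Gamma(-\beta)}{\alpha\,\Gamma(1-\beta+\alpha\beta)} = \frac{\pi\,\Gamma(-\beta)}{\alpha\,\Gamma(-\alpha\beta)\,\Gamma(1-\beta+\alpha\beta)}\\ &= \frac{\pi\,\Gamma(1-\beta)}{\Gamma(1-\beta+\alpha\beta)\,\Gamma(1-\alpha\beta)},\end{aligned}$$

using $\alpha\,\Gamma(-\alpha\beta)=-\Gamma(1-\alpha\beta)/\beta$ and $\Gamma(-\beta)=-\Gamma(1-\beta)/\beta$ in the last step.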

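After simplifying with the reflection formula $\Gamma(z)\Gamma(1-z)=\pi/\sin(\pi z)$, this is exactly the claimed identity with $a=\alpha$, $b=\beta$, established so far only for $-1/\alpha<\beta<0$. Both sides are analytic in $\beta$ for $\Re\beta<1$ (the integral converges there, and $1/\Gamma$ is entire), so by analytic continuation the identity also holds for $0<\beta<1$, completing the proof.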