Also called a stochastic matrix. Let

$A=[a_{ij}]$ be a matrix over $\mathbb{R}$ with

$0\le a_{ij} \le 1 \forall i,j$

$\sum_{i}a_{ij}=1 \ \forall j$

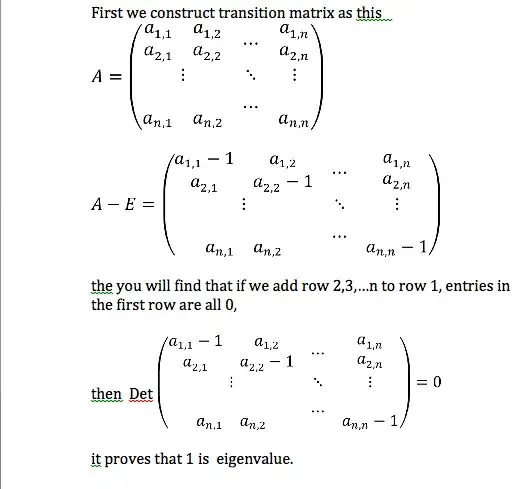

i.e., the sum along each column of $A$ is 1. I want to show that $A$ has an eigenvalue of 1. The way I've seen this done is to note that $A^T$ clearly has an eigenvalue of 1, and that the eigenvalues of $A^T$ are the same as those of $A$. This proof, however, relies on determinants, matrix transposes, and the characteristic polynomial of a matrix, none of which is a particularly intuitive concept. Does anyone have an intuitive alternative proof (or a sketch of one)?
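
For reference, here is the argument I mean, spelled out. It assumes the column sums of $A$ equal 1, and writes $\mathbf{1}$ for the all-ones column vector and $I$ for the identity matrix (my notation, not defined above):

$$(A^T\mathbf{1})_j = \sum_{i} (A^T)_{ji}\cdot 1 = \sum_{i} a_{ij} = 1 \quad \forall j, \qquad \text{so } A^T\mathbf{1} = \mathbf{1}.$$

$$\det(A-\lambda I) = \det\big((A-\lambda I)^T\big) = \det(A^T-\lambda I).$$

The first line says that 1 is an eigenvalue of $A^T$; the second says that $A$ and $A^T$ share a characteristic polynomial, hence share eigenvalues. It is this second step that I find unintuitive.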

My goal is to understand intuitively why, if $A$ defines the transition probabilities of some Markov chain, then $A$ has an eigenvalue of 1. I'm studying Google's PageRank algorithm.
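
To make the claim concrete, here is a small numerical sanity check in plain Python. It is only a sketch, not a proof: the 3-page matrix `A` and the helper names `mat_vec` and `power_iterate` are made up for illustration, and I assume the column-sum convention (column $j$ holds the probabilities of leaving page $j$). Repeatedly applying `A` to a probability vector seems to settle on a vector $v$ with $Av \approx v$, which is what an eigenvalue of 1 would look like.

```python
# Hypothetical 3-page link graph (made-up numbers). Column j holds the
# probabilities of moving from page j to each page i, so each column sums to 1.
A = [
    [0.0, 0.5, 0.3],
    [0.6, 0.0, 0.7],
    [0.4, 0.5, 0.0],
]


def mat_vec(M, v):
    """Return the matrix-vector product M v as a list."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]


def power_iterate(M, steps=200):
    """Apply M repeatedly, starting from the uniform distribution."""
    n = len(M)
    v = [1.0 / n] * n
    for _ in range(steps):
        v = mat_vec(M, v)
    return v


# Check the column-sum assumption on this example.
column_sums = [sum(A[i][j] for i in range(3)) for j in range(3)]

v = power_iterate(A)
Av = mat_vec(A, v)

# Largest componentwise gap between A v and v; close to 0 if A v = v.
residual = max(abs(x - y) for x, y in zip(Av, v))

print("column sums:", column_sums)
print("v:", v)
print("max |Av - v|:", residual)
```

Because each column sums to 1, every multiplication by `A` preserves the total of the entries of `v`, so the iterates stay probability vectors. That conservation is the kind of intuition I am hoping a proof can capture.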