Currently I am studying logistic regression.

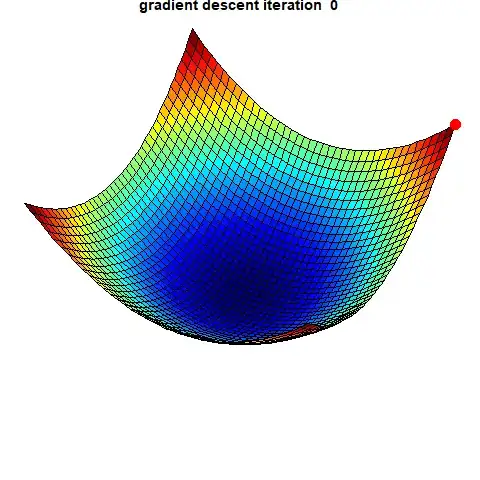

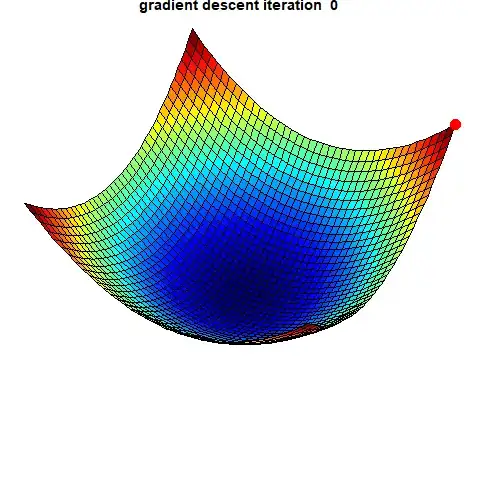

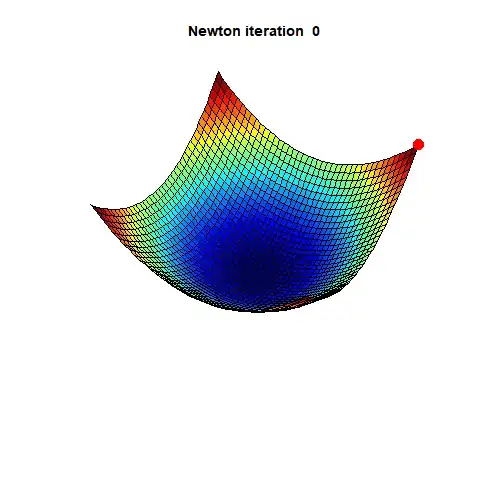

I read online that gradient descent is a first-order optimisation algorithm, and that Newton's method is a second-order optimisation algorithm.

Does that mean that gradient descent cannot be used for multivariate optimisation, and that Newton's method cannot be used for univariate optimisation? Or could Newton's method be carried out with a first-order Taylor polynomial and still be different from gradient descent?
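To make the question concrete, here is a minimal sketch of the two update rules as I currently understand them, written for a single variable. Everything in it is my own assumption for illustration: the test function `f(x) = exp(x) - 2x`, the helper names `gradient_descent_1d` and `newton_1d`, the step size, and the iteration counts. Gradient descent only evaluates the first derivative, while Newton's method also divides by the second derivative.

```python
import math


def gradient_descent_1d(df, x0, step=0.1, iters=200):
    """Minimise a 1-D function using only its first derivative df."""
    x = x0
    for _ in range(iters):
        # Move against the slope by a fixed (hypothetical) step size.
        x = x - step * df(x)
    return x


def newton_1d(df, d2f, x0, iters=20):
    """Minimise a 1-D function using its first and second derivatives."""
    x = x0
    for _ in range(iters):
        # Scale the slope by the inverse curvature instead of a fixed step.
        x = x - df(x) / d2f(x)
    return x


# Hypothetical test function f(x) = exp(x) - 2x, whose minimiser is x = ln 2.
def df(x):
    return math.exp(x) - 2.0   # f'(x)


def d2f(x):
    return math.exp(x)         # f''(x), always positive


x_gd = gradient_descent_1d(df, x0=0.0)
x_newton = newton_1d(df, d2f, x0=0.0)
print(x_gd, x_newton, math.log(2.0))
```

If I have this right, the multivariate versions would replace `df(x)` with the gradient and `1 / d2f(x)` with the inverse Hessian, which is part of why I am unsure what "order" is supposed to restrict.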

These two pages are what made me question this:

- Univariate Newton's Method: http://fourier.eng.hmc.edu/e176/lectures/NM/node20.html

- Multivariate Gradient Descent: http://fourier.eng.hmc.edu/e176/lectures/NM/node27.html