I'm solving the diffusion equation

$$\frac{\partial u}{\partial t}=D\frac{\partial^2u}{\partial^2x}$$

subject to the BCs $\partial_xu(x=0)=0$ and $u(x)=1$, using the Crank Nicolson scheme. For the middle points I'm using

$$\frac{u_i^{n+1}-u_i^n}{\Delta t}=\frac{1}{2}D\frac{u_{i+1}^n-2u_i^n+u_{i+1}^n}{\Delta x^2}+\frac{1}{2}D\frac{u_{i+1}^{n+1}-2u_i^{n+1}+u_{i+1}^{n+1}}{\Delta x^2},$$

whereas in the left point, I make use of the fact that $\frac{u_1-u_{-1}}{2\Delta x}=0\implies u_1=u_{-1}$ and thus write

$$\frac{u_0^{n+1}-u_0^n}{\Delta t}=\frac{1}{2}D\frac{2(u_{1}^n-u_0^n)}{\Delta x^2}+\frac{1}{2}D\frac{2(u_{1}^{n+1}-u_0^{n+1})}{\Delta x^2}.$$

When an initial condition $u(0,x)$ is given, I can also find the analytical solution of this equation and thus compare the simulation with theory.

I am evaluating the absolute error $||\mathbf{T_{simul}}-\mathbf{T_{anal}}||$ at each time $t$.

When $t\rightarrow\infty$, the solution goes to zero with machine precision, which makes sense.

Now let's say I pick up a time $t$, when heat is still diffusing, and evaluate the error, and then I make the same thing for a different time-step $\Delta t$.

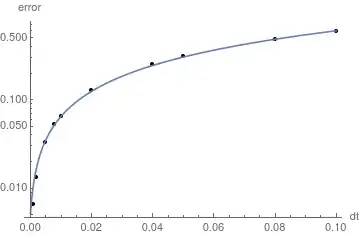

The resultant log-plot of the error with $\Delta t$ is the following:

The points fit very well with a linear function, but because this is a second-order scheme in $\Delta t$, I was expecting that the error would go with $(\Delta t)^2$ and not $\Delta t$!

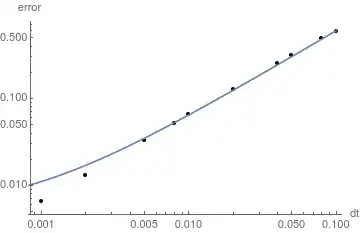

EDIT: As suggested in the comments, the log-log plot is:

(This fit is made to a function of the type $a+b(\Delta t)^2$. I have been told however that the fit should not contain the constant term.)

FINAL EDIT:

With the help of @LutzL I have realized I was making one mistake in my code, namely I was comparing theory and simulation at times separated precisely by $\Delta t$.

Besides that, I have found the following very interesting results:

1) If I use as initial condition

$$u(x,0)=1.0+\cos\left(\frac{\pi}{2}x\right),$$

which gives the exact solution (with $D=1$)

$$u(x,t)=1.0+e^{-\pi^2t/4}\cos\left(\frac{\pi}{2}x\right)$$

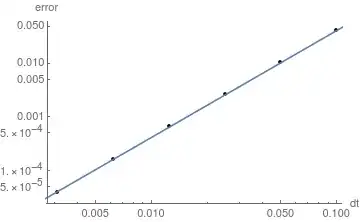

in the domain $x\in[0,1]$, I get that the error indeed goes with $(\Delta t)^2$:

The blue line gives the fit $4.106(\Delta t)^2$

2) Now if I use as initial condition

$$u(x,0)=1.0+\cos\left(\frac{x}{2}\right),$$

which gives the exact solution (with $D=1$)

$$u(x,t)=1.0+e^{-t/4}\cos\left(\frac{x}{2}\right)$$

in the domain $x\in[0,\pi]$, I get the following trend for the error:

where the blue line is the fit $0.00135462 + 0.00283811x + 0.0239431x^2$

It is strange that the simple change of variable $\pi x\rightarrow x$ makes this behaviour. Note also that in both cases 1) and 2) I have kept $\Delta r=0.001$ fixed.