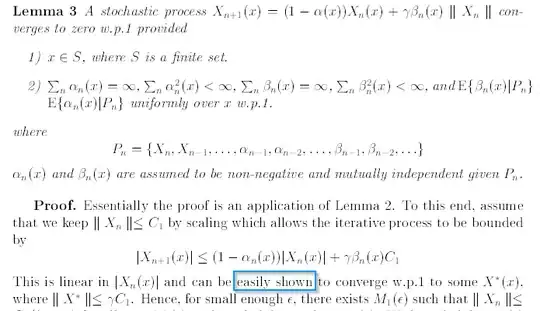

In the paper *On the Convergence of Stochastic Iterative Dynamic Programming Algorithms* (Jaakkola et al. 1994), the authors claim that the statement above is "easy to show". I am starting to suspect that it isn't easy and that they might just have wanted to avoid showing it. But I thought I would post this to see whether I missed something obvious.

What I have so far:

- Since $X_n$ is bounded in a compact interval, it certainly has convergent subsequences.

- $|X_{n+1}-X_n|\le (\alpha_n+\gamma \beta_n)C_1$, which implies that the increments converge to zero. But that alone isn't enough to make $X_n$ a Cauchy sequence, even together with the first statement.

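- $\liminf X_n\ge 0$: since $\gamma\beta_n(x)\|X_n\|\ge 0$, $$ \begin{align} X_{n+1}(x)&=(1-\alpha_n(x))X_n(x) + \gamma\beta_n(x)\|X_n\| \\ &\ge (1-\alpha_n(x))X_n(x)\\ &\ge\prod^n_{k=0}(1-\alpha_k(x))X_0(x) \to 0, \end{align}$$ where the last inequality comes from iterating the previous one (which needs $0\le\alpha_k(x)\le 1$), and the product tends to zero because $\sum_n \alpha_n(x)=\infty$ (cf. infinite products).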

- Because $\alpha_n(x) \to 0$, we know that $1-\alpha_n(x)\ge 0$ for almost all $n$, and if $X_n(x)\ge 0$ then $$ X_{n+1}(x) = \underbrace{(1-\alpha_n(x))}_{\ge 0} \underbrace{X_n(x)}_{\ge 0} +\underbrace{\gamma\beta_n(x)\|X_n\|}_{\ge 0}. $$ Thus, from that point on, once one element of the sequence is nonnegative, all following elements are nonnegative too. The sequences that stay negative converge to zero (because $\liminf X_n\ge 0$). The other sequences are nonnegative for almost all $n$.

- For $\|X_n\|$ not to converge, we would need $\|X_n\|=\max_x X_n(x)$ for infinitely many $n$. If it were equal to the maximum over the negative sequences for almost all $n$, it would converge, since $$\|X_n\|=\max_x \bigl(-X_n(x)\bigr) \le \max_x \Bigl(-\prod_{k=0}^{n-1} (1-\alpha_k(x)) X_0(x)\Bigr) \to 0. $$

- If we set $\beta_n=0$, we have $$X_m=\prod_{k=n}^{m-1} (1-\alpha_k)X_n \to 0 \quad (m\to\infty).$$ So my intuition is: since $\beta_n$ is smaller than $\alpha_n$ (on average), replacing $\beta_n$ with $\alpha_n$ should be harmless, because it can only increase $X_{n+1}$, i.e. push the (eventually nonnegative) iterates further away from zero. So I am thinking of something along the lines of $$X_{n+1}\sim (1-\alpha_n)X_n +\gamma \alpha_n X_n = (1-(1-\gamma)\alpha_n)X_n,$$ which is fine since $\sum(1-\gamma)\alpha_n =\infty$ for $\gamma\in(0,1)$.
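To get a feel for the intuition in the last point, here is a minimal numerical sketch in Python with NumPy. It assumes, purely for illustration, a finite state space, the max norm, a common step size $\alpha_n = 1/(n+2)$ for every $x$, and $\beta_n(x)$ drawn uniformly from $[0,\alpha_n)$, so that $\beta_n\le\alpha_n$ holds pointwise rather than only on average. The function name `simulate` and all parameter values are hypothetical. It tracks $\|X_n\|$ against the deterministic bound $\prod_k(1-(1-\gamma)\alpha_k)\|X_0\|$ and checks that components stay nonnegative once they become nonnegative.

```python
import numpy as np


def simulate(x0, gamma, n_steps, seed=0):
    """Iterate X_{n+1}(x) = (1 - a_n) X_n(x) + gamma * b_n(x) * ||X_n||_inf.

    Hypothetical choices for illustration only (not taken from the paper):
      a_n = 1 / (n + 2) for every x, so sum a_n = inf and 0 < a_n <= 1/2;
      b_n(x) ~ Uniform[0, a_n), i.e. beta <= alpha holds pointwise.
    Returns the sup-norm trajectory, the deterministic bound
    prod_k (1 - (1 - gamma) a_k) * ||X_0||, and whether every component
    stayed >= 0 after first becoming >= 0.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float)
    norm = np.max(np.abs(x))  # ||X_0|| in the max norm
    bound = norm
    norms, bounds = [norm], [bound]
    seen_nonneg = x >= 0  # components that were >= 0 at some earlier step
    sign_ok = True
    for n in range(n_steps):
        a = 1.0 / (n + 2)
        b = rng.uniform(0.0, a, size=x.shape)
        # Update uses ||X_n|| from the previous step.
        x = (1.0 - a) * x + gamma * b * norm
        # With 1 - a >= 0 and b >= 0, a nonnegative component cannot turn negative.
        sign_ok = sign_ok and bool(np.all(x[seen_nonneg] >= 0))
        seen_nonneg |= x >= 0
        norm = np.max(np.abs(x))
        # Pointwise beta <= alpha gives ||X_{n+1}|| <= (1 - (1 - gamma) a_n) ||X_n||.
        bound *= 1.0 - (1.0 - gamma) * a
        norms.append(norm)
        bounds.append(bound)
    return np.array(norms), np.array(bounds), sign_ok


norms, bounds, sign_ok = simulate([1.0, -0.5, 0.2, -1.0], gamma=0.5, n_steps=10_000)
print(f"||X_N|| = {norms[-1]:.3e}, bound = {bounds[-1]:.3e}, signs ok: {sign_ok}")
```

Because the pointwise assumption $\beta_n\le\alpha_n$ is stronger than the averaged condition in the question, this only illustrates the $(1-(1-\gamma)\alpha_n)$ intuition; it says nothing about steps where $\beta_n(x)>\alpha_n(x)$.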

But I still need to formalize replacing $\beta_n$ with $\alpha_n$, which only works if I take the expected value, and I don't know whether the infinite sums survive taking the expected value. I also have to justify replacing the norm with a single component. I think I can assume that the norm is the max norm without disrupting later proofs. And since $\liminf X_n\ge 0$, we have $|X_n(x)| - X_n(x) = 2\max(-X_n(x),0)\to 0$, so $|X_n|$ is asymptotically equal to $X_n$.
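Under the stronger, hypothetical assumption that $0\le\beta_n(x)\le\alpha_n(x)\le 1$ holds pointwise (not just on average) and that $x$ ranges over a finite set, the replacement of $\beta_n$ by $\alpha_n$ can be made rigorous with the max norm, without any expectation. Writing $\underline{\alpha}_n := \min_x \alpha_n(x)$ (a notation introduced here only for this sketch):

$$
\begin{align}
|X_{n+1}(x)| &\le (1-\alpha_n(x))|X_n(x)| + \gamma\beta_n(x)\|X_n\| \\
&\le (1-\alpha_n(x))\|X_n\| + \gamma\alpha_n(x)\|X_n\| \\
&= \bigl(1-(1-\gamma)\alpha_n(x)\bigr)\|X_n\| \\
&\le \bigl(1-(1-\gamma)\underline{\alpha}_n\bigr)\|X_n\|,
\end{align}
$$

and therefore, using $1-a\le e^{-a}$,

$$
\|X_m\| \le \prod_{k=n}^{m-1}\bigl(1-(1-\gamma)\underline{\alpha}_k\bigr)\|X_n\| \le \exp\Bigl(-(1-\gamma)\sum_{k=n}^{m-1}\underline{\alpha}_k\Bigr)\|X_n\| \to 0
$$

whenever $\sum_k\underline{\alpha}_k=\infty$. If $\beta_n(x)$ may exceed $\alpha_n(x)$ on individual steps, the second inequality fails, and that is exactly where an averaged condition and an expectation argument would have to take over.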

I am also a bit confused, since the approach I am currently following would show directly that it converges to $0$, while the proof wants me to show that it converges to some $X^*$ and then continues with arguments for why it converges to $0$ from there. This makes me think that I am not on the "intended proof path", so maybe I am missing something obvious that could save me a lot of trouble, especially since they claim it should be easy.