This is not a complete answer.

Please also refer to my answers here and here, where I show in detail that

$$
\frac1{h^n}\Delta_h^n[f](x) = \int_0^{nh} D^n[f](x+t)\, \sigma_n(t) \,dt
$$

where $D^n[f]=f^{(n)}$, $\sigma_n$ is the probability density function of $S_n=X_1+\dotsb+X_n$, and $X_1,\dotsc,X_n\sim\mathrm{Uniform}(0,h)$ are independent. $\sigma_n$ is a rescaled Irwin–Hall density (see here for a derivation of the PDF).
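As a quick sanity check of this identity (not part of the argument), here is a minimal Monte Carlo sketch using only the Python standard library. The helper names, the test function $f=\sin$ and the values of $x$, $h$, $n$ are arbitrary illustrative choices.

```python
import math
import random

def forward_difference(f, x: float, h: float, n: int) -> float:
    """Delta_h^n[f](x) = sum_{k=0}^n (-1)^(n-k) C(n, k) f(x + k*h)."""
    return sum((-1) ** (n - k) * math.comb(n, k) * f(x + k * h) for k in range(n + 1))

def expected_derivative(dnf, x: float, h: float, n: int,
                        samples: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of E[D^n f(x + S_n)], S_n = sum of n iid Uniform(0, h)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        s = sum(rng.uniform(0.0, h) for _ in range(n))
        total += dnf(x + s)
    return total / samples

# Arbitrary test case: f = sin, whose third derivative is -cos.
x, h, n = 0.3, 0.5, 3
lhs = forward_difference(math.sin, x, h, n) / h**n
rhs = expected_derivative(lambda t: -math.cos(t), x, h, n)
print(lhs, rhs)  # should agree up to Monte Carlo error
```

The two printed numbers should differ only by sampling noise, which shrinks like $1/\sqrt{\text{samples}}$.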

The expression that you wrote is simply

$$
\sum_{k=0}^{n} (-1)^k \binom{n}{k} f\!\left(a+\frac{k(b-a)}{n}\right)
= (-1)^n \Delta_{\frac{b-a}n}^n[f](a)
= \left(\frac{a-b}n\right)^n \int_a^b f^{(n)}(t)\, \rho_n(t) \,dt
$$

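where $\rho_n$ is the PDF of $R_n=\frac{X_1+\dotsb+X_n}n$ and $X_1,\dotsc,X_n\sim\mathrm{Uniform}(a,b)$ are independent (a Bates distribution rescaled to $[a,b]$). The last equality is the first identity with $h=\frac{b-a}n$ and $x=a$, since $a+S_n$ then has the same distribution as $R_n$.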
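As a worked check of this identity (an illustration, not needed for the argument), take $f=\exp$, so that $f^{(n)}=\exp$. The left-hand side is a binomial expansion:

$$
\sum_{k=0}^{n}(-1)^k\binom nk e^{a+\frac{k(b-a)}n}
= e^a\bigl(1-e^{\frac{b-a}n}\bigr)^n
= (-1)^n\bigl(e^{b/n}-e^{a/n}\bigr)^n,
$$

while independence gives $\int_a^b e^t\rho_n(t)\,dt=\mathbb E\bigl[e^{R_n}\bigr]=\prod_{i=1}^n\mathbb E\bigl[e^{X_i/n}\bigr]=\left(\frac{n\,(e^{b/n}-e^{a/n})}{b-a}\right)^n$, so that

$$
\left(\frac{a-b}n\right)^n\int_a^b e^t\rho_n(t)\,dt
=\left(\frac{a-b}n\right)^n\left(\frac{n\,(e^{b/n}-e^{a/n})}{b-a}\right)^n
=(-1)^n\bigl(e^{b/n}-e^{a/n}\bigr)^n.
$$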

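From the characterization of analytic functions, if $f\in C^\infty(\mathbb R)$ is analytic, then there are constants $M_{a,b},C_{a,b}>0$ such that

$$
\lVert f^{(n)} \rVert_{L^\infty(a,b)} \leq M_{a,b}\, C_{a,b}^n\, n! \qquad\text{for all } n\geq0.
$$

Since the same constants remain valid on every subinterval of $(a,b)$, we can always shrink the interval $(a,b)$ so that $(b-a)C_{a,b}<e$. For such short intervals, the integral representation above (where $\rho_n$ is a probability density supported in $[a,b]$) gives

$$
\left\lvert\Delta_{\frac{b-a}n}^n[f](a)\right\rvert
\leq \left(\frac{b-a}n\right)^n \lVert f^{(n)} \rVert_{L^\infty(a,b)}
\leq M_{a,b}\,[(b-a)C_{a,b}]^n\, n!\, n^{-n},
$$

which converges to $0$: by Stirling's approximation $n!\,n^{-n}\sim\sqrt{2\pi n}\,e^{-n}$, so the right-hand side behaves like $M_{a,b}\sqrt{2\pi n}\,\bigl[(b-a)C_{a,b}/e\bigr]^n$.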
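Stirling's approximation $n!\,n^{-n}\sim\sqrt{2\pi n}\,e^{-n}$ is what makes $e$ the threshold in this bound. The following small sketch (standard library only; `log_bound` is a hypothetical helper name, and $q$ stands for $(b-a)C_{a,b}$) evaluates $\log\bigl(q^n\,n!\,n^{-n}\bigr)$ via `math.lgamma` for a value of $q$ below, at and above $e$.

```python
from math import e, lgamma, log

def log_bound(q: float, n: int) -> float:
    """log(q^n * n! / n^n), using lgamma(n + 1) = log(n!) to avoid overflow."""
    return n * log(q) + lgamma(n + 1) - n * log(n)

for q in (2.5, e, 3.0):
    print(f"q = {q:.4f}:", [round(log_bound(q, n), 2) for n in (10, 100, 1000)])
```

For $q<e$ the logarithm decreases roughly linearly in $n$; at $q=e$ it grows like $\tfrac12\log(2\pi n)$, so the bound no longer forces convergence (which by itself does not show that the sum diverges).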

This means that your statement is true for analytic functions as soon as the interval $(a,b)$ is sufficiently short, in the sense that $(b-a)C_{a,b}<e$. In particular, every interval contains a subinterval on which it holds, and every $x\in\mathbb R$ lies in an interval on which it holds.

Improvement

Let $f\in C^\infty(\mathbb R)$ be real analytic and $[a,b]\subset\mathbb R$. If the radius of convergence of the Taylor series of $f$ at every point of $[a,b]$ is larger than some constant $r>0$, then the Cauchy estimates allow us to choose $C_{a,b}<r^{-1}$. In particular, if $f$ is the restriction of an entire function, then the statement holds for all segments $[a,b]$. In general, it holds whenever the singularity of the analytic extension of $f$ closest to the segment is at a distance greater than $|b-a|/e$.

This suggests how to look for an analytic function $f:\mathbb R\to\mathbb R$ and an interval $[a,b]$ on which the statement does not hold.

New counterexample

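Consider $f(z)=\frac1{i-z}$, defined on $\mathbb C\setminus\{i\}$, and take $[a,b]=[-2,2]$. I claim that the statement does not hold. The radius of convergence at $0$ is $1$ (the distance from $0$ to the pole $i$), which is not greater than $(2-(-2))/e=4/e\approx1.47$, so the above criterion for the validity of the statement does not apply. (Since the sum is linear in $f$ with real coefficients, if it fails for $f$, then it fails for $\operatorname{Re}f$ or $\operatorname{Im}f$, which are real analytic functions $\mathbb R\to\mathbb R$.)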
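A numerical sketch of the claim (evidence, not a proof): at rational points, $f(x)=\frac1{i-x}=-\frac{x+i}{x^2+1}$ has rational real and imaginary parts, so the sum can be evaluated exactly with Python's `fractions.Fraction`, which avoids the heavy cancellation of the alternating binomial sum in floating point. The function names `f` and `a` are illustrative.

```python
from fractions import Fraction
from math import comb, hypot, log

def f(x: Fraction) -> tuple[Fraction, Fraction]:
    """Exact 1/(i - x) = -(x + i)/(x^2 + 1), returned as (real part, imaginary part)."""
    d = x * x + 1
    return -x / d, Fraction(-1) / d

def a(n: int, left: int = -2, right: int = 2) -> tuple[Fraction, Fraction]:
    """Exact sum_{k=0}^n (-1)^k C(n, k) f(left + k (right - left) / n)."""
    re = im = Fraction(0)
    for k in range(n + 1):
        fr, fi = f(left + Fraction(k * (right - left), n))
        c = (-1) ** k * comb(n, k)
        re += c * fr
        im += c * fi
    return re, im

for n in (10, 50, 100, 200):
    re, im = a(n)
    print(n, log(hypot(float(re), float(im))))  # log|a_n|
```

If the claim is right, the printed values of $\log|a_n|$ should keep growing with $n$ rather than tend to $-\infty$.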

Work in progress

Old incomplete counterexample

I think I have strong evidence that the generalization is false. The idea is to search for a $C^\infty$ function which is badly approximated by polynomials. The first example that comes to mind is

$$
f(x) = \begin{cases}
0 & x\leq0 \\
e^{-1/x} & x>0.
\end{cases}
$$

Working on the interval $[-1,1]$, define

$$
a_n = \sum_{k=0}^n (-1)^k \binom{n}{k} f\left(-1+\frac{2k}{n}\right).
$$
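Because this alternating sum cancels heavily, it is worth evaluating $a_n$ in high precision before reading anything into its plot. Here is a minimal sketch, assuming Python's standard `decimal` module; the function name `a` and the `guard_digits` parameter are illustrative choices.

```python
from decimal import Decimal, localcontext
from math import comb

def a(n: int, guard_digits: int = 30) -> Decimal:
    """a_n = sum_{k=0}^n (-1)^k C(n, k) f(-1 + 2k/n) for the flat function
    f(x) = exp(-1/x) if x > 0, else 0.

    The rounding error of a naive double-precision sum grows roughly like
    2^n times machine epsilon, so we carry about n*log10(2) + guard_digits
    significant decimal digits instead.
    """
    with localcontext() as ctx:
        ctx.prec = int(0.302 * n) + guard_digits
        total = Decimal(0)
        for k in range(n + 1):
            x = Decimal(2 * k - n) / n  # grid point -1 + 2k/n
            if x > 0:                   # f vanishes for x <= 0
                term = comb(n, k) * (-1 / x).exp()
                total += term if k % 2 == 0 else -term
        return total

for n in (10, 50, 100, 200):
    print(n, float(a(n)))
```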

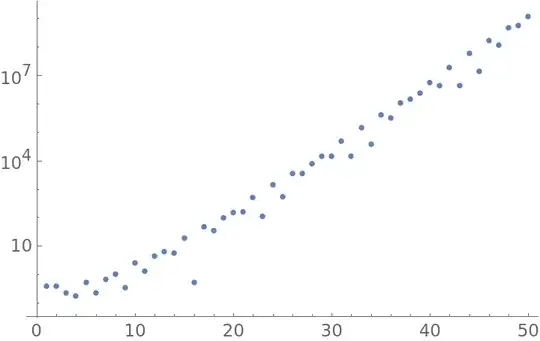

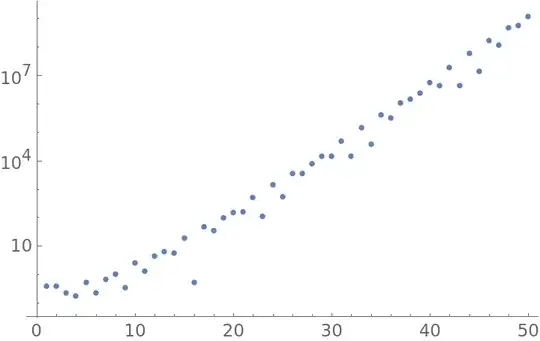

Here is a log-plot of $|a_n|$:

This does not seem to converge to $0$...

I will come back to this question if I manage to prove that this sequence really diverges.