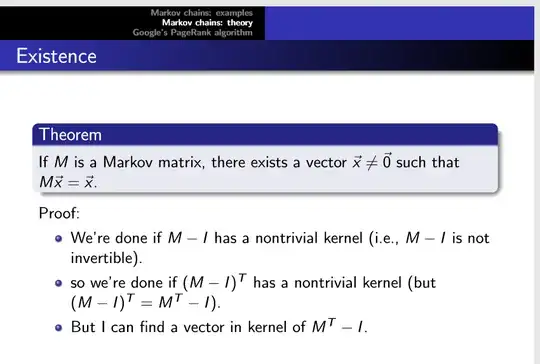

Here's a proof that I found which looks pretty simple, but I can't understand the last step. (A Markov matrix is a square matrix whose columns sum to one; $I$ is the identity matrix; $M^T$ and $I^T$ denote the transposes.)

A quick search returns a few similar posts: "Is it true that for any square row-stochastic matrix one of the eigenvalues is $1$?", "Eigenvector corresponding to eigenvalue $1$ of a stochastic matrix", "Proof that the largest eigenvalue of a stochastic matrix is $1$", and probably many others. – Martin Sleziak Aug 15 '18 at 12:34

2 Answers

The determinants of a square matrix and its transpose are identical. Since $(M-\lambda I)^T = M^T-\lambda I$, this means that $\det(M-\lambda I)=\det(M^T-\lambda I)$, i.e. $M$ and $M^T$ have identical characteristic polynomials and hence the same eigenvalues. When you left-multiply a matrix by a row vector, the result is a linear combination of the rows of the matrix. In particular, left-multiplying by a vector of all $1$s sums the rows of the matrix. Each column of a Markov matrix sums to $1$, therefore $\mathbf 1^TM = \mathbf 1^T$. Transposing gives $M^T\mathbf 1=\mathbf 1$, so $\mathbf 1$ is an eigenvector of $M^T$ with eigenvalue $1$. Therefore $1$ is also an eigenvalue of $M$, and by definition there exists some nonzero vector $\mathbf x$ such that $M\mathbf x=\mathbf x$.
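
To make the argument concrete, here is a minimal sketch in plain Python using exact fractions. The $3\times 3$ example matrix and the helper names (`ones_row_times`, `null_vector`, etc.) are illustrative choices, not part of the original answer. It checks that $\mathbf 1^TM=\mathbf 1^T$ and $M^T\mathbf 1=\mathbf 1$, then finds a nonzero $\mathbf x$ with $M\mathbf x=\mathbf x$ by solving $(M-I)\mathbf x=\mathbf 0$ with Gauss-Jordan elimination.

```python
from fractions import Fraction as F

def ones_row_times(M):
    """Compute 1^T M. Left-multiplying by the all-ones row vector adds up
    the rows of M, so entry j of the result is the sum of column j."""
    n = len(M)
    return [sum(M[i][j] for i in range(n)) for j in range(n)]

def transpose(M):
    return [list(col) for col in zip(*M)]

def mat_vec(M, v):
    """Ordinary matrix-vector product M v."""
    return [sum(a * b for a, b in zip(row, v)) for row in M]

def minus_identity(M):
    n = len(M)
    return [[M[i][j] - (1 if i == j else 0) for j in range(n)] for i in range(n)]

def null_vector(A):
    """Return one nonzero x with A x = 0, or None if A is invertible.
    Uses exact Gauss-Jordan elimination (reduced row echelon form)."""
    n = len(A)
    R = [[F(v) for v in row] for row in A]
    pivot_cols = []
    r = 0
    for c in range(n):
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, n) if R[i][c] != 0), None)
        if p is None:
            continue  # no pivot here: column c is a free variable
        R[r], R[p] = R[p], R[r]
        pv = R[r][c]
        R[r] = [v / pv for v in R[r]]
        # Clear column c in every other row.
        for i in range(n):
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivot_cols.append(c)
        r += 1
        if r == n:
            break
    free = [c for c in range(n) if c not in pivot_cols]
    if not free:
        return None
    # Set the first free variable to 1 and solve for the pivot variables.
    fcol = free[0]
    x = [F(0)] * n
    x[fcol] = F(1)
    for row_idx, c in enumerate(pivot_cols):
        x[c] = -R[row_idx][fcol]
    return x

# Example Markov matrix: every column sums to 1.
M = [
    [F(1, 2), F(1, 4), F(0)],
    [F(1, 4), F(1, 2), F(1, 3)],
    [F(1, 4), F(1, 4), F(2, 3)],
]

print(ones_row_times(M))              # 1^T M, equal to 1^T
print(mat_vec(transpose(M), [1, 1, 1]))  # M^T 1, equal to 1
x = null_vector(minus_identity(M))    # a nonzero solution of (M - I) x = 0
print(x, mat_vec(M, x))               # M x reproduces x
```

Exact `Fraction` arithmetic is used so that the equalities hold exactly instead of only up to floating-point rounding.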

I am not sure about the notation, as you only made part of the proof available, and the notation is not defined there. In fact, I probably use the reverse setup. So, long story short, this might not be a good answer, and there is no way to tell until you make the problem clear.

But if by Markov matrix you mean a non-negative square matrix whose columns (?) add up to 1, then the reason $M^T-I$ has a nonzero vector in its null space (equivalently, $M^T$ has eigenvalue $1$) is that the all-$1$s vector is trivially an example: $(M^T-I)\mathbf 1=\mathbf 0$.
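
Written out entrywise, assuming the column-sum convention above ($\sum_j M_{ji}=1$ for every column $i$), the check is one line:

```latex
(M^T\mathbf 1)_i \;=\; \sum_{j} (M^T)_{ij}\cdot 1 \;=\; \sum_{j} M_{ji} \;=\; 1
\quad\text{for every } i,
\qquad\text{so}\qquad M^T\mathbf 1=\mathbf 1
\quad\Longleftrightarrow\quad (M^T-I)\,\mathbf 1=\mathbf 0 .
```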

I'm so sorry, but I didn't really understand that. How do we know that the all-$1$s vector is an eigenvector? – Vanessa Aug 15 '18 at 07:55

Could you provide more details before asking more questions, please? Is my guess about the notations correct? – A. Pongrácz Aug 15 '18 at 07:59
