This follows from the multivariate chain rule. Suppose that $E$ depends on $z=(z_1,\ldots,z_n)$, and that each $z_k$ in turn depends on $y=(y_1,\ldots,y_m)$, i.e.

$$ E(z(y))=E(z_1(y),\ldots,z_n(y)). $$

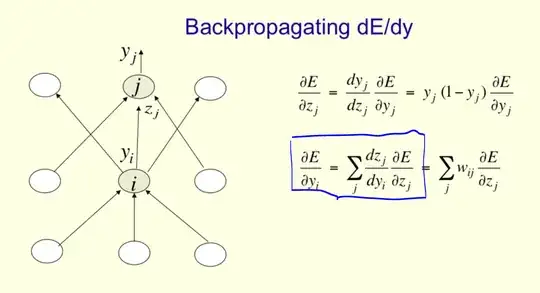

We are interested in how the loss objective $E$ depends on some value in an earlier layer, say the output $y_i$ of the $i$th node in layer $\xi$. That output affects $E$ only through its influence on the next layer (i.e., $\xi+1$), whose values we denote $z$.

Recall that in a fully connected network, each layer depends on all the values of the previous layer, so each $z_k$ depends on every $y_j$.

But we only care about $y_i$ for now, meaning we can ignore the other $y_j$ ($j \neq i$), because we are interested in how perturbing $y_i$ affects $E$, and $y_i$ does not affect $E$ via the other $y_j$.

But $y_i$ does affect $E$ through $z$ (and only through $z$ actually).

And $y_i$ affects every $z_k$.

So we need to take the $\partial E / \partial z_k$ into account, as well as the $\partial z_k / \partial y_i$.

The way to combine these into a single number is specified by the multivariate chain rule:

$$ \frac{\partial E}{\partial y_i} = \sum_{k=1}^{n} \frac{\partial E}{\partial z_k} \frac{\partial z_k}{\partial y_i}. $$
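
To make the formula concrete, here is a minimal numerical sketch that compares the chain-rule sum against a finite-difference estimate. Everything specific in it is an assumption for illustration and not part of the argument above: the layer is taken to be $z_k = \tanh(\sum_j W_{kj} y_j)$, the loss is a squared error against made-up targets `t`, and the names `W`, `t`, `layer`, `loss`, `chain_rule_grad`, and `finite_difference_grad` are hypothetical.

```python
import math

# Assumed toy setup (not from the text): n = 2 outputs z_k, m = 3 inputs y_j.
W = [[0.5, -1.0, 0.3],
     [1.2, 0.4, -0.7]]   # made-up weights
t = [0.1, -0.2]          # made-up targets

def layer(y):
    """Next-layer values: z_k = tanh(sum_j W[k][j] * y[j])."""
    return [math.tanh(sum(w * v for w, v in zip(row, y))) for row in W]

def loss(y):
    """E(z(y)) with E = 0.5 * sum_k (z_k - t_k)^2."""
    return 0.5 * sum((zk - tk) ** 2 for zk, tk in zip(layer(y), t))

def chain_rule_grad(y, i):
    """dE/dy_i as the sum over k of (dE/dz_k) * (dz_k/dy_i)."""
    z = layer(y)
    total = 0.0
    for k, zk in enumerate(z):
        dE_dzk = zk - t[k]                    # derivative of the squared error
        dzk_dyi = (1.0 - zk ** 2) * W[k][i]   # derivative of tanh(W y)_k w.r.t. y_i
        total += dE_dzk * dzk_dyi
    return total

def finite_difference_grad(y, i, eps=1e-6):
    """Central-difference estimate of dE/dy_i, perturbing only y_i."""
    y_plus = list(y)
    y_minus = list(y)
    y_plus[i] += eps
    y_minus[i] -= eps
    return (loss(y_plus) - loss(y_minus)) / (2 * eps)

y = [0.2, -0.4, 0.9]
for i in range(len(y)):
    print(i, chain_rule_grad(y, i), finite_difference_grad(y, i))
```

The two printed columns should agree closely for each $i$, since perturbing $y_i$ changes $E$ only through the $z_k$.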

I'll add links to more formal derivations below.

But the rough intuition is this: $E$ depends on $y_i$ through its effect on each $z_k$ (captured by $\partial z_k / \partial y_i$), so ${\partial E}/{\partial y_i}$ should be a sum of these contributions (if we think of derivatives as a measure of local linear change, we can imagine adding together the perturbations induced in each $z_k$ by tweaking $y_i$).

But not all of these contributions are equally important: if a $z_k$ doesn't affect $E$ much (i.e., ${\partial E}/{\partial z_k}$ is small), then the contribution of $y_i$ through $z_k$ should be down-weighted.

So we take a weighted sum of the $\partial z_k / \partial y_i$, with weights given by ${\partial E}/{\partial z_k}$.
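
As a concrete instance, suppose (purely as an illustrative assumption) that the next layer is linear, $z_k = \sum_j w_{kj} y_j$, with hypothetical weights $w_{kj}$. Then $\partial z_k / \partial y_i = w_{ki}$, and the weighted sum reads

$$ \frac{\partial E}{\partial y_i} = \sum_{k=1}^{n} \frac{\partial E}{\partial z_k}\, w_{ki}. $$

With a nonlinearity applied to each $z_k$, every term would pick up an extra factor from the derivative of that nonlinearity, but the structure of the sum stays the same.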

Notice that when $n=1$ we recover the classical chain rule.
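
Spelled out, with $n=1$ the sum has a single term, and $E$ depends on the one variable $z_1$ only:

$$ \frac{\partial E}{\partial y_i} = \frac{dE}{dz_1} \frac{\partial z_1}{\partial y_i}. $$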

For more details see the links below.

Other questions on the origin of the multivariate chain rule are here, here, and here.

A related question on the role of the chain rule sum in artificial neural networks is here.

The Wikipedia article on the chain rule, particularly the section on higher dimensions, explains it a bit better: https://en.wikipedia.org/wiki/Chain_rule#Higher_dimensions

– ConMan Jan 30 '17 at 04:19