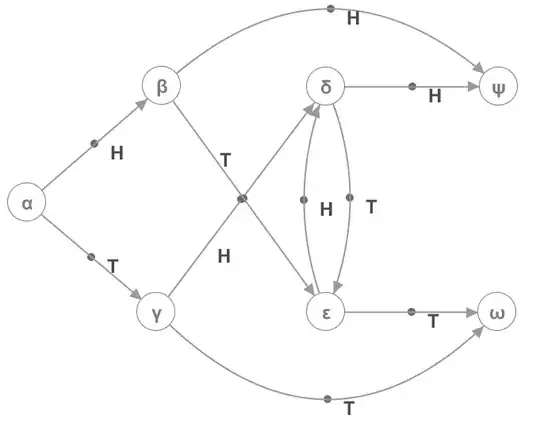

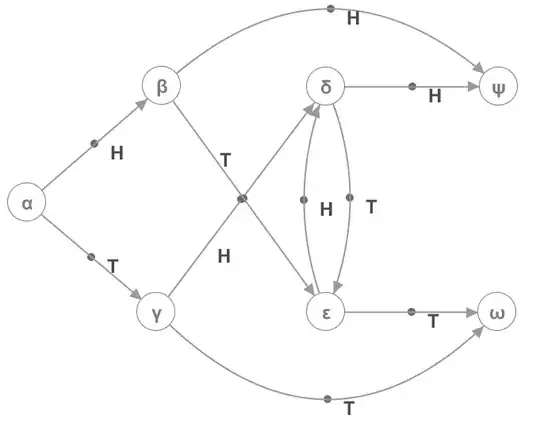

For problems like this, I usually find that it's way easier to graph out the state of the game first, before you attempt to tackle it:

Frustratingly I can't find any decent graph plotting software, but it works like this. Start at node $\alpha$. Each time you flip a coin, look at the edge labelled with that face and follow it to the next node. Keep going until you reach either $\psi$ or $\omega$. Following this graph is identical to playing this game. If you ever toss two consecutive heads, you'll end up in $\psi$. If you ever toss two consecutive tails, you'll end up in $\omega$. If the first toss is a head we'll visit $\beta$ and if the first toss is a tail we'll visit $\gamma$. If the tosses alternate we'll flip-flop between $\delta$ and $\varepsilon$ until we eventually escape. Take a moment to sit and figure out the graph, and possibly draw a better one in your notebook, before you attempt to do anything else with it.
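If a drawing isn't to hand, the graph described above can also be written down as a lookup table and walked mechanically. This is only a sketch: the node names are spelled out in ASCII, `GRAPH` and `walk` are names I've made up, and the edges out of $\gamma$ are my inference by symmetry with $\beta$ rather than something stated explicitly.

```python
# Transition table reconstructed from the description of the graph.
# Assumption: the edges out of gamma mirror those out of beta.
GRAPH = {
    "alpha":   {"H": "beta",  "T": "gamma"},
    "beta":    {"H": "psi",   "T": "epsilon"},
    "gamma":   {"H": "delta", "T": "omega"},
    "delta":   {"H": "psi",   "T": "epsilon"},
    "epsilon": {"H": "delta", "T": "omega"},
}
TERMINAL = {"psi", "omega"}


def walk(tosses):
    """Follow the edges for a string of 'H'/'T' tosses and return the nodes visited."""
    path = ["alpha"]
    for toss in tosses:
        if path[-1] in TERMINAL:
            break  # the game is already over; ignore any further tosses
        path.append(GRAPH[path[-1]][toss])
    return path
```

For example, `walk("HTHTT")` passes through `beta`, then bounces between `epsilon` and `delta` before ending in `omega`.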

Let $\tilde{\beta}$ be the event that we visit node $\beta$ at some point whilst playing the game. Let $\tilde{\omega}$ be the event that we visit $\omega$ at some point. We're now trying to find $\mathbb{P}(\tilde{\beta}|\tilde{\omega})$. A quick Bayesian flip gives us

$$
\mathbb{P}(\tilde{\beta}|\tilde{\omega}) = \frac{\mathbb{P}(\tilde{\omega}|\tilde{\beta})\mathbb{P}(\tilde{\beta})}{\mathbb{P}(\tilde{\omega})}
$$

It's pretty clear that $\mathbb{P}(\tilde{\beta})=\frac{1}{2}$, since the only way for that to happen is for us to get a head on our first throw.

Noting that the coin is fair and the labels "head" and "tail" are completely arbitrary, the probability that we ever reach $\psi$ must equal the probability we ever reach $\omega$. Though I'll leave the proof out, we're guaranteed to eventually reach one of the two, so we must have that $\mathbb{P}(\tilde{\omega})=\frac{1}{2}$, too.
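If you'd rather not take the symmetry and termination claims on trust, a brute-force check is easy enough. The sketch below (the function names `outcome` and `finish_probabilities` are my own) enumerates every sequence of $n$ fair tosses and records exactly how many finish at $\psi$, at $\omega$, or are still undecided.

```python
from fractions import Fraction
from itertools import product


def outcome(tosses):
    """Return 'psi' at the first HH, 'omega' at the first TT, or None if neither occurs."""
    for a, b in zip(tosses, tosses[1:]):
        if a == b:
            return "psi" if a == "H" else "omega"
    return None


def finish_probabilities(n):
    """Exact probability of each outcome within the first n fair tosses."""
    counts = {"psi": 0, "omega": 0, None: 0}
    for seq in product("HT", repeat=n):
        counts[outcome(seq)] += 1
    return {key: Fraction(count, 2 ** n) for key, count in counts.items()}
```

For $n = 10$ this gives $\frac{511}{1024}$ for each of $\psi$ and $\omega$, with $\frac{1}{512}$ still undecided. Only the two strictly alternating sequences survive each round, so the undecided mass halves with every extra toss and both probabilities head to $\frac{1}{2}$.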

The two halves cancel, so this leaves us with the result that $\mathbb{P}(\tilde{\beta}|\tilde{\omega}) = \mathbb{P}(\tilde{\omega}|\tilde{\beta})$.

Let $\alpha, \beta, \gamma, \delta, \varepsilon$ be the events that we are currently in the relevant state. Because there's no way back to $\beta$ after leaving it, $\mathbb{P}(\tilde{\omega}|\tilde{\beta})$ is precisely the probability that we eventually reach $\omega$ given that we're currently at $\beta$. Partitioning over the two transitions out of $\beta$, and noting that $\mathbb{P}(\tilde{\omega}|\psi) = 0$ because the game stops at $\psi$, we have that $\mathbb{P}(\tilde{\omega}|\beta) = \frac{1}{2}(\mathbb{P}(\tilde{\omega}|\psi) + \mathbb{P}(\tilde{\omega}|\varepsilon)) = \frac{1}{2}\mathbb{P}(\tilde{\omega}|\varepsilon)$.

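Now, from $\varepsilon$ a tail takes us straight to $\omega$ (so $\mathbb{P}(\tilde{\omega}|\omega) = 1$) and a head takes us to $\delta$, giving $\mathbb{P}(\tilde{\omega}|\varepsilon) = \frac{1}{2}(\mathbb{P}(\tilde{\omega}|\omega) + \mathbb{P}(\tilde{\omega}|\delta)) = \frac{1}{2} + \frac{1}{2}\mathbb{P}(\tilde{\omega}|\delta)$.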

Also, by similar logic (from $\delta$ a head ends the game at $\psi$ and a tail takes us to $\varepsilon$), $\mathbb{P}(\tilde{\omega}|\delta) = \frac{1}{2}\mathbb{P}(\tilde{\omega}|\varepsilon)$.

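Plugging these two equalities into each other, we get $\mathbb{P}(\tilde{\omega}|\varepsilon) = \frac{1}{2} + \frac{1}{4}\mathbb{P}(\tilde{\omega}|\varepsilon)$, which rearranges to $\mathbb{P}(\tilde{\omega}|\varepsilon) = \frac{2}{3}$, and so $\mathbb{P}(\tilde{\omega}|\tilde{\beta}) = \frac{1}{2} \cdot \frac{2}{3} = \frac{1}{3}$. Since we showed that $\mathbb{P}(\tilde{\beta}|\tilde{\omega}) = \mathbb{P}(\tilde{\omega}|\tilde{\beta})$, the answer is $\mathbb{P}(\tilde{\beta}|\tilde{\omega}) = \frac{1}{3}$.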
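A quick simulation is a reasonable sanity check on the $\frac{1}{3}$. The sketch below plays the game directly with a seeded pseudo-random coin and conditions on games that ended with two tails. The helper names `play` and `estimate` and the default trial count are arbitrary choices of mine.

```python
import random


def play(rng):
    """Toss a fair coin until two consecutive tosses match; return (first toss, final toss)."""
    first = prev = rng.choice("HT")
    while True:
        toss = rng.choice("HT")
        if toss == prev:
            return first, toss
        prev = toss


def estimate(trials=100_000, seed=0):
    """Estimate P(first toss was a head | game ended on two tails)."""
    rng = random.Random(seed)
    ended_tt = head_first = 0
    for _ in range(trials):
        first, last = play(rng)
        if last == "T":  # the game ended at omega
            ended_tt += 1
            head_first += first == "H"  # the game passed through beta
    return head_first / ended_tt
```

Calling `estimate()` should land close to $\frac{1}{3}$, with the usual Monte Carlo noise in the third decimal place or so.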

If you're interested, graphs like this one are called Markov chains, and they're really useful for these sorts of "constantly do random things in sequence" type questions.
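To give a flavour of how the Markov chain view mechanises the calculation, here is a small sketch that finds the probability of eventually reaching $\omega$ from every state by repeatedly averaging over each state's two successors until the values settle. The table `SUCCESSORS`, the function `reach_omega` and the iteration count are my own choices, and the edges out of $\gamma$ are inferred by symmetry.

```python
# Each non-terminal state maps to its two equally likely successors.
# Assumption: gamma's edges mirror beta's.
SUCCESSORS = {
    "alpha":   ("beta", "gamma"),
    "beta":    ("psi", "epsilon"),
    "gamma":   ("delta", "omega"),
    "delta":   ("psi", "epsilon"),
    "epsilon": ("delta", "omega"),
}


def reach_omega(iterations=200):
    """Approximate P(eventually reach omega | current state) by fixed-point iteration."""
    h = {state: 0.0 for state in SUCCESSORS}
    h["psi"], h["omega"] = 0.0, 1.0  # terminal states fix the boundary values
    for _ in range(iterations):
        for state, (a, b) in SUCCESSORS.items():
            h[state] = 0.5 * (h[a] + h[b])  # fair coin: average the two successors
    return h
```

The values for `beta` and `epsilon` should agree with the $\frac{1}{3}$ and $\frac{2}{3}$ derived by hand, and the value for `alpha` with $\mathbb{P}(\tilde{\omega}) = \frac{1}{2}$.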