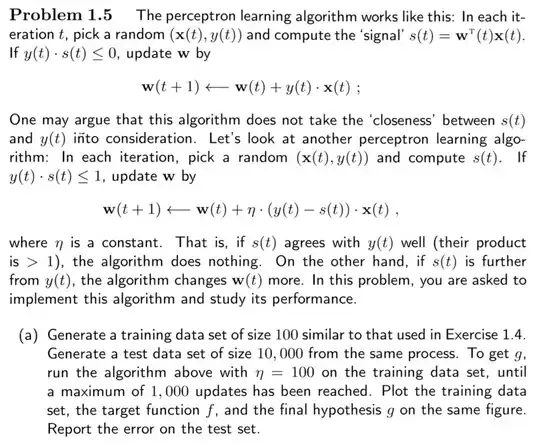

I'm working through the textbook *Learning From Data*, and one of the problems in the first chapter asks the reader to implement the Adaline algorithm from scratch. I chose to do so in Python. The issue I'm running into is that the entries of my weight vector $\textbf{w}$ blow up to infinity almost immediately, long before the algorithm converges. Is there something I am doing incorrectly? As far as I can tell, I am implementing it exactly as the text describes. I've included the problem statement and my Python code. Here $\textbf{y}$ takes the values $-1$ and $1$, so this is a classification problem.
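For reference, this is the update rule as my code expresses it (the notation $s(t)$, $\textbf{x}(t)$, $y(t)$ for the signal, input, and label of the point picked at step $t$ is my own shorthand, so it may not match the book's statement exactly):

$$s(t) = \textbf{w}(t)^{T}\textbf{x}(t), \qquad \text{if } y(t)\,s(t) \le 1: \quad \textbf{w}(t+1) = \textbf{w}(t) + \eta\,\bigl(y(t) - s(t)\bigr)\,\textbf{x}(t)$$

The loop stops once $y\,s > 1$ holds for every training point, or after 1000 iterations.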

    import numpy as np
    import pandas as pd

    #Generate w* vector, the true weights
    dim=2
    wstar=2000*np.random.rand(dim+1)-1000

    #Generate the random sample of size 100
    trainSize=100
    train=pd.DataFrame(2000*np.random.rand(trainSize,dim)-1000)
    train['intercept']=np.ones(trainSize)
    cols=train.columns.tolist()
    cols=cols[-1:]+cols[:-1]
    train=train[cols]

    #Classify the points
    train['y']=np.sign(np.dot(train.iloc[:,0:3],wstar))

    #Now we run the ADALINE algorithm on the training data
    #Declare w vector
    w=np.zeros(dim+1)

    #Column of guesses
    train['guess']=np.ones(trainSize)

    #s column
    train['s']=np.dot(train.iloc[:,0:3],w)

    #Set eta
    eta=5
    iterations=0

    while (all((train['y']*train['s'])>1)==False):
        if iterations>=1000:
            break

        #Picking a random point
        randInt=np.random.randint(len(train))

        #Temporary values for calculating new w
        temp_s=train['s'].iloc[randInt]
        temp_x=train.iloc[randInt,0:3]
        temp_y=train['y'].iloc[randInt]

        #Calculating new w
        if temp_y*temp_s<=1:
            w=w+eta*(temp_y-temp_s)*temp_x

        #Calculating new guesses and s values
        train['s']=np.dot(train.iloc[:,0:3],w)
        train['guess']=np.sign(train['s'])
        iterations+=1
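To make the blow-up easier to reproduce, here is a stripped-down NumPy-only sketch of the same loop that records $\lVert\textbf{w}\rVert$ after every iteration. The function name `adaline_norms`, its parameters, and the fixed seed are my own additions for illustration and are not part of the book's problem. It leaves out the stopping test and simply runs a fixed number of steps.

```python
import numpy as np

def adaline_norms(eta, n_iter=20, dim=2, train_size=100, seed=0):
    """Run the update w <- w + eta*(y - s)*x and return ||w|| after each step.

    Hypothetical helper: same data generation as the code above
    (inputs and true weights uniform in [-1000, 1000)), intercept in column 0.
    """
    rng = np.random.default_rng(seed)
    wstar = rng.uniform(-1000, 1000, dim + 1)
    X = np.hstack([np.ones((train_size, 1)),
                   rng.uniform(-1000, 1000, (train_size, dim))])
    y = np.sign(X @ wstar)

    w = np.zeros(dim + 1)
    norms = []
    for _ in range(n_iter):
        i = rng.integers(train_size)   # pick a random training point
        s = X[i] @ w                   # current signal for that point
        if y[i] * s <= 1:              # update only when y*s <= 1
            w = w + eta * (y[i] - s) * X[i]
        norms.append(np.linalg.norm(w))
    return np.array(norms)

# Compare how the norm of w evolves for the eta used above and a much smaller one.
for eta in (5, 1e-7):
    print(eta, adaline_norms(eta)[:5])
```

Since the inputs are on the order of $10^3$, the very first update with `eta=5` already moves $\textbf{w}$ from zero to a vector of norm at least 5 (the intercept component alone contributes that much), and running the sketch with different `eta` values shows how strongly the growth of the norm depends on that choice.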