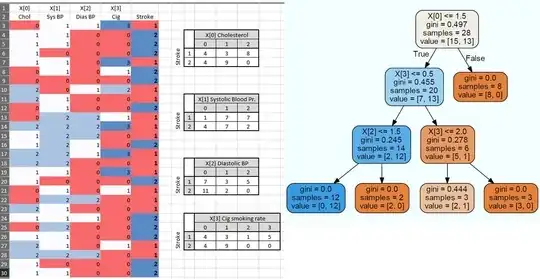

I have a dataset with 4 categorical features (Cholesterol, Systolic Blood pressure, diastolic blood pressure, and smoking rate). I use a decision tree classifier to find the probability of stroke. I am trying to verify my understanding of the splitting procedure done by Python Sklearn. Since it is a binary tree, there are three possible ways to split the first feature which is either to group categories {0 and 1 to a leaf, 2 to another leaf} or {0 and 2, 1}, or {0, 1 and 2}. What I know (please correct me here) is that the chosen split is the one with the highest information gain.

I have calculated the information gain for each of the three grouping scenarios:

{0 + 1 , 2} --> 0.17

{0 + 2 , 1} --> 0.18

{1 + 2 , 0} --> 0.004

However, sklearn's decision tree chose the first scenario instead of the third (please check the picture).

Can anyone please help clarify the reason for selecting the first scenario? is there a priority for splits that results in pure nodes. thus selecting such a scenario although it has less information gain?

I have added the frequency of each class/feature/category so it would be easy to calculate the Gini index