What is the logic behind epochs? For example, training for 1 epoch, 2 epochs, and so on. I don't understand what makes additional epochs give better results.

3 Answers

Two terms relate to how many times the examples are seen during training: epoch and iteration. During each epoch, you feed all the examples in your training set to the network and update it. You can feed the data all at once (not practical for large-scale tasks) or batch by batch; each pass of a single batch is called an iteration. Consequently, each epoch may consist of multiple iterations. We have to pass over the data multiple times because we don't know the shape of the loss surface in advance, i.e. where the local optimum lies along each axis (feature). So we use a learning rate to keep each step small, and we take many steps to get close to the desired point.
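A minimal sketch of that loop, assuming a toy 1-D linear regression (`y = w*x + b`) trained with mini-batch gradient descent on mean squared error. The data, the function names `train` and `mse`, and the values of `epochs`, `batch_size` and `lr` are all hypothetical, chosen only to show that one pass of the data (one epoch) is not enough when each step is kept small:

```python
import random

def mse(w, b, data):
    """Mean squared error of the line y = w*x + b over the whole dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(data, epochs=50, batch_size=8, lr=0.2, seed=0):
    """Mini-batch gradient descent; returns (w, b, loss after each epoch)."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    losses = []
    for _ in range(epochs):                  # one epoch = one pass over all examples
        order = data[:]
        rng.shuffle(order)                   # reshuffle the examples every epoch
        for start in range(0, len(order), batch_size):  # one iteration per batch
            batch = order[start:start + batch_size]
            # gradient of the batch MSE with respect to w and b
            gw = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            gb = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            w -= lr * gw                     # small step, scaled by the learning rate
            b -= lr * gb
        losses.append(mse(w, b, data))       # record the loss at the end of the epoch
    return w, b, losses

# Hypothetical noise-free data drawn from y = 3x + 1, x in [0, 1)
data = [(i / 40, 3 * (i / 40) + 1) for i in range(40)]
w, b, losses = train(data)
print(f"loss after epoch 1: {losses[0]:.4f}, after epoch {len(losses)}: {losses[-1]:.6f}")
```

With 40 examples and batches of 8, each epoch here is 5 iterations; the loss after the first epoch is still large, and it keeps shrinking over later epochs as `w` and `b` approach 3 and 1.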

At the request of one of our friends, here are explicit definitions:

- Epoch: one complete pass of the entire dataset through the network; the number of epochs is how many times the entire dataset has been passed through the network.

- Iteration: one pass of a single chunk of the dataset through the network. These chunks are called batches, and the number of batches you pass through the network in one epoch is the number of iterations per epoch.
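The relationship between the two definitions is simple arithmetic. A small sketch, assuming the last, possibly smaller batch is still used (some data loaders can be configured to drop an incomplete final batch instead); the function names and the 1,000-example / batch-size-32 setting are hypothetical:

```python
import math

def iterations_per_epoch(num_examples: int, batch_size: int) -> int:
    """Number of batches (iterations) needed to pass the whole dataset once.
    The last batch may be smaller than batch_size, hence the ceiling."""
    return math.ceil(num_examples / batch_size)

def total_iterations(num_examples: int, batch_size: int, epochs: int) -> int:
    """Total number of iterations (parameter updates) over the whole run."""
    return epochs * iterations_per_epoch(num_examples, batch_size)

# Hypothetical setting: 1,000 training examples, batches of 32, 10 epochs
print(iterations_per_epoch(1000, 32))   # → 32 (31 full batches plus 1 batch of 8)
print(total_iterations(1000, 32, 10))   # → 320
```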

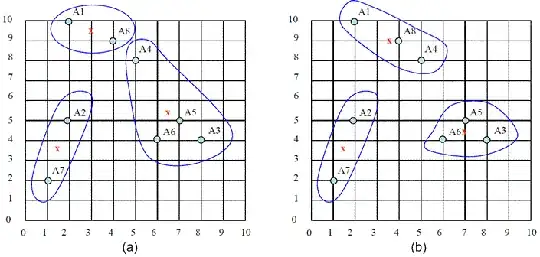

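Put simply, an epoch is one iteration over the data. For example, in k-means clustering, each epoch (assigning every point to its nearest centroid, then moving each centroid to the mean of its points) gives you better clusters.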

After one epoch, the clusters in (a) are refined into the better-fitting clusters in (b).
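A minimal sketch of k-means epochs in plain Python, assuming 2-D points and standard (Lloyd's) k-means; the point set, the deliberately poor starting centroids, and the helper names are hypothetical. It records the inertia (sum of squared distances to the assigned centroid) after every epoch, which never increases from one epoch to the next:

```python
import math

def assign(points, centroids):
    """Assignment step: index of the nearest centroid for every point."""
    return [min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            for p in points]

def update(points, labels, centroids):
    """Update step: move each centroid to the mean of its assigned points."""
    new = []
    for k, c in enumerate(centroids):
        members = [p for p, lab in zip(points, labels) if lab == k]
        if members:
            new.append(tuple(sum(coord) / len(members) for coord in zip(*members)))
        else:
            new.append(c)  # keep an empty cluster's centroid where it was
    return new

def inertia(points, labels, centroids):
    """Sum of squared distances from each point to its assigned centroid."""
    return sum(math.dist(p, centroids[lab]) ** 2 for p, lab in zip(points, labels))

def kmeans(points, centroids, epochs):
    history = []
    for _ in range(epochs):  # one epoch = one assignment + one update over all points
        labels = assign(points, centroids)
        centroids = update(points, labels, centroids)
        history.append(inertia(points, labels, centroids))
    return centroids, history

# Hypothetical data: two square blobs, both starting centroids placed in the left blob
points = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5), (5, 6), (6, 5), (6, 6)]
centroids, history = kmeans(points, [(0, 0), (1, 0)], epochs=4)
print(centroids)
print(history)
```

Here the first epoch still mixes the two blobs; by the second epoch each centroid sits at the centre of one blob, and later epochs no longer change anything.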

An epoch is one loop that trains a model on the dataset, using the whole dataset as a single batch (Batch Gradient Descent, BGD), every mini-batch (Mini-Batch Gradient Descent, MBGD), or every sample (Stochastic Gradient Descent, SGD), and taking one or more steps to update the model's parameters by Gradient Descent (GD). Basically, epochs are repeated so that the model can keep learning, i.e. keep reducing the loss.
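The three variants differ only in the batch size, and therefore in how many updates one epoch contains. A sketch under stated assumptions: a hypothetical 1-D model `y = w * x` with squared-error loss, 100 hypothetical samples, and the hypothetical helpers `run_epoch` and `train`. For simplicity the samples are not shuffled, although SGD and MBGD usually shuffle them every epoch:

```python
def run_epoch(w, data, batch_size, lr):
    """One epoch of gradient descent on y = w * x with mean squared error.
    Returns the updated weight and the number of parameter updates taken."""
    steps = 0
    for start in range(0, len(data), batch_size):
        batch = data[start:start + batch_size]
        grad = sum(2 * (w * x - y) * x for x, y in batch) / len(batch)
        w -= lr * grad
        steps += 1
    return w, steps

def train(data, batch_size, epochs=3, lr=0.5):
    """Repeat epochs; returns the final weight and the updates per epoch."""
    w = 0.0
    for _ in range(epochs):
        w, steps = run_epoch(w, data, batch_size, lr)
    return w, steps

# Hypothetical dataset of 100 samples drawn from y = 2x
data = [(i / 100, 2 * i / 100) for i in range(100)]
for name, batch_size in [("BGD", len(data)), ("MBGD", 10), ("SGD", 1)]:
    w, steps = train(data, batch_size)
    print(f"{name}: {steps} update(s) per epoch, w after 3 epochs = {w:.3f}")
```

With 100 samples, an epoch is 1 update for BGD, 10 updates for MBGD with batches of 10, and 100 updates for SGD; that is why BGD in particular needs many epochs before `w` gets close to 2.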
