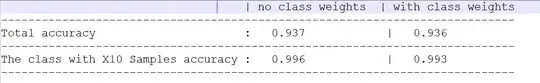

Applying class weights (or resampling) to reach a more-balanced training dataset is usually done specifically to get more predictions of the rarer classes. That will very often decrease overall accuracy, because it moves many predictions from the majority class to the other classes. In exchange, you expect an improvement in other metrics that you care more about, such as recall on the minority classes.
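To make the trade-off concrete, here is a toy sketch in plain Python. It assumes a calibrated binary model and an idealized weighted version of it. The probabilities and labels are made up for illustration. The sketch relies on one fact: if the weighted model learns the reweighted probabilities exactly, its output for the minority class is `w*p / (w*p + 1 - p)`. That value crosses 0.5 exactly where `p` crosses `1 / (1 + w)`, so weighting behaves like lowering the decision threshold. Real trained models only approximately follow this.

```python
# Hypothetical toy data: minority-class probabilities from a model assumed
# to be calibrated, 16 majority-class examples and 4 minority-class examples.
neg_probs = [0.02, 0.03, 0.05, 0.05, 0.08, 0.10, 0.10, 0.12,
             0.15, 0.15, 0.18, 0.25, 0.30, 0.35, 0.40, 0.55]
pos_probs = [0.15, 0.30, 0.45, 0.70]

probs = neg_probs + pos_probs
labels = [0] * len(neg_probs) + [1] * len(pos_probs)


def accuracy_and_recall(probs, labels, threshold):
    """Predict the minority class when p >= threshold; return (accuracy, minority recall)."""
    preds = [1 if p >= threshold else 0 for p in probs]
    correct = sum(p == y for p, y in zip(preds, labels))
    true_pos = sum(p == 1 and y == 1 for p, y in zip(preds, labels))
    return correct / len(labels), true_pos / sum(labels)


# Weight the minority class by the majority/minority ratio (here 16 / 4 = 4).
w = len(neg_probs) / len(pos_probs)

# Unweighted calibrated model: predict the minority class when p >= 0.5.
acc_plain, rec_plain = accuracy_and_recall(probs, labels, 0.5)

# Idealized weighted model: equivalent to thresholding p at 1 / (1 + w) = 0.2.
acc_weighted, rec_weighted = accuracy_and_recall(probs, labels, 1 / (1 + w))

print(f"unweighted: accuracy={acc_plain:.2f}, minority recall={rec_plain:.2f}")
# -> unweighted: accuracy=0.80, minority recall=0.25
print(f"weighted:   accuracy={acc_weighted:.2f}, minority recall={rec_weighted:.2f}")
# -> weighted:   accuracy=0.70, minority recall=0.75
```

On this made-up data, weighting moves five majority-class examples and two minority-class examples into the minority prediction. Accuracy drops, while minority recall triples.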

See "What is the root cause of the class imbalance problem?" and the posts linked from it for more about class imbalance, but I will point out that

- small "real" improvements can be gained by modifying the imbalance

- everything is more complicated for multiclass problems

More data is basically always a good idea, but if you collect data from just one class, you are manipulating the class imbalance again. It's best, at least as a starting point, to reproduce the class distribution of the production dataset (the population you will predict on) faithfully in the training set. Then you can expect the predicted probabilities to be roughly calibrated, and you can make the hard classification decisions based on those probabilities. If you do modify weights or samples, keep in mind the impact that will have on calibration and hence on your decisions.
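Here is a rough sketch of that impact on calibration. It assumes the idealized case, where the model learns the reweighted probabilities exactly. Training with per-class weights `w_k` multiplies each class's probability by its weight and renormalizes. Resampling each class at a rate proportional to `w_k` has the same effect in this idealized case. Dividing by the weights and renormalizing undoes it, recovering probabilities calibrated to the original class distribution. The function names, probabilities, and weights below are hypothetical. A real model will only approximately follow this relationship.

```python
def apply_class_weights(probs, weights):
    """Idealized outputs after training with class weights: p'_k is proportional to w_k * p_k."""
    scaled = [w * p for p, w in zip(probs, weights)]
    total = sum(scaled)
    return [s / total for s in scaled]


def undo_class_weights(weighted_probs, weights):
    """Map weighted outputs back to the original class distribution: p_k proportional to p'_k / w_k."""
    scaled = [p / w for p, w in zip(weighted_probs, weights)]
    total = sum(scaled)
    return [s / total for s in scaled]


def argmax(values):
    """Index of the largest value (the usual multiclass hard decision)."""
    return max(range(len(values)), key=values.__getitem__)


# Hypothetical calibrated probabilities for one example over three classes,
# and hypothetical weights that up-weight the rarer classes 1 and 2.
calibrated = [0.7, 0.2, 0.1]
weights = [1.0, 5.0, 6.0]

weighted = apply_class_weights(calibrated, weights)
recovered = undo_class_weights(weighted, weights)

print("calibrated:", calibrated, "-> predicted class", argmax(calibrated))
print("weighted:  ", [round(p, 3) for p in weighted], "-> predicted class", argmax(weighted))
print("recovered: ", [round(p, 3) for p in recovered])
```

The weighting changes the hard decision for this example from class 0 to class 1, even though nothing about the example itself changed. Undoing the weights before deciding restores the original decision. You can also keep the weighted probabilities and adjust your decision rule instead, as long as you do it knowingly.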