I have been reading the section on precision and recall in Jurafsky's Chapter 14 (Question Answering, Information Retrieval, and RAG), and followed up with this video to understand the two metrics, as well as interpolation and average precision.

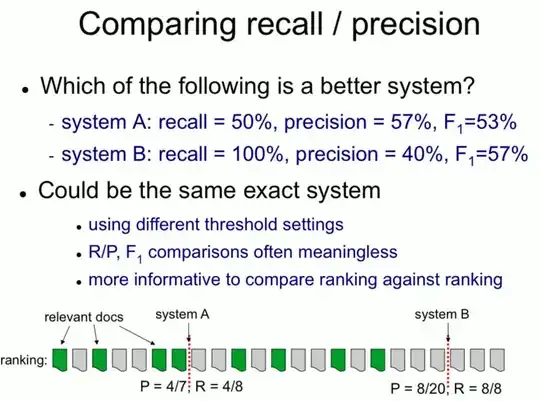

This video, *Evaluation 9: when recall/precision is misleading*, was an eye-opener, as I hadn't understood how precision and recall work when the output is a ranking.

The video description says the following, but does not mention which thresholding strategies exist:

> Recall / precision pairs should not be used for comparing two search algorithms, because search engines output rankings, not sets. Different recall/precision pairs can be observed at different points in the ranking, so any comparison is meaningless unless we pre-specify a thresholding strategy.
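To make the description's point concrete, here is a minimal Python sketch that computes the (precision, recall) pair at every cutoff k of a ranked list. It assumes binary relevance labels and a known total number of relevant documents; the two rankings are hypothetical toy data (not from the video or the book), and `pr_at_each_cutoff` is an illustrative helper name.

```python
def pr_at_each_cutoff(relevance, total_relevant):
    """Return [(k, precision@k, recall@k)] for k = 1..len(relevance).

    relevance: 0/1 labels in ranked order (1 = relevant document).
    total_relevant: number of relevant documents in the whole collection.
    """
    pairs = []
    hits = 0  # relevant documents seen so far, i.e. true positives at cutoff k
    for k, rel in enumerate(relevance, start=1):
        hits += rel
        pairs.append((k, hits / k, hits / total_relevant))
    return pairs


if __name__ == "__main__":
    # Hypothetical labels: two systems ranking the same 8 documents,
    # 4 of which are relevant.
    ranking_a = [1, 1, 0, 0, 0, 1, 0, 1]
    ranking_b = [0, 1, 1, 1, 1, 0, 0, 0]
    rows_a = pr_at_each_cutoff(ranking_a, 4)
    rows_b = pr_at_each_cutoff(ranking_b, 4)
    for (k, pa, ra), (_, pb, rb) in zip(rows_a, rows_b):
        print(f"k={k}: A P={pa:.2f} R={ra:.2f} | B P={pb:.2f} R={rb:.2f}")
```

With these toy labels, cutting both lists at k = 2 makes A look better (P = 1.00, R = 0.50 vs P = 0.50, R = 0.25), while cutting at k = 5 makes B look better (P = 0.80, R = 1.00 vs P = 0.40, R = 0.50). Without a rule for where to cut, either system can be made to "win".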

It is only at 3:42 that a student asks about getting no false negatives (I gathered this from what the professor repeats back). I've put the professor's answer in a quote block below, but it is paraphrased from the YouTube transcript rather than verbatim.

> If you have a constraint that recall has to be 100%, or even 90% or 80%, that gives you extra information: it basically tells you how the thresholds are going to be set, so then you're just looking at precision, and yes, the comparison is valid. It's only a problem in cases where recall is unconstrained.
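The fixed-recall strategy the professor describes can be sketched the same way: choose a target recall, walk down each ranking until that recall is first reached, and compare precision at that cutoff. A target of 100% means walking down until every relevant document has been retrieved, so no false negatives remain. This is a minimal Python sketch assuming binary relevance labels and a known total number of relevant documents; `precision_at_recall` and the toy rankings are hypothetical.

```python
def precision_at_recall(relevance, total_relevant, target_recall):
    """Precision at the shallowest cutoff k whose recall reaches target_recall.

    Returns (k, precision) or None if the ranking never reaches the target,
    i.e. some relevant documents are never retrieved.
    """
    hits = 0
    for k, rel in enumerate(relevance, start=1):
        hits += rel
        if hits / total_relevant >= target_recall:
            # The recall constraint fixes the threshold; only precision varies.
            return k, hits / k
    return None


if __name__ == "__main__":
    # Hypothetical labels: same 8 documents, 4 relevant, two rankings.
    ranking_a = [1, 1, 0, 0, 0, 1, 0, 1]
    ranking_b = [0, 1, 1, 1, 1, 0, 0, 0]
    for target in (0.5, 1.0):
        print(target,
              precision_at_recall(ranking_a, 4, target),
              precision_at_recall(ranking_b, 4, target))
```

On these toy labels, fixing recall at 50% favours A (P = 1.00 at k = 2 vs P ≈ 0.67 at k = 3), while fixing it at 100% favours B (P = 0.80 at k = 5 vs P = 0.50 at k = 8). Each comparison is valid on its own terms, but which system wins depends on the recall level chosen.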

- How could one get no false negatives in this example?

- Are there any other thresholding strategies?

P.S. My ideal tags would have been precision, recall, and threshold strategies, but I don't have enough points to create them.