In CNNs, I understand how convolution works and how a stack of convolution layers (with strides or pooling) gradually reduces the spatial resolution while increasing the channel dimension. For example, a 100x100x3 RGB image may, after a few convolution layers, become a 15x15x512 feature map (15x15 spatially, with 512 channels).
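To make the channel arithmetic of an ordinary convolution concrete, here is a minimal pure-Python sketch of a direct (unpadded, strided) 2D convolution. The names `conv2d`, `shape`, and `rand_tensor`, and the `[C_out][C_in][k][k]` weight layout, are illustrative assumptions rather than any particular library's API. The sketch shows that the number of output channels is simply the number of filters, and that each filter spans every input channel.

```python
import random


def conv2d(x, w, stride=1):
    """Direct, unpadded 2D convolution (illustrative sketch).

    x: input as nested lists, shape [C_in][H][W]
    w: filters, shape [C_out][C_in][k][k] (assumed layout: one k x k x C_in filter per output channel)
    Returns nested lists of shape [C_out][H_out][W_out].
    """
    c_in, h, width = len(x), len(x[0]), len(x[0][0])
    c_out, k = len(w), len(w[0][0])
    assert len(w[0]) == c_in, "each filter must span all input channels"
    h_out = (h - k) // stride + 1
    w_out = (width - k) // stride + 1
    y = [[[0.0] * w_out for _ in range(h_out)] for _ in range(c_out)]
    for o in range(c_out):  # one filter per output channel
        for i in range(h_out):
            for j in range(w_out):
                acc = 0.0
                for c in range(c_in):  # weighted sum over ALL input channels
                    for di in range(k):
                        for dj in range(k):
                            acc += w[o][c][di][dj] * x[c][i * stride + di][j * stride + dj]
                y[o][i][j] = acc
    return y


def shape(t):
    """Shape of a [C][H][W] nested list."""
    return (len(t), len(t[0]), len(t[0][0]))


def rand_tensor(*dims):
    """Nested list of the given dims filled with random values in [-1, 1]."""
    if len(dims) == 1:
        return [random.uniform(-1, 1) for _ in range(dims[0])]
    return [rand_tensor(*dims[1:]) for _ in range(dims[0])]


random.seed(0)
x = rand_tensor(3, 20, 20)   # a small 3-channel "RGB" input
w = rand_tensor(8, 3, 3, 3)  # 8 filters, each 3x3 across all 3 input channels
y = conv2d(x, w, stride=2)
print(shape(y))  # (8, 9, 9): 8 channels because there are 8 filters
```

Spatial size follows `(H - k) // stride + 1`, while the channel count is a free choice made by picking how many filters the layer has.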

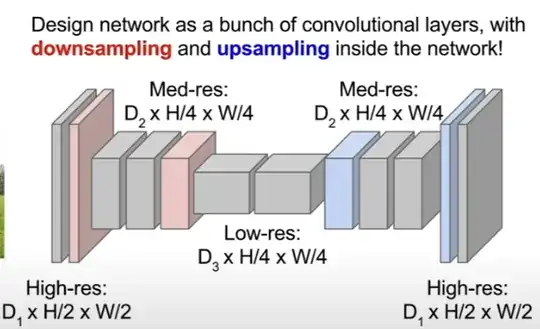

For semantic segmentation, CNNs also upsample using transposed convolutions; see the excerpt of a slide from Stanford's CS231n course. In the upsampling path, transposed convolutions expand the spatial resolution and reduce the channel dimension. For example, we may go from a 15x15x512 layer back to a 100x100x3 layer.
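A transposed convolution can be sketched the same way in "scatter" form: every input pixel, in every input channel, adds a weighted copy of a k x k kernel into every output channel. The function name `conv_transpose2d` and the `[C_in][C_out][k][k]` weight layout below are assumptions for illustration (no padding, no output padding), not a specific framework's implementation.

```python
import random


def conv_transpose2d(x, w, stride=1):
    """Unpadded transposed 2D convolution, scatter form (illustrative sketch).

    x: input, shape [C_in][H][W]
    w: weights, shape [C_in][C_out][k][k] (assumed layout)
    Returns shape [C_out][H_out][W_out] with H_out = (H - 1) * stride + k.
    """
    c_in, h, width = len(x), len(x[0]), len(x[0][0])
    assert len(w) == c_in, "one weight slice per input channel"
    c_out, k = len(w[0]), len(w[0][0])
    h_out = (h - 1) * stride + k
    w_out = (width - 1) * stride + k
    y = [[[0.0] * w_out for _ in range(h_out)] for _ in range(c_out)]
    for c in range(c_in):
        for i in range(h):
            for j in range(width):
                v = x[c][i][j]
                for o in range(c_out):
                    for di in range(k):
                        for dj in range(k):
                            # each input channel contributes, via learned weights,
                            # to every output channel; contributions are summed
                            y[o][i * stride + di][j * stride + dj] += v * w[c][o][di][dj]
    return y


def shape(t):
    """Shape of a [C][H][W] nested list."""
    return (len(t), len(t[0]), len(t[0][0]))


def rand_tensor(*dims):
    """Nested list of the given dims filled with random values in [-1, 1]."""
    if len(dims) == 1:
        return [random.uniform(-1, 1) for _ in range(dims[0])]
    return [rand_tensor(*dims[1:]) for _ in range(dims[0])]


random.seed(0)
x = rand_tensor(16, 4, 4)     # 16 channels, 4x4 spatial
w = rand_tensor(16, 3, 3, 3)  # maps 16 input channels to 3 output channels, 3x3 kernel
y = conv_transpose2d(x, w, stride=2)
print(shape(y))  # (3, 9, 9): spatial 4 -> (4 - 1) * 2 + 3 = 9, channels 16 -> 3
```

In this sketch the output channel count is set by the second dimension of the weights, exactly as the number of filters sets it in an ordinary convolution; the channels are combined by a learned weighted sum rather than by pooling.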

I understand 2D convolutions, so I see how the spatial resolution grows. But I don't understand how the 512 channels are reduced to 3. Is some kind of pooling over channels happening, or is there another mechanism?