I generated a dataset using ChatGPT. The dataset has 9000 records for 6-class sentiment analysis, with classes 0, 1, 2, 3, 4, and 5. I used 3000 records for training and 1200 records each for validation and testing.

These are the class counts:

For training:

5: 622, 3: 614, 0: 593, 4: 571, 2: 604, 1: 596

For Validation:

1: 221, 5: 193, 0: 193, 4: 212, 3: 182, 2: 199

For Testing:

3: 204, 2: 197, 0: 214, 4: 217, 1: 183, 5: 185
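To put a number on how balanced these splits are, here is a minimal sketch using only the Python standard library. The helper names `class_distribution` and `imbalance_ratio` are made up for illustration, and the label list is rebuilt from the training counts listed above rather than read from the real dataset.

```python
from collections import Counter


def class_distribution(labels):
    """Return {label: (count, share_of_total)} ordered by label."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: (n, n / total) for label, n in sorted(counts.items())}


def imbalance_ratio(labels):
    """Largest class count divided by smallest class count (1.0 = perfectly balanced)."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


# Rebuild a label list from the training counts reported in the post.
train_counts = {5: 622, 3: 614, 0: 593, 4: 571, 2: 604, 1: 596}
train_labels = [label for label, n in train_counts.items() for _ in range(n)]

for label, (n, share) in class_distribution(train_labels).items():
    print(f"class {label}: {n} ({share:.1%})")
print(f"imbalance ratio: {imbalance_ratio(train_labels):.2f}")
```

A ratio close to 1 suggests that class imbalance alone is unlikely to explain a low accuracy.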

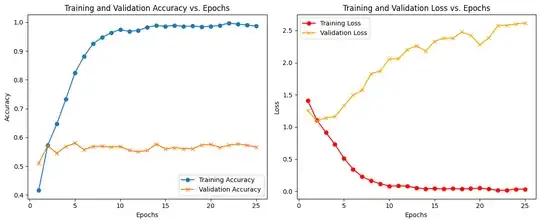

I fine-tuned BERT (`bert-base-uncased`) for 25 epochs with a learning rate of 2e-5.
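For reference, the setup described above could look roughly like the sketch below. It assumes the Hugging Face `transformers` library, which the post does not name. `HYPERPARAMS` and `build_model_and_tokenizer` are hypothetical names, and batch size, maximum sequence length, and optimizer are left out because the post does not state them.

```python
# Values taken from the post; everything else is an assumption.
HYPERPARAMS = {
    "model_name": "bert-base-uncased",
    "num_labels": 6,          # classes 0..5
    "num_train_epochs": 25,
    "learning_rate": 2e-5,
}


def build_model_and_tokenizer(params=HYPERPARAMS):
    """Load the pretrained encoder with a fresh 6-way classification head.

    Assumes the `transformers` package is installed; it is imported lazily
    so the configuration above can be inspected without it.
    """
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(params["model_name"])
    model = AutoModelForSequenceClassification.from_pretrained(
        params["model_name"], num_labels=params["num_labels"]
    )
    return model, tokenizer
```

Calling `build_model_and_tokenizer()` downloads the pretrained weights on first use, so it needs network access.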

These are the results.

Validation

Validation Accuracy: 0.5666666666666667

Validation Classification Report:

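| class | precision | recall | f1-score | support |
|---|---|---|---|---|
| 0 | 0.53 | 0.69 | 0.60 | 193 |
| 1 | 0.54 | 0.50 | 0.52 | 221 |
| 2 | 0.52 | 0.49 | 0.51 | 199 |
| 3 | 0.54 | 0.50 | 0.52 | 182 |
| 4 | 0.55 | 0.60 | 0.57 | 212 |
| 5 | 0.77 | 0.63 | 0.69 | 193 |
| accuracy | | | 0.57 | 1200 |
| macro avg | 0.58 | 0.57 | 0.57 | 1200 |
| weighted avg | 0.57 | 0.57 | 0.57 | 1200 |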
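For readers who want to see how the per-class numbers in such a report are derived, here is a minimal pure-Python sketch. The function names `per_class_report` and `macro_f1` are illustrative, and the toy labels are made up; this is not the code that produced the report above.

```python
from collections import Counter


def per_class_report(y_true, y_pred):
    """Compute precision, recall, F1 and support for every class."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted p, but it was wrong
            fn[t] += 1  # true class t was missed
    report = {}
    for label in sorted(set(y_true) | set(y_pred)):
        predicted = tp[label] + fp[label]
        actual = tp[label] + fn[label]
        precision = tp[label] / predicted if predicted else 0.0
        recall = tp[label] / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[label] = {
            "precision": precision,
            "recall": recall,
            "f1": f1,
            "support": actual,
        }
    return report


def macro_f1(report):
    """Unweighted mean of the per-class F1 scores."""
    return sum(row["f1"] for row in report.values()) / len(report)


# Toy example with made-up labels.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
report = per_class_report(y_true, y_pred)
for label, row in report.items():
    print(label, {k: round(v, 2) for k, v in row.items()})
print("macro F1:", round(macro_f1(report), 2))
```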

Testing

Test Accuracy: 0.5658333333333333

Test Classification Report:

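| class | precision | recall | f1-score | support |
|---|---|---|---|---|
| 0 | 0.57 | 0.68 | 0.62 | 214 |
| 1 | 0.49 | 0.56 | 0.52 | 183 |
| 2 | 0.58 | 0.52 | 0.55 | 197 |
| 3 | 0.59 | 0.49 | 0.53 | 204 |
| 4 | 0.53 | 0.57 | 0.55 | 217 |
| 5 | 0.68 | 0.58 | 0.62 | 185 |
| accuracy | | | 0.57 | 1200 |
| macro avg | 0.57 | 0.56 | 0.57 | 1200 |
| weighted avg | 0.57 | 0.57 | 0.57 | 1200 |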
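The reports show per-class scores but not which classes get mistaken for which. A confusion matrix would show that. Below is a minimal sketch with hypothetical helper names (`confusion_matrix`, `most_confused_pairs`) and made-up toy predictions; it assumes labels are the integers 0 to 5, as in this dataset.

```python
def confusion_matrix(y_true, y_pred, num_classes=6):
    """matrix[t][p] = number of samples with true class t predicted as p."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def most_confused_pairs(matrix, top_k=3):
    """Return the top_k off-diagonal cells as (true, predicted, count)."""
    size = len(matrix)
    pairs = [
        (matrix[t][p], t, p)
        for t in range(size)
        for p in range(size)
        if t != p and matrix[t][p] > 0
    ]
    pairs.sort(reverse=True)
    return [(t, p, n) for n, t, p in pairs[:top_k]]


# Toy example with made-up predictions.
y_true = [0, 0, 0, 1, 1, 2, 5, 5]
y_pred = [0, 1, 1, 1, 2, 2, 4, 5]
matrix = confusion_matrix(y_true, y_pred)
for true_label, pred_label, count in most_confused_pairs(matrix):
    print(f"true {true_label} predicted as {pred_label}: {count} times")
```

If most errors fall on neighbouring classes (for example 1 predicted as 2), that would point toward overlapping class definitions rather than a training problem.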

I also trained the model with different amounts of data, but the graph has the same shape each time, only with different accuracies. My questions are:

- How can I increase the accuracy, and what could the issue be?

- Could the issue be that some words appear in multiple classes (because some words occur in many classes in this dataset)?
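One way to quantify the word overlap mentioned in the second question is to count, for every word, how many distinct classes it appears in. The sketch below uses only the standard library. The tokenizer is a deliberately crude regular expression, not BERT's WordPiece tokenizer, and the function names and example sentences are invented for illustration.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Very rough word splitter: lowercase alphabetic tokens only."""
    return re.findall(r"[a-z']+", text.lower())


def classes_per_word(texts, labels):
    """Map each word to the set of class labels it occurs in."""
    word_classes = defaultdict(set)
    for text, label in zip(texts, labels):
        for word in set(tokenize(text)):
            word_classes[word].add(label)
    return word_classes


def shared_vocabulary_ratio(texts, labels, min_classes=2):
    """Share of the vocabulary that appears in at least min_classes classes."""
    word_classes = classes_per_word(texts, labels)
    if not word_classes:
        return 0.0
    shared = [w for w, c in word_classes.items() if len(c) >= min_classes]
    return len(shared) / len(word_classes)


# Made-up examples; replace with the real texts and labels.
texts = ["the food was terrible", "the food was okay", "the food was amazing"]
labels = [0, 3, 5]
print(f"shared vocabulary: {shared_vocabulary_ratio(texts, labels):.0%}")
```

Some overlap is expected in any text dataset, since common words occur everywhere. What matters more is whether the words that carry the sentiment differ between classes.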