As most of us know, attention focuses on the parts of the input sequence that are most relevant for generating the output sequence.

Ex: The driver could not drive the car fast because it had a problem.

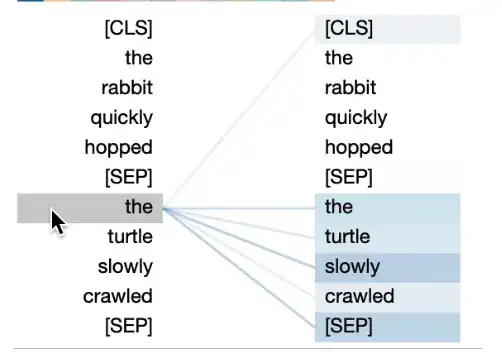

How does attention find the relevant parts of the input for a given token (here, 'it'), and how does it assign a score to each token?
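For reference, assuming the standard scaled dot-product attention from the Transformer, the score that token $i$ (for example 'it') gives to token $j$, and the normalized weight derived from it, can be written as below. Here $x_i$ is the embedding of token $i$, $W_Q$, $W_K$, $W_V$ are learned projection matrices, and $d_k$ is the key dimension:

```latex
q_i = x_i W_Q, \qquad k_j = x_j W_K, \qquad v_j = x_j W_V

s_{ij} = \frac{q_i \cdot k_j}{\sqrt{d_k}}, \qquad
\alpha_{ij} = \frac{\exp(s_{ij})}{\sum_{j'} \exp(s_{ij'})}, \qquad
z_i = \sum_{j} \alpha_{ij}\, v_j
```

Under this formulation, 'it' gives a high weight to a token whose key vector has a large dot product with the query vector of 'it'.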

Is attention a context-based model?

How are attention maps obtained from the queries, keys, and values?
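A minimal pure-Python sketch of how an attention map could be computed, assuming standard scaled dot-product attention. The token list, the 2-d embeddings, and the identity projection matrices are hypothetical toy values chosen for illustration. In a trained model `W_Q`, `W_K`, and `W_V` are learned, so the identity matrices here are only placeholders.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m):
    return [list(row) for row in zip(*m)]


def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q, k, v):
    """Return (attention map, output) for query/key/value matrices."""
    d_k = len(k[0])
    # Raw scores: dot product of every query with every key, scaled by sqrt(d_k).
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    # Each row of the attention map is a probability distribution over tokens.
    weights = [softmax(row) for row in scores]
    # Each output row is the attention-weighted sum of the value vectors.
    output = matmul(weights, v)
    return weights, output


# Hypothetical toy inputs (not from a real model).
tokens = ["driver", "car", "it", "problem"]
x = [[1.0, 0.0], [0.0, 1.0], [0.2, 0.9], [0.5, 0.5]]
identity = [[1.0, 0.0], [0.0, 1.0]]
w_q, w_k, w_v = identity, identity, identity  # learned in practice

q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
weights, output = scaled_dot_product_attention(q, k, v)

# Row of the attention map for the query token "it".
for tok, w in zip(tokens, weights[tokens.index("it")]):
    print(f"it -> {tok}: {w:.3f}")
```

With these toy vectors, the query for "it" has its largest dot product with the key for "car", so "car" receives the highest weight in that row. This is a property of the hand-picked numbers, not a claim about how a real model resolves the reference.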

On what basis does attention assign higher weights to some input tokens than to others?