Great questions!

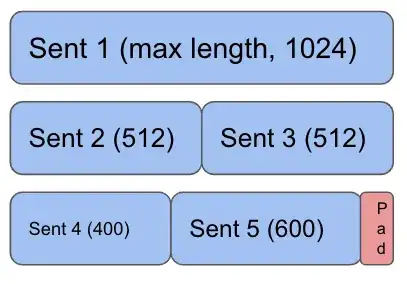

When you train on batches of padded inputs, the padding tokens occupy GPU memory just like real tokens, because every sequence in the batch is stored at the length of the longest one. In most models the padded positions are also computed and only masked out afterwards (for example in attention or in the loss). Padding therefore wastes both memory and compute, and the waste grows as sequence lengths within a batch vary more.
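
As a rough illustration of that waste, the sketch below counts how many slots of a batch padded to its longest sequence actually hold real tokens. The sequence lengths are invented for the example.

```python
def padding_stats(lengths):
    """Return (real_tokens, padded_slots, wasted_fraction) for one batch
    padded to the length of its longest sequence."""
    real = sum(lengths)
    padded = len(lengths) * max(lengths)
    return real, padded, (padded - real) / padded

# Hypothetical batch of four sequences with very different lengths.
real, padded, waste = padding_stats([5, 12, 7, 40])
print(real, padded, round(waste, 3))  # prints: 64 160 0.6
```

Here 60% of the stored positions are padding, all caused by one long sequence sharing a batch with short ones.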

However, PyTorch and TensorFlow both offer ways to reduce this waste.

Both frameworks can represent variable-length sequences without padding them. In PyTorch, `torch.nn.utils.rnn.pack_sequence` packs a list of variable-length tensors into a `PackedSequence`, which stores only the real time steps, and `pad_packed_sequence` converts a `PackedSequence` back into a padded tensor plus a tensor of lengths. PyTorch's recurrent modules (`nn.RNN`, `nn.LSTM`, `nn.GRU`) accept a `PackedSequence` directly. In TensorFlow, the `tf.RaggedTensor` class represents a batch of variable-length sequences, and `RaggedTensor.to_tensor()` converts it into a padded dense tensor when an operation requires one.

Here's an example of using PackedSequence in PyTorch:

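```python
import torch
import torch.nn as nn
from torch.nn.utils.rnn import pack_sequence, pad_packed_sequence

sentences = ['this is a short sentence', 'this is a longer sentence that requires padding']

# Convert each sentence to a 1-D tensor of token ids.
# Toy whitespace vocabulary for illustration; use your real tokenizer here.
vocab = {w: i for i, w in enumerate(sorted({w for s in sentences for w in s.split()}))}
sentences = [torch.tensor([vocab[w] for w in s.split()]) for s in sentences]

# nn.LSTM needs float features, so embed the ids first (sizes are arbitrary).
embedding = nn.Embedding(len(vocab), 32)
lstm = nn.LSTM(input_size=32, hidden_size=64)
embedded = [embedding(ids) for ids in sentences]  # each: (seq_len, 32)

# Pack the variable-length tensors into a PackedSequence (no padding stored).
packed = pack_sequence(embedded, enforce_sorted=False)

# Use the packed sequence in your model; nn.LSTM returns (output, (h_n, c_n)).
packed_output, (h_n, c_n) = lstm(packed)

# Unpack to a padded tensor only if a later layer needs one.
output, lengths = pad_packed_sequence(packed_output, batch_first=True)
```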

Here's an example of using RaggedTensor in TensorFlow:

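```python
import tensorflow as tf

sentences = ['this is a short sentence', 'this is a longer sentence that requires padding']

# Convert each sentence to a list of token ids.
# Toy whitespace vocabulary for illustration; use your real tokenizer here.
vocab = {w: i for i, w in enumerate(sorted({w for s in sentences for w in s.split()}))}
token_ids = [[vocab[w] for w in s.split()] for s in sentences]

# Create a ragged tensor from the nested Python lists: shape (2, None).
ragged = tf.ragged.constant(token_ids)

# Embed the ids and run the LSTM; tf.keras (Keras 2) layers accept ragged inputs.
embedding = tf.keras.layers.Embedding(input_dim=len(vocab), output_dim=32)
lstm = tf.keras.layers.LSTM(64, return_state=True)

# With return_state=True, an LSTM returns (output, final_h, final_c).
output, state_h, state_c = lstm(embedding(ragged))
```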

Using `PackedSequence` or `RaggedTensor` can be more memory-efficient than padding when sequence lengths vary a lot, because only the real tokens are stored. Additionally, as you mentioned, smart batching (putting sequences of similar length into the same batch) can reduce padding further.
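
Smart batching has several variants. The sketch below assumes one common version: shuffle the data, sort only within large chunks so neighbouring examples have similar lengths, cut each chunk into batches, then shuffle the batch order. The function name and the `chunk_factor` parameter are made up for this illustration.

```python
import random

def smart_batches(lengths, batch_size, chunk_factor=50, seed=0):
    """Return batches (lists of example indices) of similar-length examples.

    Sorting happens only inside chunks of batch_size * chunk_factor examples,
    so the overall data order keeps some randomness.
    """
    rng = random.Random(seed)
    indices = list(range(len(lengths)))
    rng.shuffle(indices)
    chunk = batch_size * chunk_factor
    batches = []
    for start in range(0, len(indices), chunk):
        # Sort this chunk by length so each batch holds similar lengths.
        block = sorted(indices[start:start + chunk], key=lambda i: lengths[i])
        batches.extend(block[i:i + batch_size] for i in range(0, len(block), batch_size))
    rng.shuffle(batches)  # avoid feeding batches in length order
    return batches

lengths = [5, 40, 7, 38, 6, 41, 8, 39]
for batch in smart_batches(lengths, batch_size=2):
    print([lengths[i] for i in batch])
```

Larger chunks give tighter length grouping (less padding) at the cost of less randomness in which examples share a batch.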

Regarding dynamic batching without sorting the whole dataset, one approach is bucketing. Each sequence is assigned to a bucket by length range (for example: up to 10 tokens, 11 to 20, more than 20), which takes a single pass over the data instead of a global sort. Batches are then drawn from within one bucket, each batch is padded only to the longest sequence it contains, and the order of batches is shuffled so training still sees the data in random order.
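
A minimal pure-Python sketch of that bucketing scheme, assuming examples are lists of token ids; the helper names and the `boundaries` format are hypothetical.

```python
import bisect
import random
from collections import defaultdict

def bucket_batches(lengths, boundaries, batch_size, seed=0):
    """Assign examples to length buckets, batch within buckets, shuffle batches.

    boundaries are sorted inclusive upper bounds: [10, 20] gives the buckets
    (<= 10), (11..20) and (> 20). No global sort of the dataset is needed.
    """
    rng = random.Random(seed)
    buckets = defaultdict(list)
    for idx, n in enumerate(lengths):
        buckets[bisect.bisect_left(boundaries, n)].append(idx)
    batches = []
    for members in buckets.values():
        rng.shuffle(members)  # random order inside each bucket
        batches.extend(members[i:i + batch_size] for i in range(0, len(members), batch_size))
    rng.shuffle(batches)  # random order of batches across buckets
    return batches

def pad_batch(seqs, pad_id=0):
    """Pad a batch only up to the longest sequence in that batch."""
    longest = max(len(s) for s in seqs)
    return [s + [pad_id] * (longest - len(s)) for s in seqs]
```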

There are also existing implementations in deep learning libraries. GluonNLP (for MXNet) provides `FixedBucketSampler`. In PyTorch, `torch.utils.data.DataLoader` does not bucket on its own, but it accepts a custom `batch_sampler` and `collate_fn`, which is the usual way to add bucketing or per-batch dynamic padding. TensorFlow's `tf.data.Dataset` API offers `bucket_by_sequence_length` and `padded_batch`. Among third-party packages, older torchtext releases shipped a `BucketIterator`, and tensorflow-datasets provides ready-made datasets that plug into `tf.data` pipelines.
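
For PyTorch, the length-aware batch sampler is usually the piece you write yourself. The sketch below is one way to do dynamic batching without any sorting: it walks the data in shuffled order and closes a batch once its padded size (batch size times longest sequence) would exceed a token budget. `TokenBudgetBatchSampler` and `max_tokens` are hypothetical names, not PyTorch APIs, and the class imports nothing from PyTorch because `DataLoader` accepts any iterable of index lists as `batch_sampler`.

```python
import random

class TokenBudgetBatchSampler:
    """Hypothetical helper: yields lists of indices whose padded size
    (len(batch) * longest sequence in the batch) stays within max_tokens.

    Examples are taken in shuffled order, so the batch size shrinks when long
    sequences appear and grows when they are short.
    """

    def __init__(self, lengths, max_tokens, seed=0):
        if max(lengths) > max_tokens:
            raise ValueError("a single sequence exceeds max_tokens")
        self.lengths = lengths
        self.max_tokens = max_tokens
        self.rng = random.Random(seed)

    def __iter__(self):
        order = list(range(len(self.lengths)))
        self.rng.shuffle(order)  # a new order on every pass (epoch)
        batch, longest = [], 0
        for idx in order:
            new_longest = max(longest, self.lengths[idx])
            if batch and new_longest * (len(batch) + 1) > self.max_tokens:
                yield batch  # adding idx would exceed the budget
                batch, new_longest = [], self.lengths[idx]
            batch.append(idx)
            longest = new_longest
        if batch:
            yield batch

lengths = [5, 40, 7, 38, 6, 41, 8, 39]
for batch in TokenBudgetBatchSampler(lengths, max_tokens=64):
    print(batch, [lengths[i] for i in batch])
```

With PyTorch you would pass it as `DataLoader(dataset, batch_sampler=sampler, collate_fn=pad_fn)`, where `pad_fn` is a collate function (yours to write) that pads each batch to its own longest sequence. Because there is no sorting, batches mix lengths more than with bucketing; combining the two (bucket first, then apply a token budget per bucket) is a common compromise.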

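Some frameworks and serving tools also provide dynamic batching out of the box. For inference, NVIDIA's Triton Inference Server can group incoming requests into batches on the fly (TensorRT itself is an inference optimizer rather than a training library), and Google's Lingvo framework buckets training examples by length in its input pipelines. Both aim to reduce wasted GPU memory and compute.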
