Thank Abhishek. I've figure it out! Here are my experiments.

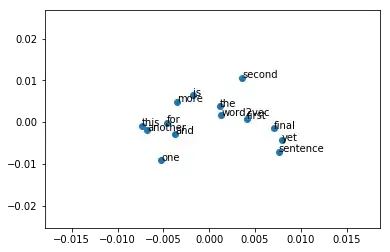

1). we plot a easy example:

from gensim.models import Word2Vec

from sklearn.decomposition import PCA

from matplotlib import pyplot

# define training data

sentences = [['this', 'is', 'the', 'first', 'sentence', 'for', 'word2vec'],

['this', 'is', 'the', 'second', 'sentence'],

['yet', 'another', 'sentence'],

['one', 'more', 'sentence'],

['and', 'the', 'final', 'sentence']]

# train model

model_1 = Word2Vec(sentences, size=300, min_count=1)

# fit a 2d PCA model to the vectors

X = model_1[model_1.wv.vocab]

pca = PCA(n_components=2)

result = pca.fit_transform(X)

# create a scatter plot of the projection

pyplot.scatter(result[:, 0], result[:, 1])

words = list(model_1.wv.vocab)

for i, word in enumerate(words):

pyplot.annotate(word, xy=(result[i, 0], result[i, 1]))

pyplot.show()

From the above plots, we can see that easy sentences cannot distinguish different words' meaning by distances.

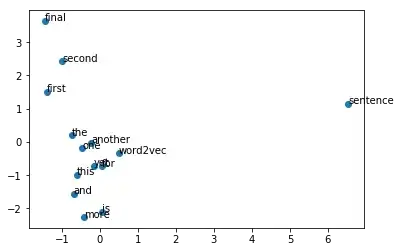

2). Load pre-trained word embedding:

from gensim.models import KeyedVectors

model_2 = Word2Vec(size=300, min_count=1)

model_2.build_vocab(sentences)

total_examples = model_2.corpus_count

model = KeyedVectors.load_word2vec_format("glove.6B.300d.txt", binary=False)

model_2.build_vocab([list(model.vocab.keys())], update=True)

model_2.intersect_word2vec_format("glove.6B.300d.txt", binary=False, lockf=1.0)

model_2.train(sentences, total_examples=total_examples, epochs=model_2.iter)

# fit a 2d PCA model to the vectors

X = model_2[model_1.wv.vocab]

pca = PCA(n_components=2)

result = pca.fit_transform(X)

# create a scatter plot of the projection

pyplot.scatter(result[:, 0], result[:, 1])

words = list(model_1.wv.vocab)

for i, word in enumerate(words):

pyplot.annotate(word, xy=(result[i, 0], result[i, 1]))

pyplot.show()

From the above figure, we can see that word embeddings are more meaningful.

Hope this answer will be helpful.