I'm planning to implement a lexical analyzer that either simulates an NFA or runs a DFA over the input text. The trouble is that the input may arrive in small chunks, and there may not be enough memory to hold one very long token in its entirety.

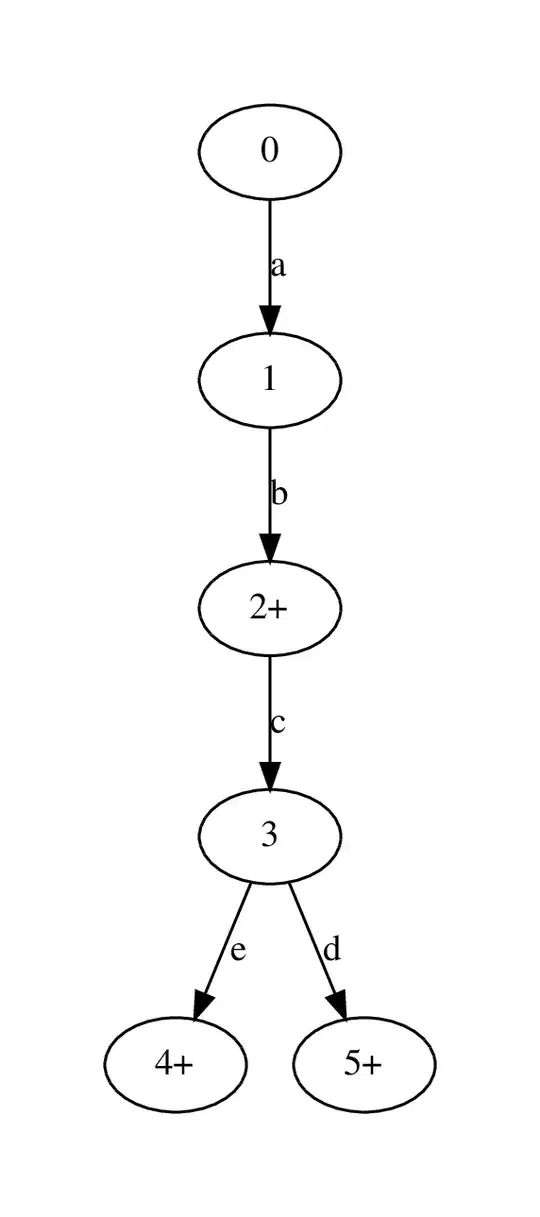

Let's assume I have three tokens, "ab", "abcd" and "abce". The NFA I obtained is this:

And the DFA I obtained is this:

Now, if the input is "abcf", the correct action according to the maximal munch rule would be to emit the token "ab" and then produce a lexer error token. However, both the DFA and the NFA still have outgoing transitions after "ab" has been read. The maximal munch rule therefore makes the lexer keep reading past "ab" and consume the "c" as well, only to get stuck at "f".
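To make the problem concrete, here is a minimal Python sketch of the DFA as a transition table. The names `DFA`, `ACCEPTING` and `run_until_stuck` are hypothetical. The accepting state for "abce" is numbered 5 as an assumption, since only 2+ and 4+ are named above. If the lexer follows transitions for as long as they exist, it consumes "abc" and stops in the non-accepting state 3:

```python
# Hypothetical transition table for the tokens "ab", "abcd" and "abce".
# States 2, 4 and 5 accept; numbering state 5 ("abce") is an assumption.
DFA = {
    (0, "a"): 1,
    (1, "b"): 2,
    (2, "c"): 3,
    (3, "d"): 4,
    (3, "e"): 5,
}
ACCEPTING = {2: "ab", 4: "abcd", 5: "abce"}


def run_until_stuck(text):
    """Follow transitions greedily; return (final state, characters consumed)."""
    state, pos = 0, 0
    while pos < len(text) and (state, text[pos]) in DFA:
        state = DFA[(state, text[pos])]
        pos += 1
    return state, pos


state, consumed = run_until_stuck("abcf")
print(state, consumed, state in ACCEPTING)  # 3 3 False
```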

How do maximal munch lexers solve this issue? Do they store the entire token in memory and backtrack from "abc" to "ab"?
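For comparison, a widely used approach, and roughly what table-driven scanner generators such as flex do, works as follows. The scanner remembers the last position at which the DFA was in an accepting state, keeps running until the DFA has no transition, and then backs up to that position. The sketch below uses a hypothetical `ChunkedLexer` class with `feed`/`finish` methods and the same hypothetical transition table as before. It keeps only the text read since the start of the current token. Once a token is emitted, the consumed prefix of the buffer is discarded and scanning restarts from state 0 on the remainder.

```python
# Hypothetical transition table; numbering state 5 ("abce") is an assumption.
DFA = {(0, "a"): 1, (1, "b"): 2, (2, "c"): 3, (3, "d"): 4, (3, "e"): 5}
ACCEPTING = {2: "ab", 4: "abcd", 5: "abce"}


class ChunkedLexer:
    """Maximal munch with backtracking to the last accepting position (sketch)."""

    def __init__(self, dfa, accepting):
        self.dfa, self.accepting = dfa, accepting
        self.buf = ""            # text read since the start of the current token
        self.state = 0           # DFA state after reading self.buf[:self.pos]
        self.pos = 0
        self.last_accept = None  # (length, token name) of the longest match so far

    def feed(self, chunk):
        self.buf += chunk
        return self._scan(at_eof=False)

    def finish(self):
        return self._scan(at_eof=True)

    def _scan(self, at_eof):
        out = []
        while True:
            if self.pos < len(self.buf):
                nxt = self.dfa.get((self.state, self.buf[self.pos]))
                if nxt is not None:
                    self.state, self.pos = nxt, self.pos + 1
                    if nxt in self.accepting:
                        self.last_accept = (self.pos, self.accepting[nxt])
                    continue
                # No transition: fall through and emit a token.
            elif not at_eof or not self.buf:
                return out  # need more input, or nothing is left at all
            if self.last_accept is not None:
                length, name = self.last_accept  # back up to the longest match
            else:
                length, name = 1, "ERROR"        # no match: skip one character
            out.append((name, self.buf[:length]))
            self.buf = self.buf[length:]         # drop the consumed prefix
            self.state, self.pos, self.last_accept = 0, 0, None


lexer = ChunkedLexer(DFA, ACCEPTING)
tokens = lexer.feed("ab") + lexer.feed("cf") + lexer.finish()
print(tokens)  # [('ab', 'ab'), ('ERROR', 'c'), ('ERROR', 'f')]
```

In this sketch, the "c" is simply scanned a second time after backing up. Text leaves the buffer as soon as a token covering it has been emitted.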

One possibility would be to run the DFA with a "generation index", keeping potentially several generations, and several branches within each generation, active at the same time. So, for "abcf", the DFA would go from:

{0(gen=0,read=0..0)},

read "a",

{1(gen=0,read=0..1)},

read "b",

{2+(gen=0,read=0..2,frozen), 2+(gen=0,read=0..2), 0(gen=1,read=2..2)},

read "c",

{2+(gen=0,read=0..2,frozen), 3(gen=0,read=0..3)},

read "f",

{2+(gen=0,read=0..2,frozen)}.

Then the lexer would report a match for state 2+ (the token "ab") and, since no branch can continue, would then report an error. I'm not sure how well this idea would work...
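The branch-set simulation traced above can be sketched in Python as follows. This is one possible reading of the idea rather than a definitive implementation. `Branch`, `fmt`, `step` and `run` are hypothetical names. The rule applied when a branch enters an accepting state is inferred from the traces: it yields a frozen copy, a live copy, and a new generation starting at state 0. Running it on "abcf" reproduces the sets listed above:

```python
from typing import NamedTuple

# Hypothetical transition table; numbering state 5 ("abce") is an assumption.
DFA = {(0, "a"): 1, (1, "b"): 2, (2, "c"): 3, (3, "d"): 4, (3, "e"): 5}
ACCEPTING = {2, 4, 5}


class Branch(NamedTuple):
    state: int
    gen: int
    start: int
    end: int
    frozen: bool = False

    def __str__(self):
        plus = "+" if self.state in ACCEPTING else ""
        tag = ",frozen" if self.frozen else ""
        return f"{self.state}{plus}(gen={self.gen},read={self.start}..{self.end}{tag})"


def fmt(branches):
    return "{" + ", ".join(str(b) for b in branches) + "}"


def step(branches, ch, pos):
    """Advance all branches over ch; pos is the input offset just after ch."""
    result = []
    for b in branches:
        if b.frozen:
            result.append(b)  # frozen matches are carried along unchanged
            continue
        nxt = DFA.get((b.state, ch))
        if nxt is None:
            continue  # no transition: the branch dies
        if nxt in ACCEPTING:
            result.append(Branch(nxt, b.gen, b.start, pos, frozen=True))
            result.append(Branch(nxt, b.gen, b.start, pos))
            result.append(Branch(0, b.gen + 1, pos, pos))  # next token may start here
        else:
            result.append(Branch(nxt, b.gen, b.start, pos))
    return result


def run(text):
    """Return the branch set before any input and after each character."""
    branches = [Branch(0, 0, 0, 0)]
    history = [branches]
    for pos, ch in enumerate(text, start=1):
        branches = step(branches, ch, pos)
        history.append(branches)
    return history


for sets in run("abcf"):
    print(fmt(sets))
```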

For "abcd", it would work like this:

{0(gen=0,read=0..0)},

read "a",

{1(gen=0,read=0..1)},

read "b",

{2+(gen=0,read=0..2,frozen), 2+(gen=0,read=0..2), 0(gen=1,read=2..2)},

read "c",

{2+(gen=0,read=0..2,frozen), 3(gen=0,read=0..3)},

read "d",

{2+(gen=0,read=0..2,frozen), 4+(gen=0,read=0..4,frozen), 4+(gen=0,read=0..4), 0(gen=1,read=4..4)}.

Of these, it's possible to drop the first (there is a longer match) and the third (it has no outgoing transitions), leaving:

{4+(gen=0,read=0..4,frozen), 0(gen=1,read=4..4)}.

Then the lexer would indicate "match: 4+" and continue reading input from state 0 using generation index 1.
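The two pruning rules used above could be sketched like this. The first rule drops a frozen match when the same generation has a longer one from the same start. The second drops a live branch whose state has no outgoing transitions. `Branch`, `has_outgoing` and `prune` are hypothetical names, and the input is the branch set listed after reading "d":

```python
from typing import NamedTuple

# Hypothetical transition table; numbering state 5 ("abce") is an assumption.
DFA = {(0, "a"): 1, (1, "b"): 2, (2, "c"): 3, (3, "d"): 4, (3, "e"): 5}


class Branch(NamedTuple):
    state: int
    gen: int
    start: int
    end: int
    frozen: bool = False


def has_outgoing(state):
    return any(src == state for (src, _ch) in DFA)


def prune(branches):
    # Longest frozen match per (generation, start offset).
    longest = {}
    for b in branches:
        if b.frozen:
            key = (b.gen, b.start)
            longest[key] = max(longest.get(key, b.end), b.end)
    kept = []
    for b in branches:
        if b.frozen and b.end < longest[(b.gen, b.start)]:
            continue  # a longer match exists: maximal munch discards this one
        if not b.frozen and not has_outgoing(b.state):
            continue  # live branch that can never move again
        kept.append(b)
    return kept


after_d = [
    Branch(2, 0, 0, 2, frozen=True),
    Branch(4, 0, 0, 4, frozen=True),
    Branch(4, 0, 0, 4),
    Branch(0, 1, 4, 4),
]
print(prune(after_d))
# [Branch(state=4, gen=0, start=0, end=4, frozen=True), Branch(state=0, gen=1, start=4, end=4, frozen=False)]
```

This sketch implements only those two rules. A complete scheme would presumably also need to discard later-generation branches that started at an offset a longer match has since covered, such as the generation-1 branch started at offset 2. In this example that branch had already died on "c", so the question did not arise.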

Is this idea of mine, running DFAs nondeterministically, how maximal munch lexical analyzers work?