I have been studying Andrew Ng's Coursera course on Deep Learning. In it, he explains that we compute the activations during the forward pass, and the derivatives $\dfrac{dL}{dz}$, $\dfrac{dL}{dw}$, and $\dfrac{dL}{db}$ during the backward pass.

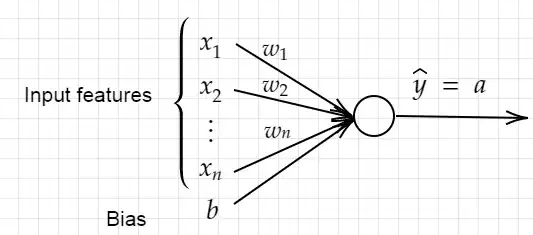

Here, $x_{1}, x_{2}, \dots, x_{n}$ are the inputs to the node.

$b$ is the bias.

$w_{1}, w_{2}, \dots, w_{n}$ are the weights associated with the inputs.

$z = w_{1} x_{1} + w_{2} x_{2} + \dots + w_{n} x_{n} + b$

$\hat{y}$ (or $a$) is the node's activation, where $a = \sigma(z) = \dfrac{1}{1 + e^{-z}}$ is the sigmoid activation function.

$L(a, y) = -\left[ y \log a + (1-y) \log(1-a) \right]$ is our loss function.
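The forward pass for this single node can be sketched in plain Python. This is a minimal illustration, assuming a list of weights and a scalar bias; the helper names `sigmoid`, `forward`, and `loss` are hypothetical, not from the course.

```python
import math

def sigmoid(z):
    """Logistic sigmoid: a = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(w, x, b):
    """Forward pass of one sigmoid node: z = w . x + b, a = sigmoid(z)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    a = sigmoid(z)
    return z, a

def loss(a, y):
    """Binary cross-entropy: L(a, y) = -(y log a + (1 - y) log(1 - a))."""
    return -(y * math.log(a) + (1 - y) * math.log(1 - a))

# Hypothetical example values: z = 0.5*1.0 - 0.25*2.0 + 0.0 = 0.0, so a = 0.5
z, a = forward([0.5, -0.25], [1.0, 2.0], 0.0)
print(z, a, loss(a, 1))  # loss is -log(0.5) = ln 2
```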

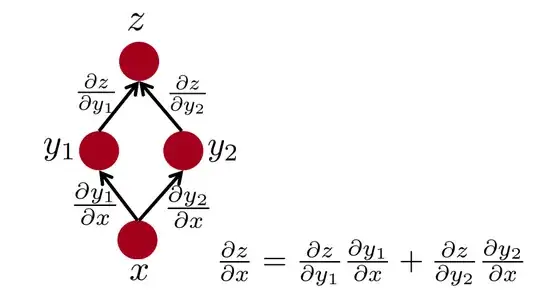

The way Andrew calculates the derivatives is as follows:

$ \begin{gathered} \dfrac{dL}{dw} \ =\ \dfrac{dL}{dz} \cdot \dfrac{dz}{dw}\\ \\ \dfrac{dL}{dz} \ =\ \dfrac{dL}{da} \cdot \dfrac{da}{dz}\\ \end{gathered}$
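For the sigmoid node and cross-entropy loss defined above, each factor in this chain has a closed form. The following is the standard derivation, written out for reference:

$\begin{aligned}
\dfrac{dL}{da} &= -\dfrac{y}{a} + \dfrac{1-y}{1-a} \\
\dfrac{da}{dz} &= \sigma(z)\left(1-\sigma(z)\right) = a(1-a) \\
\dfrac{dL}{dz} &= \left(-\dfrac{y}{a} + \dfrac{1-y}{1-a}\right) a(1-a) = -y(1-a) + (1-y)a = a - y \\
\dfrac{dz}{dw_{i}} &= x_{i}, \qquad \dfrac{dz}{db} = 1 \\
\dfrac{dL}{dw_{i}} &= (a-y)\, x_{i}, \qquad \dfrac{dL}{db} = a - y
\end{aligned}$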

We back-propagate to calculate:

1. $\dfrac{dL}{da}$ (one step back)
2. $\dfrac{da}{dz}$ and $\dfrac{dL}{dz} = \dfrac{dL}{da} \cdot \dfrac{da}{dz}$ (two steps back)
3. $\dfrac{dz}{dw}$ and $\dfrac{dL}{dw} = \dfrac{dL}{dz} \cdot \dfrac{dz}{dw}$ (three steps back)
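The three backward steps can be sketched for one sigmoid node in plain Python. This is a minimal sketch, not the course's code: `forward_backward` and `numerical_grad` are hypothetical names, and the central finite-difference check is an added sanity test, not part of back-propagation itself.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward_backward(w, x, b, y):
    """Forward pass, then the three backward steps for one sigmoid node."""
    # Forward pass: z and a are kept for use in the backward pass
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    a = sigmoid(z)
    L = -(y * math.log(a) + (1 - y) * math.log(1 - a))

    # One step back: dL/da
    dL_da = -y / a + (1 - y) / (1 - a)
    # Two steps back: da/dz, then dL/dz = dL/da * da/dz (simplifies to a - y)
    da_dz = a * (1 - a)
    dL_dz = dL_da * da_dz
    # Three steps back: dz/dw_i = x_i and dz/db = 1
    dL_dw = [dL_dz * xi for xi in x]
    dL_db = dL_dz
    return L, dL_dw, dL_db

def numerical_grad(w, x, b, y, eps=1e-6):
    """Central finite differences of L with respect to each w_i (sanity check)."""
    grads = []
    for i in range(len(w)):
        wp = list(w)
        wp[i] += eps
        wm = list(w)
        wm[i] -= eps
        Lp = forward_backward(wp, x, b, y)[0]
        Lm = forward_backward(wm, x, b, y)[0]
        grads.append((Lp - Lm) / (2 * eps))
    return grads

# Hypothetical example values
L, dw, db = forward_backward([0.3, -0.2], [1.5, 0.5], 0.1, 1)
print(dw, numerical_grad([0.3, -0.2], [1.5, 0.5], 0.1, 1))
```

The analytic and numerical gradients should agree to several decimal places.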

My question is: why can't we calculate these derivatives during the forward pass itself, like so?

$\dfrac{dL}{dw} = \dfrac{dL}{da} \cdot \dfrac{da}{dz} \cdot \dfrac{dz}{dw}$

Step 1: Calculate $z$ as well as $\dfrac{dz}{dw}$.

Step 2: Calculate $a$ as well as $\dfrac{da}{dz}$ and $\dfrac{da}{dw} = \dfrac{da}{dz} \cdot \dfrac{dz}{dw}$.

Step 3: Calculate $L$ as well as $\dfrac{dL}{da}$ and $\dfrac{dL}{dw} = \dfrac{dL}{da} \cdot \dfrac{da}{dw}$.
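The proposed Steps 1 to 3 can be sketched for the same single node, carrying the derivatives with respect to every $w_i$ forward alongside the values. This is a minimal sketch under the definitions above; `forward_with_derivatives` is a hypothetical name.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward_with_derivatives(w, x, b, y):
    """Compute each value together with its derivative w.r.t. every w_i."""
    # Step 1: z and dz/dw (dz/dw_i = x_i)
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    dz_dw = list(x)
    # Step 2: a, da/dz, and da/dw = da/dz * dz/dw
    a = sigmoid(z)
    da_dz = a * (1 - a)
    da_dw = [da_dz * d for d in dz_dw]
    # Step 3: L, dL/da, and dL/dw = dL/da * da/dw
    L = -(y * math.log(a) + (1 - y) * math.log(1 - a))
    dL_da = -y / a + (1 - y) / (1 - a)
    dL_dw = [dL_da * d for d in da_dw]
    return L, dL_dw

# Hypothetical example values
print(forward_with_derivatives([0.3, -0.2], [1.5, 0.5], 0.1, 0))
```

For a single node, this yields the same $\dfrac{dL}{dw}$ as back-propagating, since it multiplies the same three factors in a different order.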

Is there a reason why we take the back-prop approach, rather than calculating both sets of quantities (the values and their derivatives) together during forward prop?

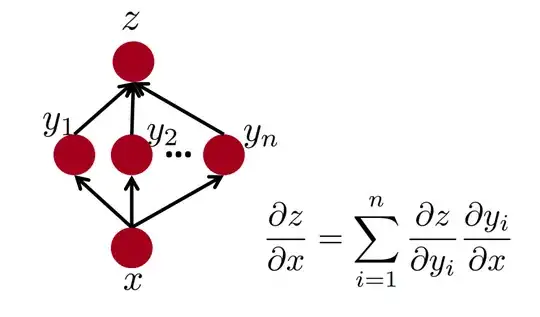

EDIT: For a network with $n$ layers, we can calculate the weight derivative for layer $j$ as:

$\begin{aligned}
\dfrac{dL}{dw^{[j]}} &= \dfrac{dL}{da^{[n]}} \cdot \dfrac{da^{[n]}}{dz^{[n]}} \cdot \dfrac{dz^{[n]}}{da^{[n-1]}} \cdots \dfrac{dz^{[j+1]}}{da^{[j]}} \cdot \underbrace{\dfrac{da^{[j]}}{dz^{[j]}} \cdot \dfrac{dz^{[j]}}{dw^{[j]}}}_{\text{combine the two}} \\
&= \dfrac{dL}{da^{[n]}} \cdot \dfrac{da^{[n]}}{dz^{[n]}} \cdot \dfrac{dz^{[n]}}{da^{[n-1]}} \cdots \underbrace{\dfrac{dz^{[j+1]}}{da^{[j]}} \cdot \dfrac{da^{[j]}}{dw^{[j]}}}_{\text{combine the two}} \\
&\;\;\vdots \\
&= \dfrac{dL}{da^{[n]}} \cdot \dfrac{da^{[n]}}{dw^{[j]}}
\end{aligned}$

As can be seen, any layer's weight derivative can be calculated without the need to backtrack.
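The EDIT can be made concrete with a minimal sketch, assuming a hypothetical deep network with one sigmoid node per layer, one scalar weight per layer, no biases, and the same cross-entropy loss. `forward_mode` carries $\dfrac{da^{[k]}}{dw^{[j]}}$ for every $j \le k$ while moving forward, as in the EDIT; `backward_mode` is ordinary back-propagation over cached activations. Both function names are illustrative.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward_mode(ws, x, y):
    """Carry d a^[k] / d w^[j] for every j <= k while moving forward."""
    a = x
    da_dw = []  # da_dw[j] holds d a^[k] / d w^[j] for the current layer k
    for w in ws:
        a_new = sigmoid(w * a)
        s = a_new * (1 - a_new)              # d a^[k] / d z^[k]
        # Earlier weights: chain through d z^[k] / d a^[k-1] = w^[k]
        da_dw = [s * w * d for d in da_dw]
        # This layer's weight: d z^[k] / d w^[k] = a^[k-1]
        da_dw.append(s * a)
        a = a_new
    dL_da = -y / a + (1 - y) / (1 - a)
    return [dL_da * d for d in da_dw]

def backward_mode(ws, x, y):
    """Ordinary backprop: cache activations, then walk the layers in reverse."""
    acts = [x]
    for w in ws:
        acts.append(sigmoid(w * acts[-1]))
    a = acts[-1]
    dL_da = -y / a + (1 - y) / (1 - a)
    grads = [0.0] * len(ws)
    for k in reversed(range(len(ws))):
        a_k = acts[k + 1]
        dL_dz = dL_da * a_k * (1 - a_k)
        grads[k] = dL_dz * acts[k]           # d z^[k] / d w^[k] = a^[k-1]
        dL_da = dL_dz * ws[k]                # d z^[k] / d a^[k-1] = w^[k]
    return grads

# Hypothetical 3-layer example
print(forward_mode([0.5, -1.2, 0.8], 0.7, 1))
print(backward_mode([0.5, -1.2, 0.8], 0.7, 1))
```

Both functions return the same gradients. In this sketch, `forward_mode` updates every carried derivative at each layer, so its work grows roughly with the square of the depth, while `backward_mode` visits each layer once on the way back.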

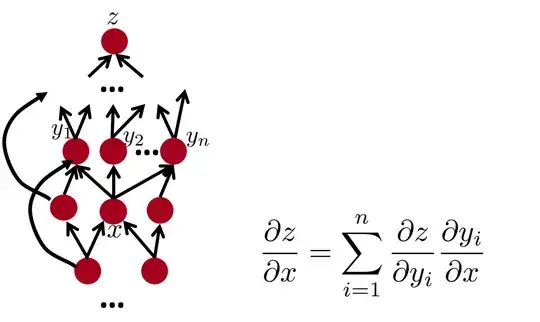

Edit 2: Let's take a 2-layer network:

$a^{[ 0]} =\left[\begin{smallmatrix} x_{1}\\ x_{2}\\ \vdots \\ x_{n} \end{smallmatrix}\right]$

$a^{[ 1]} =\left[\begin{smallmatrix} a^{[ 1]}_{1}\\ a^{[ 1]}_{2}\\ \vdots \\ a^{[ 1]}_{m} \end{smallmatrix}\right]$

$w^{[1]} = \left[\begin{smallmatrix} n \text{ weights for layer 1 node 1} \\ n \text{ weights for layer 1 node 2} \\ \vdots \\ n \text{ weights for layer 1 node } m \end{smallmatrix}\right] = \left[\begin{smallmatrix} w^{[1]}_{1} \\ w^{[1]}_{2} \\ \vdots \\ w^{[1]}_{m} \end{smallmatrix}\right] = \left[\begin{smallmatrix} w^{[1]}_{1(1)} & w^{[1]}_{1(2)} & \dots & w^{[1]}_{1(n)} \\ w^{[1]}_{2(1)} & w^{[1]}_{2(2)} & \dots & w^{[1]}_{2(n)} \\ \vdots & \vdots & \ddots & \vdots \\ w^{[1]}_{m(1)} & w^{[1]}_{m(2)} & \dots & w^{[1]}_{m(n)} \end{smallmatrix}\right]$

Step 1: For the first layer, $z^{[1]} = w^{[1]} a^{[0]} = \left[\begin{smallmatrix} z^{[1]}_{1} \\ z^{[1]}_{2} \\ \vdots \\ z^{[1]}_{m} \end{smallmatrix}\right]$

$a^{[ 1]} =\sigma \left( z^{[ 1]}\right) =\left[\begin{smallmatrix} \sigma \left( z^{[ 1]}_{1}\right)\\ \sigma \left( z^{[ 1]}_{2}\right)\\ \vdots \\ \sigma \left( z^{[ 1]}_{m}\right) \end{smallmatrix}\right]$

$\dfrac{\partial a^{[1]}}{\partial w^{[1]}} = \left[\begin{smallmatrix} \dfrac{\partial a}{\partial w} \text{ for layer 1 node 1} \\ \dfrac{\partial a}{\partial w} \text{ for layer 1 node 2} \\ \vdots \\ \dfrac{\partial a}{\partial w} \text{ for layer 1 node } m \end{smallmatrix}\right] = \left[\begin{smallmatrix} \dfrac{\partial a^{[1]}_{1}}{\partial w^{[1]}_{1}} \\ \dfrac{\partial a^{[1]}_{2}}{\partial w^{[1]}_{2}} \\ \vdots \\ \dfrac{\partial a^{[1]}_{m}}{\partial w^{[1]}_{m}} \end{smallmatrix}\right]$

$= \left[\begin{smallmatrix} \dfrac{\partial a^{[1]}_{1}}{\partial w^{[1]}_{1(1)}} & \dfrac{\partial a^{[1]}_{1}}{\partial w^{[1]}_{1(2)}} & \dots & \dfrac{\partial a^{[1]}_{1}}{\partial w^{[1]}_{1(n)}} \\ \dfrac{\partial a^{[1]}_{2}}{\partial w^{[1]}_{2(1)}} & \dfrac{\partial a^{[1]}_{2}}{\partial w^{[1]}_{2(2)}} & \dots & \dfrac{\partial a^{[1]}_{2}}{\partial w^{[1]}_{2(n)}} \\ \vdots & \vdots & \ddots & \vdots \\ \dfrac{\partial a^{[1]}_{m}}{\partial w^{[1]}_{m(1)}} & \dfrac{\partial a^{[1]}_{m}}{\partial w^{[1]}_{m(2)}} & \dots & \dfrac{\partial a^{[1]}_{m}}{\partial w^{[1]}_{m(n)}} \end{smallmatrix}\right] = \left[\begin{smallmatrix} a^{[1]}_{1}\left(1-a^{[1]}_{1}\right) x_{1} & a^{[1]}_{1}\left(1-a^{[1]}_{1}\right) x_{2} & \dots & a^{[1]}_{1}\left(1-a^{[1]}_{1}\right) x_{n} \\ a^{[1]}_{2}\left(1-a^{[1]}_{2}\right) x_{1} & a^{[1]}_{2}\left(1-a^{[1]}_{2}\right) x_{2} & \dots & a^{[1]}_{2}\left(1-a^{[1]}_{2}\right) x_{n} \\ \vdots & \vdots & \ddots & \vdots \\ a^{[1]}_{m}\left(1-a^{[1]}_{m}\right) x_{1} & a^{[1]}_{m}\left(1-a^{[1]}_{m}\right) x_{2} & \dots & a^{[1]}_{m}\left(1-a^{[1]}_{m}\right) x_{n} \end{smallmatrix}\right]$
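The layer-1 matrix above can be computed and checked numerically. This is a minimal sketch in plain Python, assuming $w^{[1]}$ is stored as a list of $m$ rows (row $i$ holds node $i$'s $n$ weights) and, as in the equations above, no bias term; `layer1_forward` is a hypothetical name.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer1_forward(W1, x):
    """z^[1] = w^[1] a^[0], a^[1] = sigmoid(z^[1]), plus d a_i / d w_{i(k)}."""
    z1 = [sum(wik * xk for wik, xk in zip(row, x)) for row in W1]
    a1 = [sigmoid(z) for z in z1]
    # Entry (i, k) is a_i (1 - a_i) x_k, matching the m-by-n matrix above
    da1_dW1 = [[ai * (1 - ai) * xk for xk in x] for ai in a1]
    return a1, da1_dW1

# Hypothetical example with m = 2 nodes and n = 3 inputs
a1, J = layer1_forward([[0.2, -0.4, 0.1], [0.7, 0.3, -0.5]], [1.0, 0.5, -2.0])
print(a1)
print(J)
```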

Step 2: Moving to the second layer, all the derivatives from the first layer are passed on to the second layer, and using them we calculate:

$\dfrac{\partial a^{[2]}}{\partial w^{[1]}} = \dfrac{\partial a^{[2]}}{\partial z^{[2]}} \cdot \dfrac{\partial z^{[2]}}{\partial a^{[1]}} \cdot \dfrac{\partial a^{[1]}}{\partial w^{[1]}} = a^{[2]}\left(1-a^{[2]}\right) \cdot w^{[2]} \cdot \dfrac{\partial a^{[1]}}{\partial w^{[1]}}$

$\dfrac{\partial L}{\partial w^{[ 1]}} =\dfrac{\partial L}{\partial a^{[ 2]}} \cdot \dfrac{\partial a^{[ 2]}}{\partial w^{[ 1]}}$
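The two-layer procedure can be sketched end to end and compared with conventional back-propagation. This is a minimal sketch, assuming a hypothetical network with a single output node (so $w^{[2]}$ is a list of $m$ weights), no biases, sigmoid activations, and the cross-entropy loss from the top of the question. `grads_forward` follows Steps 1 and 2 above; `grads_backward` is the usual backward pass. Both names are illustrative.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def grads_forward(W1, w2, x, y):
    """Carry da^[1]/dw^[1] forward, then da^[2]/dw^[1], then dL/dw^[1]."""
    # Step 1: layer 1 values and d a_i / d w_{i(k)} = a_i (1 - a_i) x_k
    a1 = [sigmoid(sum(w * xk for w, xk in zip(row, x))) for row in W1]
    da1_dW1 = [[ai * (1 - ai) * xk for xk in x] for ai in a1]
    # Step 2: single output node, z^[2] = w^[2] . a^[1]
    a2 = sigmoid(sum(wi * ai for wi, ai in zip(w2, a1)))
    s2 = a2 * (1 - a2)                                  # d a^[2] / d z^[2]
    # d a^[2] / d w_{i(k)} = s2 * w2_i * d a_i / d w_{i(k)}
    da2_dW1 = [[s2 * w2[i] * d for d in da1_dW1[i]] for i in range(len(W1))]
    dL_da2 = -y / a2 + (1 - y) / (1 - a2)
    return [[dL_da2 * d for d in row] for row in da2_dW1]

def grads_backward(W1, w2, x, y):
    """Conventional backprop for the same network."""
    a1 = [sigmoid(sum(w * xk for w, xk in zip(row, x))) for row in W1]
    a2 = sigmoid(sum(wi * ai for wi, ai in zip(w2, a1)))
    dz2 = a2 - y                                        # dL/dz^[2] for sigmoid + cross-entropy
    dz1 = [w2[i] * dz2 * a1[i] * (1 - a1[i]) for i in range(len(a1))]
    return [[dz1_i * xk for xk in x] for dz1_i in dz1]

# Hypothetical example with m = 2 hidden nodes and n = 3 inputs
W1 = [[0.2, -0.4, 0.1], [0.7, 0.3, -0.5]]
print(grads_forward(W1, [0.6, -0.9], [1.0, 0.5, -2.0], 1))
print(grads_backward(W1, [0.6, -0.9], [1.0, 0.5, -2.0], 1))
```

Both functions should print the same $m \times n$ matrix $\dfrac{\partial L}{\partial w^{[1]}}$.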

Image credits: https://www.mathcha.io