This question is taken from the book *Neural Networks and Deep Learning* by Michael Nielsen.

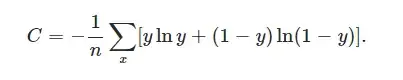

The question: In a single-neuron network, it is argued that the cross-entropy is small if σ(z) ≈ y for all training inputs. The argument relied on y being equal to either 0 or 1. This is usually true in classification problems, but for other problems (e.g., regression problems) y can sometimes take values intermediate between 0 and 1. Show that the cross-entropy is still minimized when σ(z) = y for all training inputs. When this is the case, the cross-entropy has the value:

C is the cost function

σ is the sigmoid function

y is the desired (target) output of the network

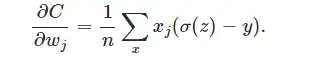
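The "show that" part can be sketched with ordinary calculus. This assumes Nielsen's single-neuron cross-entropy, an average over the n training inputs x, and treats each input's output a = σ(z) as a free variable in (0, 1) with 0 < y < 1:

$$
\begin{aligned}
C &= -\frac{1}{n}\sum_x \big[\, y\ln a + (1-y)\ln(1-a) \,\big], \qquad a = \sigma(z), \\
\frac{\partial}{\partial a}\Big(-\big[y\ln a + (1-y)\ln(1-a)\big]\Big) &= -\frac{y}{a} + \frac{1-y}{1-a} = \frac{a-y}{a(1-a)}, \\
\frac{\partial^2}{\partial a^2}\Big(-\big[y\ln a + (1-y)\ln(1-a)\big]\Big) &= \frac{y}{a^2} + \frac{1-y}{(1-a)^2} > 0.
\end{aligned}
$$

Each per-input term is therefore strictly convex in a, with its only stationary point at a = y. Since each term depends only on its own input's output, making σ(z) = y for every input minimizes every term at once, and substituting a = y gives

$$
C = -\frac{1}{n}\sum_x \big[\, y\ln y + (1-y)\ln(1-y) \,\big].
$$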

I am not sure if I understand the question correctly. If σ(z) = y, wouldn't the partial derivatives of C with respect to the weights (or bias) be equal to zero? This would imply that the weight is already at the correct value and that the cost function cannot be reduced any further by changing the weight.
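The reasoning about the partial derivatives can be checked numerically. This Python sketch assumes the single-neuron cross-entropy with a = σ(wx + b), whose gradients are ∂C/∂w = (1/n) Σ x(σ(z) − y) and ∂C/∂b = (1/n) Σ (σ(z) − y). The training point (x = 1.0, y = 0.3) and the helper names `cost`, `grad` and `numeric_grad` are hypothetical, chosen only for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def cost(w, b, data):
    """Average cross-entropy of a single sigmoid neuron over (x, y) pairs."""
    total = 0.0
    for x, y in data:
        a = sigmoid(w * x + b)
        total += -(y * math.log(a) + (1 - y) * math.log(1 - a))
    return total / len(data)


def grad(w, b, data):
    """Analytic gradient: dC/dw = mean(x*(a - y)), dC/db = mean(a - y)."""
    n = len(data)
    dw = sum(x * (sigmoid(w * x + b) - y) for x, y in data) / n
    db = sum(sigmoid(w * x + b) - y for x, y in data) / n
    return dw, db


def numeric_grad(w, b, data, h=1e-6):
    """Central finite differences, used only to cross-check grad()."""
    dw = (cost(w + h, b, data) - cost(w - h, b, data)) / (2 * h)
    db = (cost(w, b + h, data) - cost(w, b - h, data)) / (2 * h)
    return dw, db


# Hypothetical training set: one input with an intermediate target.
data = [(1.0, 0.3)]

# With w = 0 and b = logit(0.3), sigmoid(w*x + b) equals y = 0.3.
w_opt, b_opt = 0.0, math.log(0.3 / 0.7)
print(grad(w_opt, b_opt, data))  # both components are ~0

# Elsewhere the gradient is nonzero and agrees with finite differences.
print(grad(0.5, 0.5, data), numeric_grad(0.5, 0.5, data))
```

A zero gradient on its own only says that σ(z) = y is a stationary point. It is also a minimum because, per input, the cost written in terms of z is ln(1 + e^z) − yz, which is convex in z; since z = wx + b is linear in w and b, the cost is convex in (w, b) as well, and every stationary point of a convex function is a global minimum.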

z is the weighted input to the neuron: z = wx + b.

Here b is the bias and x is the activation of a neuron in the previous layer (in this case, the input to the network itself).

w is the weight.
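Because w, b and x enter the cost only through z = wx + b and then a = σ(z), the claimed minimum value can also be checked directly in terms of a. This Python sketch scans a over (0, 1) for a single input and compares the smallest per-input cost with −[y ln y + (1 − y) ln(1 − y)]. The target y = 0.3, the grid spacing of 0.001 and the helper names are hypothetical choices for illustration.

```python
import math


def per_input_cost(a, y):
    """Cross-entropy contribution of one input with output a and target y."""
    return -(y * math.log(a) + (1 - y) * math.log(1 - a))


def binary_entropy(y):
    """-[y ln y + (1-y) ln(1-y)], taking 0 * ln 0 = 0."""
    terms = [p * math.log(p) for p in (y, 1 - y) if p > 0]
    return -sum(terms)


y = 0.3  # hypothetical intermediate target, as in a regression problem
grid = [i / 1000 for i in range(1, 1000)]  # candidate outputs a in (0, 1)
best_a = min(grid, key=lambda a: per_input_cost(a, y))

print(best_a)  # 0.3
print(per_input_cost(best_a, y), binary_entropy(y))  # the two values agree
```

Note that the minimum value is not zero when 0 < y < 1; it is zero only in the limit y → 0 or y → 1, which is why the classification argument could say the cost becomes small.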