I don't know if there is a canonical problem reducing my practical problem, so I will just try to describe it the best that I can.

I would like to cluster files into the specified number of groups, where each groups size (= the sum of sizes of files in this group) is as close as possible to each other group in this cluster (but not in other clusters). Here are the requirements:

- The first group always contain one file, which is the biggest of all groups in the cluster.

- Any other group but the first can have multiple files in it.

- The number of groups in each cluster is constrained to a maximum specified by user (but there can be less groups if it's better or there's not enough files).

- There's no constraint on the number of clusters (there can be as little or as many as necessary).

- Goal (objective function): minimize the space left in all groups (of all clusters) while maximizing the number of groups per cluster (up to the maximum specified).

The reason behind these requirements is that I am encoding files together, and any remaining space in any group will need to be filled by null bytes, which is a waste of space.

Clarification on the objective and constraints that follow from the requirements and the problem statement:

- Input is a list of files with their respective size.

- Desired output: a list of clusters with each clusters being comprised of groups of files, each group having one or several concatenated files.

- There must be at least 2 groups per cluster (except if no file is remaining) and up to a maximum of G groups (specified by user).

- Each file can be assigned to any group whatsoever and each group can be assigned to any cluster.

- The number of clusters can be chosen freely.

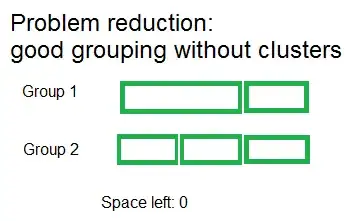

Here is a schema that shows one wrong and one good example of clustering schemes on 5 files (1 big file, and 4 files of exactly half the size of the big file) with a number of groups = 2:

The solution needs not be optimal, it can be sub-optimal as long as it's good enough, so greedy/heuristics algorithms are acceptable as long as their complexity is good enough.

Another concrete example to be clear: let's say I have this list of 10 files with their sizes, this is the input (in Python):

['file_3': 7,

'file_8': 11,

'file_6': 14,

'file_9': 51,

'file_1': 55,

'file_4': 58,

'file_5': 67,

'file_2': 68,

'file_7': 83,

'file_0': 85]

The final output is a list of clusters like this (constrained here to 3 groups per cluster):

{1: [['file_0'], ['file_7'], ['file_2', 'file_6']],

2: [['file_5'], ['file_4', 'file_3'], ['file_1', 'file_8']],

3: [['file_9']]}

And for example here (this is not a necessary output, it's just to check) the total size of each groups (ie, sum of file sizes for each group) for each cluster:

{1: [85, 83, 82], 2: [67, 65, 66], 3: [51]}

If the problem is NP-complete and/or impossible to solve in polynomial time, I can accept a solution to a reduction of the problem, dropping the first and fourth requirements (no clusters at all, only groups):

Here is the algorithm I could come up with for the full problem, but it's running in about O(n^g) where n is the length of the list of files, and g the number of groups per cluster:

Input: number of groups G per cluster, list of files F with respective sizes

- Order F by descending size

- Until F is empty:

- Create a cluster X

- A = Pop first item in F

- Put A in X[0] (X[0] is thus the first group in cluster X)

For g in 1..len(G)-1 :

- B = Pop first item in F

- Put B in X[g]

- group_size := size(B)

If group_size != size(A):

While group_size < size(A):

- Find next item C in F which size(C) <= size(A) - group_size

- Put C in X[g]

- group_size := group_size + size(C)

How can I do better? Is there a canonical problem? It seems like it's close to scheduling of parallel tasks (with tasks instead of files and time instead of size), but I'm not quite sure? Maybe a divide-and-conquer algorithm exists for this task?