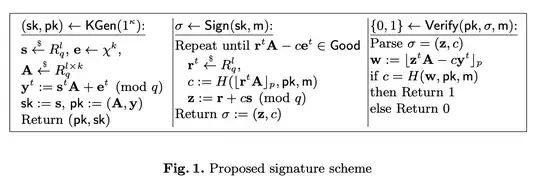

My understanding of the Fiat-Shamir with Aborts signature scheme is as follows. We compute the signature $z = cs + y$, where $s$ is the secret key and $c$ is the challenge. We need $y$ to hide $cs$, so that the distribution of $z$ is indistinguishable from a uniform distribution. If both $cs$ and $y$ are from some finite group $G$ (say $\mathbb{Z}_{N}$), then no rejection step is needed: we simply sample $y$ uniformly at random from $G$, and then $cs + y$ also appears uniformly random in $G$ (without knowledge of $s$).
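To make the group case concrete, here is a minimal sketch that enumerates every possible $y \in \mathbb{Z}_N$ and tallies the resulting $z = cs + y \bmod N$. The modulus `N`, the values of `c` and `s`, and the helper name `z_distribution` are illustrative assumptions, not taken from the paper.

```python
from collections import Counter

N = 7  # hypothetical small modulus, chosen only for illustration


def z_distribution(c, s, N):
    """Tally z = c*s + y (mod N) over every possible mask y in Z_N."""
    return Counter((c * s + y) % N for y in range(N))


# Two different (hypothetical) secrets under the same challenge.
dist_a = z_distribution(c=3, s=5, N=N)
dist_b = z_distribution(c=3, s=2, N=N)

# Every residue occurs exactly once, whatever the secret is,
# so z on its own carries no information about s.
print(dist_a == dist_b)
```

Adding a fixed element to a uniformly random group element only permutes the group, which is why the tallies agree for every choice of $s$.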

The paper that introduces the paradigm then explains that this uniform sampling of $y$ cannot be done for lattices. This is what I do not understand yet. In the lattice-based signature scheme, $s$, $c$, and $y$ are all from (subsets of) the ring $R = \mathbb{Z}_p[x] / \langle x^n+1\rangle$. But it should be possible to sample uniformly at random from that ring, correct?

The paper also explains that $y$ needs to be sampled from a small range, because picking $y$ from a large range "would require us to make a much stronger complexity assumption which would significantly decrease the efficiency of the protocol".
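As I understand it, this is where the rejection ("abort") step comes in once $y$ is restricted to a small range. The following is a toy sketch over the integers rather than over $R$, under the assumption that $0 \le cs \le S$ and that $y$ is uniform in $\{0, \dots, B-1\}$; the bounds `B` and `S` and the helper name `accepted_z` are hypothetical and only illustrate the idea.

```python
from collections import Counter

B = 20  # hypothetical: y is uniform in {0, ..., B-1}
S = 4   # hypothetical bound on the product: 0 <= c*s <= S


def accepted_z(cs, B, S):
    """Enumerate every y and keep z = cs + y only when S <= z < B."""
    kept = Counter()
    for y in range(B):
        z = cs + y          # plain integer addition, no modular wrap-around
        if S <= z < B:      # otherwise the signer aborts and retries
            kept[z] += 1
    return kept


# The accepted outputs have the same distribution for every allowed cs.
reference = accepted_z(0, B, S)
print(all(accepted_z(cs, B, S) == reference for cs in range(S + 1)))
```

In this toy setting each accepted $z$ is uniform on $\{S, \dots, B-1\}$ regardless of $cs$, at the cost of aborting with probability $S/B$.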

Why would picking $y$ from a larger range influence the complexity assumption? Is that because, for a larger $y$, it is easier to find a pre-image of the hash function $h(y) = \langle a,y\rangle$?